Tutorial: Create an MPI Cluster on the Amazon Elastic Compute Cloud (EC2)

--D. Thiebaut (talk) 14:57, 20 October 2013 (EDT)

Updated --D. Thiebaut (talk) 16:38, 15 March 2017 (EDT)

This tutorial illustrates how to set up a cluster of Linux PCs with MIT's StarCluster app to run MPI programs. The setup creates an EBS disk to store programs and data files that need to remain intact from one powering-up of the cluster to the next. This setup is used in the Computer Science CSC352 seminar on parallel and distributed computing taught at Smith College, Spring 2017.

Goals

The goals of this setup are to

- Install the StarCluster package from MIT. It lets us create an MPI-enabled cluster easily from the command line using Python scripts developed at MIT. The cluster uses a predefined Amazon Machine Image (AMI) that already contains everything required for clustering.

- Edit and initialize a configuration file on our local machine so that starcluster can access Amazon's AWS services and set up our cluster with the type of machine we need and the number of nodes we want. Clusters are set up for a Manager-Workers programming paradigm.

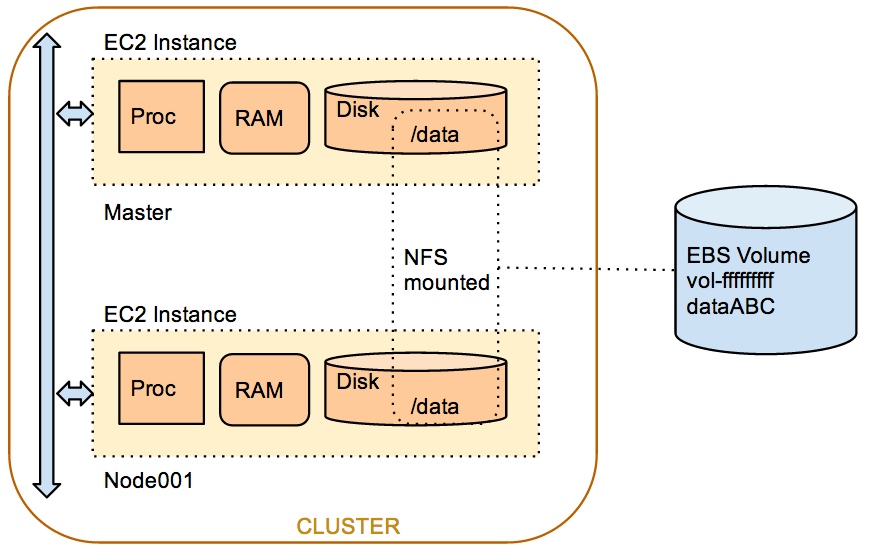

- Create an EBS volume (disk) on Amazon. EBS volumes are created on storage devices and can be attached to a cluster using the Network File System protocol, so that every node in the cluster will share files at the same path.

- Define a 2-node cluster attached to the previously created EBS volume.

- Run an MPI program on the just-created cluster.

- Be ready for a second tutorial where we create a 10-node cluster and run an MPI program on it.

Setup

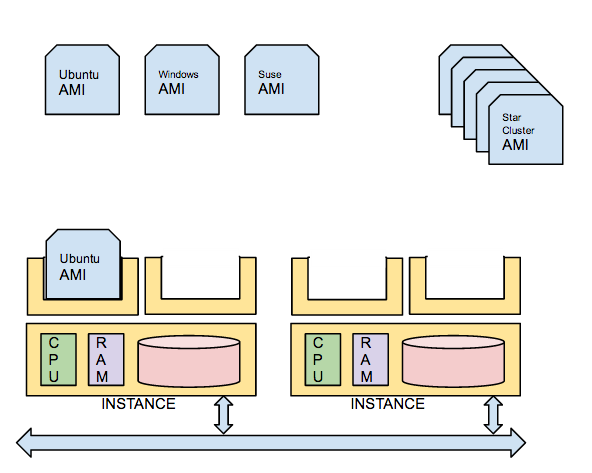

The setup is illustrated by two images. The first one shows AWS instances available for service. These can be m1.small, m3.medium, or some other type of instance. Each of the two instances has a processor, which may contain several cores, some amount of RAM, and some disk space. One instance shown in the diagram is running an AMI in virtual mode.

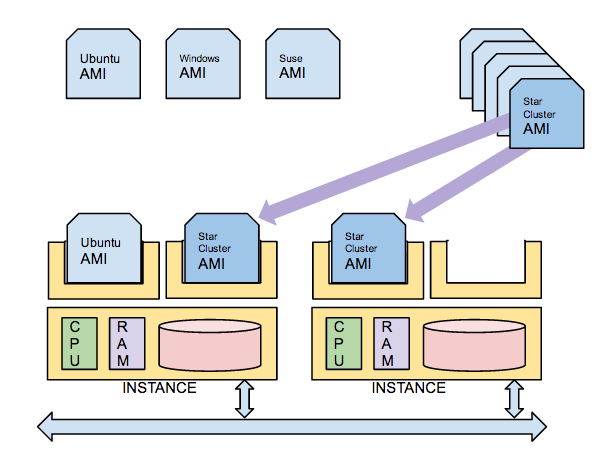

The second image shows what happens when we start a 2-node MPI cluster on AWS using the starcluster utility (which you will install next). Two AMIs preinitialized by the developers of starcluster are loaded on two instances that have space for additional virtual machines. Note that when you stop a cluster and restart it, it may be restarted on different instances.

AWS Account

You should first open an account on Amazon AWS. You can do so either with a credit card, or by getting a grant from Amazon for free compute time for educational purposes.

To create an account on AWS, start here.

First make sure you have set up the credentials for admins and users using Amazon's IAM system (the video introduction is a great way to get your groups and users set up).

Once you have created your account, log in to the console at https://aws.amazon.com, and record three pieces of information you will need to run programs on AWS clusters:

- Your Account Id

- Your Access Key

- Your Secret Access Key

Your Account Id can be obtained from the "My Account" menu. The keys can be obtained by clicking on My Account and in the menu that pops up, clicking on Security Credentials. You should be taken to a page that will either show you your keys, or allow you to create them.

Reference Material

The following references were consulted to put this page together:

- the MPI Tutorial from mpitutorial.com, and

- the StarCluster quick-start tutorial from the StarCluster project

The setup presented here uses MIT's StarCluster package. A good reference for StarCluster is its documentation pages on quickstart.html. Its Overview page is a must-read. Please read it!

You may also find Glenn K. Lockwood's tutorial on the same subject of interest.

Installing StarCluster

If unsuccessful with this section, try web.mit.edu/STAR/cluster/docs/latest/installation.html.

- The simplest way is to use the Python installer pip on the command line. This should work whether you have a Mac or a Windows PC.

pip install starcluster

- If that doesn't work, you could try:

sudo easy_install starcluster

- Once the installation has completed successfully, move on to the next step: creating a configuration file.

Creating a Configuration File

- Create a configuration file by typing the following command, and picking Option 2.

starcluster help
StarCluster - (http://star.mit.edu/cluster) (v. 0.9999)
Software Tools for Academics and Researchers (STAR)
Please submit bug reports to starcluster@mit.edu

!!! ERROR - config file /Users/thiebaut/.starcluster/config does not exist

Options:
--------
[1] Show the StarCluster config template
[2] Write config template to /Users/youraccountname/.starcluster/config
[q] Quit

Please enter your selection: 2

>>> Config template written to /Users/youraccountname/.starcluster/config
>>> Please customize the config template

- Look at the last two lines output by the command to see where the config file is stored, then open the config file in your favorite editor (mine is emacs):

emacs -nw ~/.starcluster/config

- Enter your AWS access key, secret access key, and account Id next to the corresponding variable definitions:

AWS_ACCESS_KEY_ID = ABCDEFGHIJKLMNOPQRSTUVWXYZ
AWS_SECRET_ACCESS_KEY = abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ
AWS_USER_ID = 123456789012 # your AWS Account Id, 12 digits

- Save the file and close your editor.

- Back at the Terminal prompt, create an RSA key and call it mykeyABC.rsa, replacing ABC with your initials. This will make things easier later, when you all have different RSA keys.

mkdir -p ~/.ssh
starcluster createkey mykeyABC -o ~/.ssh/mykeyABC.rsa

- Edit the config file so that it contains this new information.

emacs -nw ~/.starcluster/config

- and locate the lines:

[key mykey]
KEY_LOCATION=~/.ssh/mykey.rsa

- and change them as follows (replace ABC with your initials, as you did above):

[key mykeyABC]
KEY_LOCATION=~/.ssh/mykeyABC.rsa

- While you're there, search for "[cluster smallcluster]" in the config file, and check that the small cluster you will use also refers to the right ssh key, mykeyABC (replace ABC with your initials):

[cluster smallcluster]
# change this to the name of one of the keypair sections defined above
KEYNAME = mykeyABC

Finally, we have found that clusters using the default instance provided in the original Starcluster config file sometimes fail to start. So we'll use the m3.medium instance instead. Locate the line shown below in the config file and edit it as shown.

NODE_INSTANCE_TYPE = m3.medium
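The edits above are easy to get slightly wrong (a KEYNAME that does not match any [key ...] section, for instance), so a quick check can help before starting a cluster. The sketch below is only an illustration: `check_config` is a hypothetical helper, not part of StarCluster, and the sample text assumes an `[aws info]` section and a `CLUSTER_SIZE` line as found in the config template. It parses the text with Python's standard configparser module and reports two kinds of problems.

```python
import configparser

# Sample text standing in for ~/.starcluster/config. The values are the
# placeholders used in this tutorial, not real credentials. The [aws info]
# section name and CLUSTER_SIZE line are assumptions about the template layout.
SAMPLE_CONFIG = """
[aws info]
AWS_ACCESS_KEY_ID = ABCDEFGHIJKLMNOPQRSTUVWXYZ
AWS_SECRET_ACCESS_KEY = abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ
AWS_USER_ID = 123456789012 # your AWS Account Id, 12 digits

[key mykeyABC]
KEY_LOCATION = ~/.ssh/mykeyABC.rsa

[cluster smallcluster]
KEYNAME = mykeyABC
CLUSTER_SIZE = 2
NODE_INSTANCE_TYPE = m3.medium
"""


def check_config(text, cluster="smallcluster"):
    """Return a list of problems found in a StarCluster-style config (hypothetical helper)."""
    # Treat "#" after a value as the start of an inline comment.
    parser = configparser.ConfigParser(inline_comment_prefixes=("#",))
    parser.read_string(text)
    problems = []

    # The AWS account Id should be exactly 12 digits.
    user_id = parser.get("aws info", "AWS_USER_ID", fallback="")
    if not (user_id.isdigit() and len(user_id) == 12):
        problems.append("AWS_USER_ID should be 12 digits")

    # The cluster's KEYNAME must name an existing [key ...] section.
    keyname = parser.get("cluster " + cluster, "KEYNAME", fallback="")
    if not parser.has_section("key " + keyname):
        problems.append("no [key %s] section for KEYNAME" % keyname)

    return problems


print(check_config(SAMPLE_CONFIG))
```

To check a real file, one could pass in the contents of ~/.starcluster/config instead of the sample string; an empty list means neither of the two problems was found.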

That's it for the configuration for now. Skip the two sections on EBS volumes for now.

Creating an EBS Volume (Skip this Step)

Skip this section for now. It is necessary if you work with a large data set, or want to set up a database that stays loaded with data between experiments.

According to the AWS documentation, an EBS volume is a disk that

- is network-attached to and shared by all nodes in the cluster at the same mount point,

- persists with its data independently of the instances (i.e. you can stop the cluster and keep the data saved to the volume),

- and is highly available, highly reliable and predictable.

We now create an EBS volume for our cluster. This is the equivalent of a disk that is NFS-mounted as a predefined directory on each node of our cluster. It's a shared drive: a single physical drive that appears to belong to each node of our cluster. Files created on this volume are available to all nodes.

Starcluster supports a command expressly for the purpose of creating EBS volumes. When an EBS volume is created, it gets an Id that uniquely defines it, and which we use in the cluster config file to indicate we want it attached to our cluster when the cluster starts.

All files that will be stored on the EBS volume will remain on the volume when we terminate the cluster.

Assuming that you are at the Terminal window of your local Mac or Windows laptop, use starcluster to create a new 1-GByte EBS volume called dataABC, where ABC represents your initials (we use the us-east-1c availability zone as it is the one closest to Smith College):

starcluster createvolume --name=dataABC 1 us-east-1c
StarCluster - (http://star.mit.edu/cluster) (v. 0.9999)
Software Tools for Academics and Researchers (STAR)
Please submit bug reports to starcluster@mit.edu
*** WARNING - Setting 'EC2_PRIVATE_KEY' from environment...
*** WARNING - Setting 'EC2_CERT' from environment...
>>> No keypair specified, picking one from config...
...
>>> New volume id: vol-cf999998 ( <---- we'll need this information again! )
>>> Waiting for vol-cf999998 to become 'available'...
>>> Attaching volume vol-cf999998 to instance i-9a80f8fc...
>>> Waiting for vol-cf999998 to transition to: attached...
>>> Formatting volume...
*** WARNING - There are still volume hosts running: i-9a80f8fc, i-9a80f8fc
>>> Creating volume took 1.668 mins

Make a note of the volume Id reported by the command. In our case it is vol-cf999998.

We can now stop the Amazon server instance that was used to create the EBS volume:

starcluster terminate -f volumecreator
StarCluster - (http://star.mit.edu/cluster) (v. 0.9999)
Software Tools for Academics and Researchers (STAR)
Please submit bug reports to starcluster@mit.edu
...
>>> Removing @sc-volumecreator security group...

Attaching the EBS Volume to the Cluster (Skip this Step)

Skip this section as well if you don't need an EBS Volume.

We now edit the starcluster config file to specify that it should attach the newly created EBS volume to our cluster.

- Locate the [cluster smallcluster] section, and in this section locate the VOLUMES line, and edit it to look as follows:

VOLUMES = dataABC (remember to replace ABC by your initials)

- Then locate the Configuring EBS Volumes section, and add these three lines, which define the volume:

[volume dataABC]
VOLUME_ID = vol-cf999998 (use the volume Id returned by the starcluster command above)
MOUNT_PATH = /data
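The two edits in this section have to agree: every name on the VOLUMES line needs a matching [volume ...] section with a VOLUME_ID and a MOUNT_PATH. A minimal sketch of that cross-check follows. `missing_volume_sections` is a hypothetical helper written for this page, and it assumes that several volume names, if present, are separated by commas.

```python
import configparser

# Sample text standing in for the relevant parts of ~/.starcluster/config.
SAMPLE_VOLUME_CONFIG = """
[cluster smallcluster]
VOLUMES = dataABC

[volume dataABC]
VOLUME_ID = vol-cf999998
MOUNT_PATH = /data
"""


def missing_volume_sections(text, cluster="smallcluster"):
    """Return volume names listed in VOLUMES that lack a complete [volume ...] section."""
    parser = configparser.ConfigParser()
    parser.read_string(text)

    # Assumption: multiple volume names are comma-separated.
    listed = parser.get("cluster " + cluster, "VOLUMES", fallback="")
    names = [name.strip() for name in listed.split(",") if name.strip()]

    missing = []
    for name in names:
        section = "volume " + name
        # has_option() returns False when the section itself is absent.
        complete = (parser.has_option(section, "VOLUME_ID")
                    and parser.has_option(section, "MOUNT_PATH"))
        if not complete:
            missing.append(name)
    return missing


print(missing_volume_sections(SAMPLE_VOLUME_CONFIG))
```

An empty list means every listed volume has both settings defined.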

Starting the Cluster

The default cluster is a cluster of 2 instances of type m3.medium, which is characterized by a 64-bit virtual Intel Xeon E5-2670 v2 processor, 3.75 GB of RAM, and 1x4 GB of SSD disk storage (for OS + data). This is perfect for starting with MPI.

Once the config file is saved, simply enter the command (replace ABC at the end of the name with your initials):

starcluster start myclusterABC (This may take several minutes... be patient!)

StarCluster - (http://star.mit.edu/cluster) (v. 0.95.6)

Software Tools for Academics and Researchers (STAR)

Please submit bug reports to starcluster@mit.edu

*** WARNING - Setting 'EC2_PRIVATE_KEY' from environment...

*** WARNING - Setting 'EC2_CERT' from environment...

>>> Using default cluster template: smallcluster

>>> Validating cluster template settings...

>>> Cluster template settings are valid

>>> Starting cluster...

>>> Launching a 2-node cluster...

>>> Creating security group @sc-mpicluster...

Reservation:r-068634b1c230c1bbd

>>> Waiting for instances to propagate...

2/2 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Waiting for cluster to come up... (updating every 30s)

>>> Waiting for all nodes to be in a 'running' state...

2/2 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Waiting for SSH to come up on all nodes...

2/2 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Waiting for cluster to come up took 1.206 mins

>>> The master node is ec2-54-205-145-52.compute-1.amazonaws.com

>>> Configuring cluster...

>>> Running plugin starcluster.clustersetup.DefaultClusterSetup

>>> Configuring hostnames...

2/2 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Creating cluster user: sgeadmin (uid: 1001, gid: 1001)

2/2 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Configuring scratch space for user(s): sgeadmin

2/2 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Configuring /etc/hosts on each node

2/2 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Starting NFS server on master

>>> Configuring NFS exports path(s):

/home

>>> Mounting all NFS export path(s) on 1 worker node(s)

1/1 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Setting up NFS took 0.082 mins

>>> Configuring passwordless ssh for root

>>> Configuring passwordless ssh for sgeadmin

>>> Running plugin starcluster.plugins.sge.SGEPlugin

>>> Configuring SGE...

>>> Configuring NFS exports path(s):

/opt/sge6

>>> Mounting all NFS export path(s) on 1 worker node(s)

1/1 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Setting up NFS took 0.063 mins

>>> Installing Sun Grid Engine...

1/1 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Creating SGE parallel environment 'orte'

2/2 |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 100%

>>> Adding parallel environment 'orte' to queue 'all.q'

>>> Configuring cluster took 2.028 mins

>>> Starting cluster took 3.300 mins

The cluster is now ready to use. To login to the master node

as root, run:

$ starcluster sshmaster myclusterABC

If you're having issues with the cluster you can reboot the

instances and completely reconfigure the cluster from

scratch using:

$ starcluster restart myclusterABC

When you're finished using the cluster and wish to terminate

it and stop paying for service:

$ starcluster terminate myclusterABC

Alternatively, if the cluster uses EBS instances, you can

use the 'stop' command to shutdown all nodes and put them

into a 'stopped' state preserving the EBS volumes backing

the nodes:

$ starcluster stop myclusterABC

WARNING: Any data stored in ephemeral storage (usually /mnt)

will be lost!

You can activate a 'stopped' cluster by passing the -x

option to the 'start' command:

$ starcluster start -x myclusterABC

This will start all 'stopped' nodes and reconfigure the

cluster.

Now that the cluster is running, we can run our first MPI hello-world program. But before that, make sure you know how to stop the cluster, or restart it in case of trouble.

Starting, Restarting, Stopping, or Terminating your Cluster

The commands listed at the end of the output above are important, and you should make note of them. They will help you restart your cluster in case of trouble, stop the cluster while saving its state, or terminate it altogether and stop paying for it.

GENERAL COMMANDS

| Command | Information |
|---|---|
| starcluster start myclusterABC | Starts a cluster for the first time and gives it a name. |
| starcluster restart myclusterABC | If you're having issues with the cluster you can reboot the instances and completely reconfigure the cluster from scratch. |
| starcluster terminate myclusterABC | When you're finished using the cluster and wish to terminate it and stop paying for service. |
| starcluster stop myclusterABC | If the cluster uses EBS instances, you can use the 'stop' command to shut down all nodes and put them into a 'stopped' state preserving the EBS volumes backing the nodes. You can restart a stopped cluster with: starcluster start -x myclusterABC |
| starcluster sshmaster myclusterABC | To log in to the master node as root. |

ALWAYS REMEMBER TO STOP YOUR CLUSTER WHEN YOU ARE DONE WITH YOUR COMPUTATION.

Connecting to the Master Node of the Cluster

Assuming that you have started your cluster with the starcluster start myclusterABC command, type the following command in the console/Terminal window of your local machine, and answer yes when asked whether to continue connecting:

starcluster sshmaster myclusterABC

StarCluster - (http://star.mit.edu/cluster) (v. 0.95.6)

Software Tools for Academics and Researchers (STAR)

Please submit bug reports to starcluster@mit.edu

*** WARNING - Setting 'EC2_PRIVATE_KEY' from environment...

*** WARNING - Setting 'EC2_CERT' from environment...

The authenticity of host 'ec2-54-205-145-52.compute-1.amazonaws.com (54.205.145.52)' can't be established.

RSA key fingerprint is a4:bb:5c:7f:5b:5e:09:eb:b1:7c:a0:ea:77:4e:44:81.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'ec2-54-205-145-52.compute-1.amazonaws.com,54.205.145.52' (RSA) to the list of known hosts.

_ _ _

__/\_____| |_ __ _ _ __ ___| |_ _ ___| |_ ___ _ __

\ / __| __/ _` | '__/ __| | | | / __| __/ _ \ '__|

/_ _\__ \ || (_| | | | (__| | |_| \__ \ || __/ |

\/ |___/\__\__,_|_| \___|_|\__,_|___/\__\___|_|

StarCluster Ubuntu 13.04 AMI

Software Tools for Academics and Researchers (STAR)

Homepage: http://star.mit.edu/cluster

Documentation: http://star.mit.edu/cluster/docs/latest

Code: https://github.com/jtriley/StarCluster

Mailing list: http://star.mit.edu/cluster/mailinglist.html

This AMI Contains:

* Open Grid Scheduler (OGS - formerly SGE) queuing system

* Condor workload management system

* OpenMPI compiled with Open Grid Scheduler support

* OpenBLAS - Highly optimized Basic Linear Algebra Routines

* NumPy/SciPy linked against OpenBlas

* Pandas - Data Analysis Library

* IPython 1.1.0 with parallel and notebook support

* Julia 0.3pre

* and more! (use 'dpkg -l' to show all installed packages)

Open Grid Scheduler/Condor cheat sheet:

* qstat/condor_q - show status of batch jobs

* qhost/condor_status- show status of hosts, queues, and jobs

* qsub/condor_submit - submit batch jobs (e.g. qsub -cwd ./job.sh)

* qdel/condor_rm - delete batch jobs (e.g. qdel 7)

* qconf - configure Open Grid Scheduler system

Current System Stats:

System load: 0.08 Processes: 91

Usage of /: 34.6% of 7.84GB Users logged in: 0

Memory usage: 3% IP address for eth0: 10.147.42.140

Swap usage: 0%

https://landscape.canonical.com/

/usr/bin/xauth: file /root/.Xauthority does not exist

root@master:~#

- That's it! We're in!

- Look at the list of software packages installed by default on our instance; it contains OpenMPI! Exactly what we need to run parallel MPI programs!

Creating and Running our First MPI Program

- While we're connected to the master node, let's log in as sgeadmin, the default user created by StarCluster, and create hello.c:

root@master:~# su - sgeadmin
sgeadmin@master:~ $ emacs -nw hello.c

- Type in or copy/paste the code below:

#include <stdio.h>   /* printf and BUFSIZ defined there */
#include <stdlib.h>  /* exit defined there */
#include <mpi.h>     /* all MPI-2 functions defined there */

int main( int argc, char *argv[] ) {
    int rank, size, length;
    char name[BUFSIZ];

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    MPI_Comm_size(MPI_COMM_WORLD, &size);
    MPI_Get_processor_name(name, &length);

    printf("%s: hello world from process %d of %d\n", name, rank, size);

    MPI_Finalize();
    exit(0);
}

- Compile it:

sgeadmin@master:~ $ mpicc hello.c -o hello

- Run it:

sgeadmin@master:~ $ mpirun.mpich2 -np 10 ./hello
master: hello world from process 9 of 10
master: hello world from process 7 of 10
master: hello world from process 8 of 10
master: hello world from process 6 of 10
master: hello world from process 2 of 10
master: hello world from process 3 of 10
master: hello world from process 4 of 10
master: hello world from process 1 of 10
master: hello world from process 5 of 10
master: hello world from process 0 of 10

- If you get the same output, congratulate yourself and pat yourself on the back!

- Note that we haven't necessarily distributed the hello program to the two nodes on the cluster. To make this happen we need to tell mpirun which nodes it should run the processes on. We'll do that next.

Transferring Files between Local Machine and Cluster

But first, let's see how to transfer files back and forth between your Mac and your cluster.

If you have programs on your local machine you want to transfer to the cluster, you can use rsync, of course, but you can also use starcluster which supports a put and a get command.

We will assume that there is a file called hello_world.c in the Desktop/mpi directory of our local machine (Mac or Windows laptop) that we want to transfer to the home directory of the sgeadmin user on the cluster. This is how we would put it there:

(on the laptop, in a Terminal window)
cd
cd Desktop/mpi
starcluster put myclusterABC hello_world.c /home/sgeadmin/

Note that the put command expects:

- the name of the cluster we're using, in this case myclusterABC.

- the name of the file or directory to send (it can send whole directories). In this case hello_world.c.

- the name of the directory where to store the file or directory, in this case /home/sgeadmin/.

There is also a get command that works similarly.

You can get more information on put and get here.

Run MPI Programs on all the Nodes of the Cluster

Creating a Host File

To run an MPI program on several nodes, we need a host file, a simple text file that resides in a particular place (say the local directory where the MPI source and executable reside). This file simply contains the nicknames of all the nodes. Fortunately the MIT starcluster package does things very well, and this information is already stored in the /etc/hosts file of all the nodes of our cluster.

If you are not logged in to your master node, please do so now:

starcluster sshmaster myclusterABC

Once there, type this command:

cat /etc/hosts
127.0.0.1 localhost
# The following lines are desirable for IPv6 capable hosts
::1 ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
ff02::3 ip6-allhosts
10.29.203.115 master
10.180.162.168 node001

The information we need is in the last two lines, where we find the two nicknames we're after: master and node001.

We simply take this information and create a file called hosts in our home directory:

emacs ~/hosts

- and add these two lines:

master
node001

- Save the file.
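With two nodes, typing the host file by hand is quick, but with more nodes it becomes tedious. As a rough sketch, the nicknames could be pulled out of the /etc/hosts text automatically. `cluster_hostnames` below is a hypothetical helper, and it assumes that the cluster nodes are exactly the IPv4 entries other than the 127.x loopback address, which holds for the listing shown above.

```python
def cluster_hostnames(etc_hosts_text):
    """Return the first nickname of each non-loopback IPv4 entry in /etc/hosts text."""
    names = []
    for line in etc_hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding blanks
        if not line:
            continue
        fields = line.split()
        address, aliases = fields[0], fields[1:]
        # Skip IPv6 helper entries (they contain ':') and the loopback address.
        if ":" in address or address.startswith("127."):
            continue
        if aliases:
            names.append(aliases[0])
    return names


# The /etc/hosts contents shown in this tutorial.
SAMPLE_ETC_HOSTS = """127.0.0.1 localhost
# The following lines are desirable for IPv6 capable hosts
::1 ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
ff02::3 ip6-allhosts
10.29.203.115 master
10.180.162.168 node001
"""

# Print one nickname per line, which is the layout of the hosts file.
print("\n".join(cluster_hostnames(SAMPLE_ETC_HOSTS)))
```

On the master node, one could read the real /etc/hosts file and write the printed lines to the hosts file instead of using the sample string.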

Running Hello-World on All the Nodes

We can now run our hello world on both machines of our cluster:

sgeadmin@master:~ $ mpirun.mpich2 -np 10 -f hosts ./hello
master: hello world from process 8 of 10
master: hello world from process 0 of 10
master: hello world from process 2 of 10
master: hello world from process 4 of 10
master: hello world from process 6 of 10
node001: hello world from process 7 of 10
node001: hello world from process 3 of 10
node001: hello world from process 9 of 10
node001: hello world from process 5 of 10
node001: hello world from process 1 of 10

Notice that two different machine names, master and node001, now appear in the output lines. If you get this output, then it's time for another pat on the back!

- Alternatively, you could launch your MPI program on the two nodes using this syntax:

sgeadmin@master:~ $ mpirun.mpich2 -np 10 -host master,node001 ./hello
node001: hello world from process 5 of 10
node001: hello world from process 7 of 10
node001: hello world from process 1 of 10
node001: hello world from process 9 of 10
master: hello world from process 8 of 10
master: hello world from process 6 of 10
master: hello world from process 4 of 10
node001: hello world from process 3 of 10
master: hello world from process 2 of 10
master: hello world from process 0 of 10
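In both runs, the even-numbered processes report from master and the odd-numbered ones from node001, which is consistent with processes being dealt out to the hosts in round-robin order. The sketch below only models that pattern. `place_ranks` is a hypothetical function written for this page, and the actual placement is decided by mpirun and may differ with other options or host-file settings.

```python
def place_ranks(hosts, nprocs):
    """Assign process ranks 0..nprocs-1 to hosts in round-robin order (illustrative model)."""
    placement = {host: [] for host in hosts}
    for rank in range(nprocs):
        # Rank i goes to host number i modulo the number of hosts.
        placement[hosts[rank % len(hosts)]].append(rank)
    return placement


for host, ranks in place_ranks(["master", "node001"], 10).items():
    print(host, ranks)
```

For the two hosts and 10 processes used above, this model puts ranks 0, 2, 4, 6, 8 on master and ranks 1, 3, 5, 7, 9 on node001, matching the machine names printed by the hello program.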

Please TERMINATE your cluster if you are not going to use it again today.

Move on to the next tutorial, Computing Pi on an AWS MPI-cluster