Tutorial: Running Multithreaded Programs on AWS


Revision as of 14:48, 14 June 2012

--D. Thiebaut 15:39, 14 June 2012 (EDT)

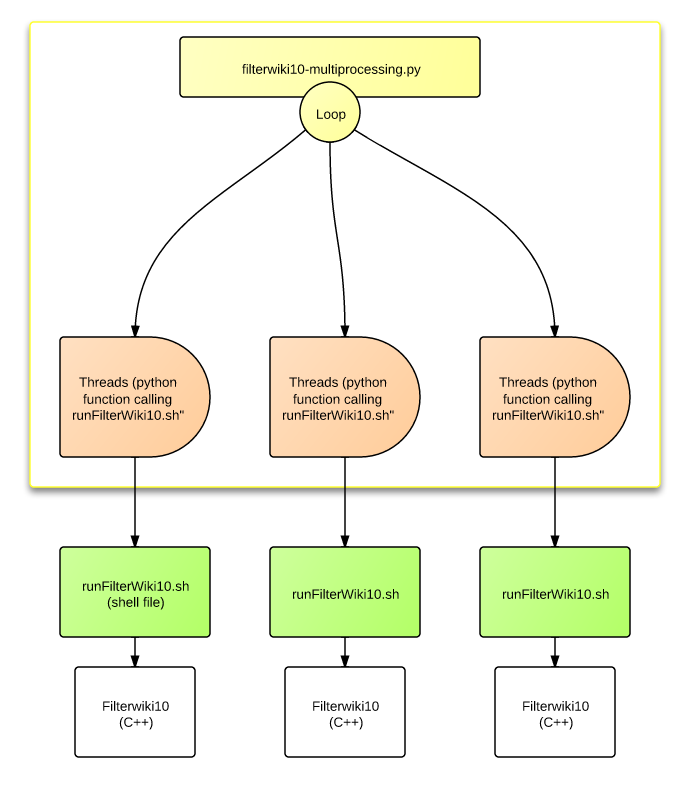

This tutorial gives a quick overview of how to run a compiled C++ program on a multicore machine by launching several copies of it in parallel, one per input file. Here the multicore machine is an AWS (Amazon) instance, but any multicore desktop machine would work as well.


Overall Block Diagram

Main Python Program: runMultipleFilterWiki10.py

#! /usr/bin/env python2.6
# D. Thiebaut
# Starts one runFilterwiki10.sh process per split file and waits for all of them.
import sys
import multiprocessing
import subprocess

def syntax():
    print "runMultipleFilterWiki10.py -start nn -end nn"

def runFilterWiki( id ):
    # runFilterwiki10.sh url inFile outFile
    # url = http://hadoop0.dyndns.org/wikipediagz/1line.split.nnn.gz
    id = str( id )   # id arrives as an int; convert it before concatenating
    url = "http://hadoop0.dyndns.org/wikipediagz/1line.split." + id + ".gz"
    print "runFilterwiki10.sh", url, "infile."+id, "outfile."+id
    output = subprocess.Popen( ["runFilterwiki10.sh", url, "infile."+id, "outfile."+id],
                               stdout=subprocess.PIPE ).communicate()[0]
    print output

def main():
    start = None
    end   = None
    # pick up the values that follow -start and -end on the command line
    for i, arg in enumerate( sys.argv ):
        if arg=="-start" and i+1 < len( sys.argv ):
            start = sys.argv[i+1]
        if arg=="-end" and i+1 < len( sys.argv ):
            end = sys.argv[i+1]

    if start is None or end is None:
        syntax()
        return

    start = int( start )
    end   = int( end )
    print "start = ", start
    print "end = ", end

    # one process per split; range() stops at end-1, so -end is exclusive
    processes = []
    for i in range( start, end ):
        p = multiprocessing.Process( target=runFilterWiki, args=( i, ) )
        p.start()
        processes.append( p )

    # wait for every process to finish
    for p in processes:
        p.join()

if __name__ == "__main__":
    main()
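The program above starts every process at once, so a wide -start/-end range launches more processes than the machine has cores. The following is a minimal sketch, assuming Python 3 rather than the python2.6 used above, of how multiprocessing.Pool could cap the number of concurrent workers. The names build_command, run_split, and run_all are hypothetical and are not part of the tutorial's code.

```python
import multiprocessing
import subprocess


def build_command(split_id):
    """Return the argument list for one split, mirroring runFilterWiki()."""
    sid = str(split_id)
    url = "http://hadoop0.dyndns.org/wikipediagz/1line.split." + sid + ".gz"
    return ["runFilterwiki10.sh", url, "infile." + sid, "outfile." + sid]


def run_split(split_id):
    """Run the shell script for one split and return its exit status."""
    return subprocess.call(build_command(split_id))


def run_all(start, end, worker=run_split, processes=None):
    """Process splits start..end-1 with at most `processes` running at once.

    processes=None lets Pool create one worker per available core.
    """
    pool = multiprocessing.Pool(processes)
    try:
        return pool.map(worker, range(start, end))
    finally:
        pool.close()
        pool.join()
```

Under these assumptions, a call such as run_all(0, 40) would work through 40 splits while keeping only as many copies of the script running as there are cores. As in the original program, runFilterwiki10.sh has to be found on the PATH.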

Shell File: runFilterwiki10.sh

#! /bin/bash
# runFilterwiki10.sh
# D. Thiebaut
# fetches the input file from a URL, runs filterwiki10 on it,
# and uploads the compressed result
#
USAGE="syntax: runFilterwiki10.sh urlOfInputFile localInputFileName localOutFileName"

if [ $# -ne 3 ]; then
    echo "$USAGE"
    exit 1
fi

url=$1
inFile=$2
outFile=$3

# download the compressed split
echo /usr/bin/env curl -s -o "${inFile}.gz" -G "$url"
/usr/bin/env curl -s -o "${inFile}.gz" -G "$url"

# uncompress it (gunzip replaces ${inFile}.gz with ${inFile})
echo gunzip "${inFile}.gz"
gunzip "${inFile}.gz"

# filter the split, then delete the input file
echo ./filterwiki10 -in "$inFile" -out "$outFile"
./filterwiki10 -in "$inFile" -out "$outFile"
rm "$inFile"

# compress the result, upload it, and delete the local copy
echo gzip "$outFile"
gzip "$outFile"
/usr/bin/env curl -s -F "uploadedfile=@${outFile}.gz" http://hadoop0.dyndns.org/uploader.php
rm "${outFile}.gz"
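The gunzip and gzip steps of the script can also be done without external tools. This is a minimal sketch, assuming Python 3 and only the standard library. The helper names gunzip_file and gzip_file are hypothetical, and unlike the command-line tools they leave the source file in place.

```python
import gzip
import shutil


def gunzip_file(gz_path, out_path):
    """Decompress gz_path into out_path (the .gz file is kept)."""
    with gzip.open(gz_path, "rb") as src, open(out_path, "wb") as dst:
        shutil.copyfileobj(src, dst)


def gzip_file(in_path, gz_path):
    """Compress in_path into gz_path (the original file is kept)."""
    with open(in_path, "rb") as src, gzip.open(gz_path, "wb") as dst:
        shutil.copyfileobj(src, dst)
```

For example, gunzip_file("infile.0.gz", "infile.0") would play the role of the gunzip step, and gzip_file("outfile.0", "outfile.0.gz") the role of the gzip step.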

C++ Program: main.cpp

/***************************************************************************
 filterwiki10
 D. Thiebaut
 6/6/10

 Qt3 program.
 Compile with qcompile3

 This program was put together to test XGrid against hadoop.

 It reads xml files produced by SplitFile, which reside in SplitFile/splits,
 and generates a different xml file.

 Syntax:
     ./filterwiki10 -in 1line.split.0 -out out.txt

 Format of input:
 ================

 1line.split.0 is a chunk resulting from splitting the original wikipedia dump
 into files of roughly 60 MB (for hadoop split size).
 Each line of 1line.split.0 contains a <page>...</page> entry, where all the
 information between <page> and </page> is the same as in the enwiki dump.

 This was done so that hadoop could take that file, cut it into lines, and
 give each line directly to a program, so that each program would get exactly
 one page of wikipedia.

 Processing
 ==========

 Format of output
 ================

 The output is xml, in the format of an output that could result from
 hadoop. Each line is of the form

     Id\t<xml>...</xml>

 i.e. the Id of the wikipedia page, followed by a long line of xml sandwiched
 between <xml> and </xml>. The contents look like this (with \n added for
 readability):

 <xml>
   <Id>12</Id>
   <ti>Anarchism</ti>
   <contribs></contribs>
   <cats>
     <cat>Anarchism</cat>
     <cat>Political ideologies</cat>
   </cats>
   <lnks>
     <lnk>6 February 1934 crisis</lnk>
     <lnk>A Greek-English Lexicon</lnk>
   </lnks>
   <mstFrqs>
     <wrd><w>anarchism</w><f>188</f></wrd>
     <wrd><w>anarchist</w><f>111</f></wrd>
     <wrd><w>individualist</w><f>55</f></wrd>
   </mstFrqs>
 </xml>

***************************************************************************/

#include <iostream>        // cerr, endl
#include <qapplication.h>
#include <qobject.h>
#include <qtimer.h>
#include "engine.h"

using namespace std;

int main(int argc, char *argv[]) {

    // both "-in file" and "-out file" are required: 4 arguments plus argv[0]
    if ( argc<5 ) {
        cerr << "Syntax: " << argv[0] << " -in inFileName -out outFileName" << endl << endl;
        return 1;
    }

    QApplication app( argc, argv, false );   // false: no GUI
    engineClass engine;
    engine.setDebug( false );

    // i+1 < argc guarantees that argv[i+1] is a real argument
    for ( int i=1; i<argc; i++ ) {
        if ( QString( argv[i] )=="-in" && ( i+1 < argc ) )
            engine.setInFileName( QString( argv[i+1] ) );
        if ( QString( argv[i] )=="-out" && ( i+1 < argc ) )
            engine.setOutFileName( QString( argv[i+1] ) );
    }

    //--- start main application ---
    // run mainEngine() as soon as the event loop starts
    QTimer::singleShot( 0, &engine, SLOT( mainEngine() ) );

    return app.exec();
}
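The output format described in the header comment (Id, a tab, then one line of xml) can be read back with a few lines of code. This is a minimal sketch, assuming Python 3 and that each line is well-formed XML, which the source does not state explicitly. The function name parse_output_line and the dictionary keys are hypothetical; the tag names come from the sample in the comment.

```python
import xml.etree.ElementTree as ET


def parse_output_line(line):
    """Split one 'Id<TAB><xml>...</xml>' line and return its fields as a dict."""
    page_id, xml_text = line.rstrip("\n").split("\t", 1)
    root = ET.fromstring(xml_text)
    return {
        "id": page_id,
        "title": root.findtext("ti"),
        "categories": [c.text for c in root.findall("cats/cat")],
        "links": [n.text for n in root.findall("lnks/lnk")],
        # (word, frequency) pairs from the <mstFrqs> section
        "frequencies": [(w.findtext("w"), int(w.findtext("f")))
                        for w in root.findall("mstFrqs/wrd")],
    }
```

Applied to every line of an uncompressed output file, this would give one dictionary per wikipedia page.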