Tutorial: TensorFlow References


Revision as of 09:17, 8 August 2016

--D. Thiebaut (talk) 12:00, 6 August 2016 (EDT)


References & Tutorials

This page contains resources that will help you get up to speed with Machine Learning and TensorFlow. I have attempted to list the resources in a logical order, so that if you start from scratch, you will be presented with increasingly sophisticated concepts as you progress. Note that all these tutorials assume a good understanding of Python and of how to install various packages for Python.

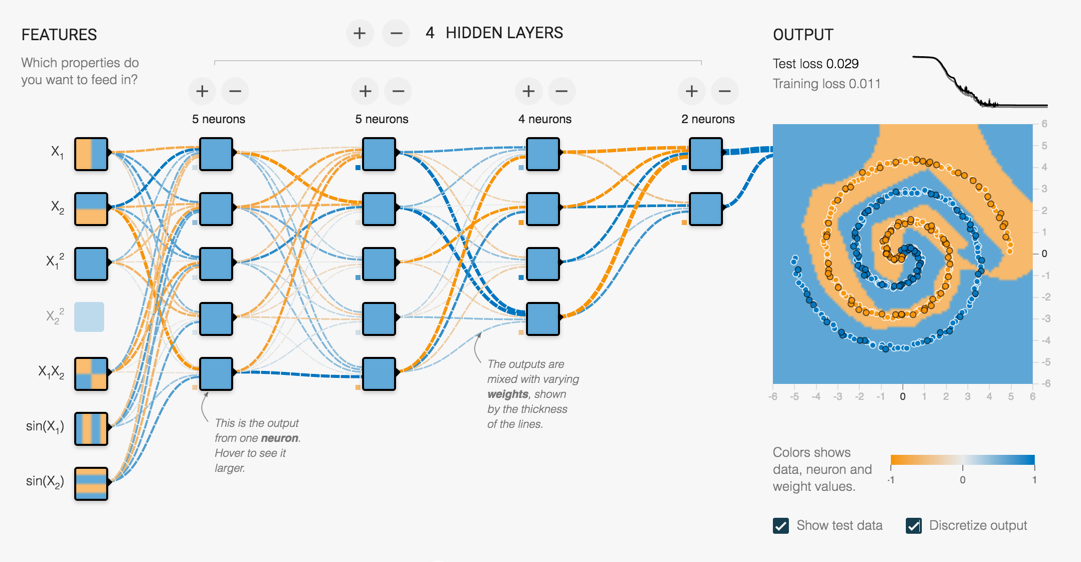

Understanding Deep Neural Nets

- Step 1: Start with this article from Scientific American: Unveiling the Hidden Layers of Deep Learning, by Amanda Montanez, editor at Scientific American. This short article references Springtime for AI, the rise of deep-learning, published by Scientific American (you'll need a subscription to access this article).

- Step 2: Play with the TensorFlow playground, created by Daniel Smilkov and Shan Carter.

Machine-Learning Recipes

This series, presented by Josh Gordon, is a very good (though quick) introduction to various Machine Learning concepts. Coding all the examples presented is highly recommended.

Machine Learning Recipe #1: Hello world!

Machine Learning Recipe #2: Decision Trees

Machine Learning Recipe #3: What makes a good feature?

Machine Learning Recipe #4: Pipeline

Machine Learning Recipe #5: Writing your own classifier
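As a warm-up for coding the examples yourself, here is a minimal sketch of what writing your own classifier can look like: a one-nearest-neighbor classifier in plain Python. The class name, the `fit`/`predict` method names, and the toy fruit data are assumptions made for illustration; they are not taken from the videos, whose code may differ.

```python
import math


def euclidean(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class NearestNeighborClassifier:
    """Hypothetical 1-nearest-neighbor classifier (illustrative only)."""

    def fit(self, features, labels):
        # "Training" simply memorizes the labeled examples.
        self.features = list(features)
        self.labels = list(labels)
        return self

    def predict(self, rows):
        # Each row gets the label of its closest memorized example.
        return [self._closest_label(row) for row in rows]

    def _closest_label(self, row):
        best_index = min(
            range(len(self.features)),
            key=lambda i: euclidean(row, self.features[i]),
        )
        return self.labels[best_index]


# Made-up toy data: [weight in grams, texture (0 = bumpy, 1 = smooth)]
train_X = [[140, 1], [130, 1], [150, 0], [170, 0]]
train_y = ["apple", "apple", "orange", "orange"]

clf = NearestNeighborClassifier().fit(train_X, train_y)
predictions = clf.predict([[160, 0], [135, 1]])
print(predictions)
```

Note that the weight feature dominates the distance here because the two features are on very different scales; rescaling features is a common remedy.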

Intro to Machine Learning and TensorFlow, Google video

This video is a very good introduction to TensorFlow that uses the wine-quality data set from the UC Irvine Machine Learning Repository, specifically the red-wine data (winequality-red.csv). It starts with a 30-minute math tutorial presented by jenhsin0@gmail.com, which covers

- entropy,

- variable dependency,

- dimensionality reduction,

- graph representation of models,

- activation functions in neural nets,

- loss functions, and

- gradient descent.
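Several of the topics listed above are easy to experiment with in a few lines of plain Python. The sketch below is not taken from the video: the function names, the one-parameter model `y = w * x`, the learning rate, and the toy data are all assumptions chosen for illustration. It shows Shannon entropy, two common activation functions, a mean-squared-error loss, and gradient descent on that loss.

```python
import math


def entropy(probabilities):
    """Shannon entropy, in bits, of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Rectified linear activation: zero for negative inputs."""
    return max(0.0, z)


def mse_loss(w, xs, ys):
    """Mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_gradient(w, xs, ys):
    """Derivative of mse_loss with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs, ys, learning_rate=0.05, steps=200):
    """Repeatedly step w against the gradient of the loss."""
    w = 0.0
    for _ in range(steps):
        w -= learning_rate * mse_gradient(w, xs, ys)
    return w


# Made-up data generated by y = 2 * x, so the best w is 2.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
w = gradient_descent(xs, ys)

print("entropy of a fair coin:", entropy([0.5, 0.5]))
print("fitted w:", w, "final loss:", mse_loss(w, xs, ys))
```

With a learning rate that is too large for the data, the same loop diverges instead of converging, which is worth trying by hand.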

After a 10-minute pause (which is part of the video), the next speaker starts with a Jupyter notebook exploring various aspects of the wine-quality data. The notebook and associated data are available from this GitHub repository.
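For a first look at the data outside the notebook, the following is a minimal sketch that uses only the Python standard library. It assumes the file is semicolon-delimited with a quoted header row and a `quality` column, as distributed by the UC Irvine repository. The helper names and the three-column inline sample are made up so that the example runs without the real file; it is not the notebook's code.

```python
import csv
import io
from statistics import mean


def load_wine_rows(handle):
    """Read semicolon-delimited rows into dicts of floats."""
    reader = csv.DictReader(handle, delimiter=";")
    return [
        {name: float(value) for name, value in row.items()}
        for row in reader
    ]


def mean_by_quality(rows, column):
    """Average one column for each quality score."""
    groups = {}
    for row in rows:
        groups.setdefault(int(row["quality"]), []).append(row[column])
    return {quality: mean(values) for quality, values in sorted(groups.items())}


# Made-up sample in the assumed layout (illustrative values only).
SAMPLE = '''"fixed acidity";"alcohol";"quality"
7.4;9.4;5
7.8;9.8;5
11.2;10.8;6
'''

rows = load_wine_rows(io.StringIO(SAMPLE))
alcohol_by_quality = mean_by_quality(rows, "alcohol")
print(alcohol_by_quality)

# With the real file, the call would look like:
# with open("winequality-red.csv", newline="") as handle:
#     rows = load_wine_rows(handle)
```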

WILDML: A Convolutional Neural Net for Text Classification in TF

- WildML Tutorial: A very detailed tutorial on text classification using TensorFlow.