Difference between revisions of "Tutorial: Running MPI Programs on Hadoop Cluster"


| − | --[[User:Thiebaut|D. Thiebaut]] ([[User talk:Thiebaut|talk]]) 13:57, 15 October 2013 (EDT) | + | --[[User:Thiebaut|D. Thiebaut]] ([[User talk:Thiebaut|talk]]) 13:57, 15 October 2013 (EDT)<br /> |

| + | Revised: --[[User:Thiebaut|D. Thiebaut]] ([[User talk:Thiebaut|talk]]) 12:02, 15 March 2017 (EDT) | ||

---- | ---- | ||

| + | <br /> | ||

| + | __TOC__ | ||

| + | <br /> | ||

| + | =Change your Password= | ||

| + | <br /> | ||

| + | * Log in to hadoop01 with the account provided to you, and change your temporary password: | ||

| + | |||

| + | passwd | ||

| + | |||

| + | * You should now be all set. | ||

| + | <br /> | ||

| − | =Setup to | + | =Setup Password-Less '''ssh''' to the Hadoop cluster= |

| − | * | + | <br /> |

| + | [[Image:MPIHapoopClusterStep1.png|600px|center]] | ||

| + | <br /> | ||

| + | <onlysmith> | ||

| + | * The directions below are taken from [http://www.linuxproblem.org/art_9.html this page], and summarized here for the hadoop cluster. In all the steps you will need to replace ''yourusername'' by your actual user name on the hadoop cluster. | ||

| + | <br/> | ||

| + | :* ssh to hadoop01 | ||

| + | <br /> | ||

| + | :::<source lang="text"> | ||

| + | ssh -Y yourusername@hadoop01.dyndns.org | ||

| + | </source> | ||

| + | <br /> | ||

| + | :* enter the following commands | ||

| − | + | <br /> | |

| − | + | :::<source lang="text"> | |

| − | + | ssh-keygen -t rsa ''(and press ENTER 3 times)'' | |


ls .ssh | ls .ssh | ||

cd .ssh | cd .ssh | ||

| − | + | ssh yourusername@hadoop02.dyndns.org mkdir -p .ssh | |

| − | + | cat ~/.ssh/id_rsa.pub | ssh yourusername@hadoop02.dyndns.org 'cat >> .ssh/authorized_keys' | |

| − | + | </source> | |

| − | + | <br /> | |

| − | + | ||

| − | - | + | |

| − | - | + | :* Now ssh to '''hadoop02''' and verify that you can ssh without password, as the authentication is now done through rsa keys. |

| − | cat id_rsa. | + | :* exit from hadoop02 and find yourself again on '''hadoop01''' |

| − | emacs -nw | + | :* repeat the last 2 commands above (ssh and cat) for '''hadoop03''', and '''hadoop04''': |

| − | + | <br /> | |

| + | :::<source lang="text"> | ||

| + | ssh yourusername@hadoop03.dyndns.org mkdir -p .ssh | ||

| + | cat ~/.ssh/id_rsa.pub | ssh yourusername@hadoop03.dyndns.org 'cat >> .ssh/authorized_keys' | ||

| + | |||

| + | ssh yourusername@hadoop04.dyndns.org mkdir -p .ssh | ||

| + | cat ~/.ssh/id_rsa.pub | ssh yourusername@hadoop04.dyndns.org 'cat >> .ssh/authorized_keys' | ||

| + | </source> | ||

| + | <br /> | ||

| + | |||

| + | :* verify that you can ssh to '''hadoop03''' and '''hadoop04''' without password. | ||

| + | * You should now be all set with passwordless ssh to the hadoop01, 02, 03, and 04 cluster. | ||

| + | </onlysmith> | ||

| + | <br /> | ||

| + | |||

| + | =Setup Aliases= | ||

| + | <br /> | ||

| + | * This section is not required, but will save you a lot of typing. | ||

| + | * Edit your .bashrc file | ||

| + | |||

| + | emacs -nw ~/.bashrc | ||

| + | |||

| + | * and add these 3 lines at the end, where you will replace ''yourusername'' by your actual user name. | ||

| + | |||

| + | alias hadoop02='ssh -Y yourusername@hadoop02.dyndns.org' | ||

| + | alias hadoop03='ssh -Y yourusername@hadoop03.dyndns.org' | ||

| + | alias hadoop04='ssh -Y yourusername@hadoop04.dyndns.org' | ||

| + | |||

| + | * Then tell bash to re-read the .bashrc file, since we just modified it. This way bash will learn the 3 new aliases we've defined. | ||

| + | |||

| + | source ~/.bashrc | ||

| + | |||

| + | * Now you should be able to connect to the servers using their name only. For example: | ||

| + | |||

| + | hadoop02 | ||

| + | |||

| + | : this should connect you to '''hadoop02''' directly. | ||

| + | * Exit from hadoop02, and try the same thing for '''hadoop03''', and '''hadoop04'''. | ||

| + | * Note, if you like even shorter commands, you could modify the .bashrc file and make the aliases h2, h3, and h4... Up to you. | ||

| + | |||

| + | <br /> | ||

| − | + | =Create HelloWorld Program & Test MPI= | |

| + | <br /> | ||

| + | [[Image:MPIHapoopClusterStep2.png|600px|center]] | ||

| + | <br /> | ||

| − | + | MPI should already be installed, and your account ready to access it. To verify this, you will create an MPI directory and create a simple MPI "Hello World!" program in it. You will then compile it, and run it as an MPI application. | |

| − | * create a | + | * First create a directory called '''mpi''' |

| − | * | + | |

| + | cd | ||

| + | mkdir mpi | ||

| + | |||

| + | * Then '''cd''' to this directory and create the following C program: | ||

| + | |||

| + | cd mpi | ||

| + | emacs -nw helloWorld.c | ||

| + | |||

| + | :Here's the code: | ||

| + | <br /> | ||

| + | ::<source lang="C"> | ||

| + | // helloWorld.c | ||

| + | // A simple hello world MPI program that can be run | ||

| + | // on any number of computers | ||

| + | #include <mpi.h> | ||

| + | #include <stdio.h> | ||

| + | |||

| + | int main( int argc, char *argv[] ) { | ||

| + | int rank, size, nameLen; | ||

| + | char hostName[80]; | ||

| + | |||

| + | MPI_Init( &argc, &argv); /* start MPI */ | ||

| + | MPI_Comm_rank( MPI_COMM_WORLD, &rank ); /* get current process Id */ | ||

| + | MPI_Comm_size( MPI_COMM_WORLD, &size ); /* get # of processes */ | ||

| + | MPI_Get_processor_name( hostName, &nameLen); | ||

| + | |||

| + | printf( "Hello from Process %d of %d on Host %s\n", rank, size, hostName ); | ||

| + | MPI_Finalize(); | ||

| + | return 0; | ||

| + | } | ||

| + | |||

| + | </source> | ||

| + | <br /> | ||

| + | =Compile & Run on 1 Server= | ||

| + | <br /> | ||

| + | [[Image:MPIHapoopClusterStep3.png|600px|center]] | ||

| + | <br /> | ||

| + | |||

| + | * Compile and Run the program: | ||

| + | <br /> | ||

| + | |||

| + | '''mpicc -o hello helloWorld.c''' | ||

| + | '''mpirun -np 2 ./hello''' | ||

| + | Hello from Process 1 of 2 on Host Hadoop01 | ||

| + | Hello from Process 0 of 2 on Host Hadoop01 | ||

| + | |||

| + | * If you see the two lines starting with "Hello from Process" on your screen, MPI is installed and working on your system! | ||

| + | |||

| + | <br /> | ||

| + | |||

| + | =Configuration for Running On Multiple Servers= | ||

<onlysmith> | <onlysmith> | ||

| + | <br /> | ||

| + | * You will now create a file called '''hosts''' that contains all the IP addresses of the servers in our cluster. | ||

| + | * The easy way to do this is to '''dig''' each server one after the other: | ||

| + | |||

| + | dig +short hadoop01.dyndns.org | ||

| + | dig +short hadoop02.dyndns.org | ||

| + | dig +short hadoop03.dyndns.org | ||

| + | dig +short hadoop04.dyndns.org | ||

| − | + | * Create a file called '''hosts''' in the directory where the mpi programs are located, and store the IP addresses you will have obtained with '''dig''' in it. | |

| − | + | ||

| − | + | * For those of you who are bash enthusiasts, you can do this in one command: | |

| − | + | ||

| − | + | for i in 1 2 3 4 ; do dig +short hadoop0${i}.dyndns.org ; done > hosts | |

| − | + | ||


</onlysmith> | </onlysmith> | ||

| + | =Running HelloWorld.c on all Four Servers= | ||

| + | <br /> | ||

| + | ==Rsync Your Files to Other Servers== | ||

| + | <br /> | ||

| + | [[Image:MPIHapoopClusterStep4.png|600px|center]] | ||

| + | <br /> | ||

| + | <tanbox> | ||

| + | '''Important Note''': Every time you create a new program on Hadoop01, you will need to replicate it on the other 3 servers. This is something that is easily forgotten, so make a mental note to always copy your latest files to the other 3 servers. | ||

| + | </tanbox> | ||

| + | <br /> | ||

| + | * One powerful command to copy files remotely is '''rsync''': | ||

| + | |||

| + | cd | ||

| + | cd mpi | ||

| + | rsync -azv * yourusername@hadoop02.dyndns.org:mpi/ | ||

| + | rsync -azv * yourusername@hadoop03.dyndns.org:mpi/ | ||

| + | rsync -azv * yourusername@hadoop04.dyndns.org:mpi/ | ||

| + | |||

| + | * Or, if you like bash for-loops: | ||

| + | |||

| + | cd | ||

| + | cd mpi | ||

| + | for i in 2 3 4 ; do rsync -azv * yourusername@hadoop0${i}.dyndns.org:mpi/ ; done | ||

| + | |||

| + | * You are now ready to run '''hello''' on all four servers. | ||

| + | |||

| + | <br /> | ||

| + | ==Running on All Four Servers== | ||

| + | <br /> | ||

| + | [[Image:MPIHapoopClusterStep5.png|600px|center]] | ||

| + | <br /> | ||

| + | * Enter the following command: | ||

| + | |||

| + | '''mpirun -np 4 --hostfile hosts ./hello''' | ||

| + | Hello from Process 0 of 4 on Host Hadoop01 | ||

| + | Hello from Process 3 of 4 on Host Hadoop04 | ||

| + | Hello from Process 2 of 4 on Host Hadoop03 | ||

| + | Hello from Process 1 of 4 on Host Hadoop02 | ||

| + | |||

| + | * And try running 10 processes on 4 servers: | ||

| + | |||

| + | '''mpirun -np 10 --hostfile hosts ./hello''' | ||

| + | Hello from Process 3 of 10 on Host Hadoop04 | ||

| + | Hello from Process 7 of 10 on Host Hadoop04 | ||

| + | Hello from Process 1 of 10 on Host Hadoop02 | ||

| + | Hello from Process 5 of 10 on Host Hadoop02 | ||

| + | Hello from Process 9 of 10 on Host Hadoop02 | ||

| + | Hello from Process 2 of 10 on Host Hadoop03 | ||

| + | Hello from Process 6 of 10 on Host Hadoop03 | ||

| + | Hello from Process 0 of 10 on Host Hadoop01 | ||

| + | Hello from Process 8 of 10 on Host Hadoop01 | ||

| + | Hello from Process 4 of 10 on Host Hadoop01 | ||

| + | |||

| + | |||

| + | <br /> | ||


| + | [[Category:MPI]][[Category:C]][[Category:CSC352]] | ||

Latest revision as of 13:16, 23 March 2017

--D. Thiebaut (talk) 13:57, 15 October 2013 (EDT)

Revised: --D. Thiebaut (talk) 12:02, 15 March 2017 (EDT)


Change your Password

- Log in to hadoop01 with the account provided to you, and change your temporary password:

passwd

- You should now be all set.

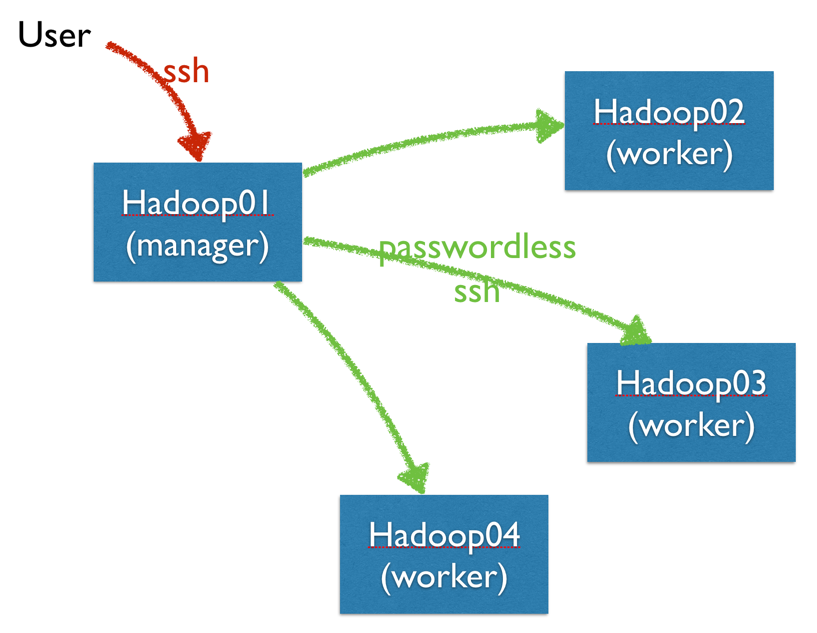

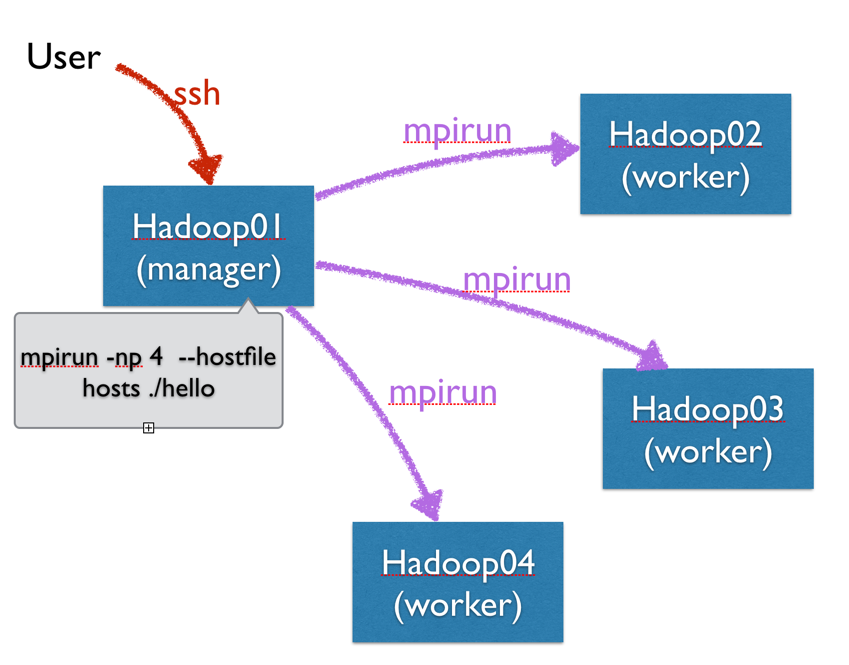

Setup Password-Less ssh to the Hadoop cluster

Setup Aliases

- This section is not required, but will save you a lot of typing.

- Edit your .bashrc file

emacs -nw ~/.bashrc

- and add these 3 lines at the end, where you will replace yourusername by your actual user name.

alias hadoop02='ssh -Y yourusername@hadoop02.dyndns.org'
alias hadoop03='ssh -Y yourusername@hadoop03.dyndns.org'
alias hadoop04='ssh -Y yourusername@hadoop04.dyndns.org'

- Then tell bash to re-read the .bashrc file, since we just modified it. This way bash will learn the 3 new aliases we've defined.

source ~/.bashrc

- Now you should be able to connect to the servers using their name only. For example:

hadoop02

- this should connect you to hadoop02 directly.

- Exit from hadoop02, and try the same thing for hadoop03, and hadoop04.

- Note, if you like even shorter commands, you could modify the .bashrc file and make the aliases h2, h3, and h4... Up to you.
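The three alias lines follow one pattern, so they can also be generated with a short loop. The sketch below is only an illustration: `CLUSTER_USER` and `make_aliases` are hypothetical names that do not appear in the tutorial, and the script only prints the lines so you can inspect them before adding them to .bashrc.

```shell
#!/bin/bash
# Hypothetical placeholder (not part of the tutorial): your cluster user name.
CLUSTER_USER="yourusername"

# Print one alias definition per remote server (hadoop02 to hadoop04).
make_aliases() {
    local i
    for i in 2 3 4; do
        printf "alias hadoop0%s='ssh -Y %s@hadoop0%s.dyndns.org'\n" \
            "$i" "$CLUSTER_USER" "$i"
    done
}

make_aliases
```

Once the printed lines look right, something like `make_aliases >> ~/.bashrc` followed by `source ~/.bashrc` would install them.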

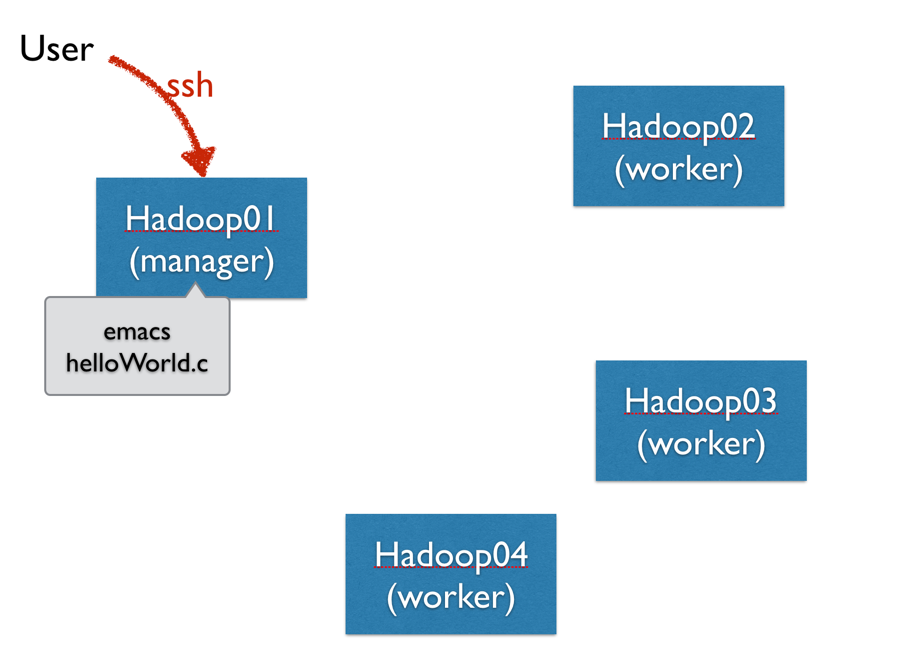

Create HelloWorld Program & Test MPI

MPI should already be installed, and your account ready to access it. To verify this, you will create an MPI directory and create a simple MPI "Hello World!" program in it. You will then compile it, and run it as an MPI application.

- First create a directory called mpi

cd
mkdir mpi

- Then cd to this directory and create the following C program:

cd mpi
emacs -nw helloWorld.c

- Here's the code:

// helloWorld.c
// A simple hello world MPI program that can be run
// on any number of computers
#include <mpi.h>
#include <stdio.h>

int main( int argc, char *argv[] ) {
    int rank, size, nameLen;
    char hostName[80];

    MPI_Init( &argc, &argv );                    /* start MPI */
    MPI_Comm_rank( MPI_COMM_WORLD, &rank );      /* get current process Id */
    MPI_Comm_size( MPI_COMM_WORLD, &size );      /* get # of processes */
    MPI_Get_processor_name( hostName, &nameLen );

    printf( "Hello from Process %d of %d on Host %s\n", rank, size, hostName );
    MPI_Finalize();
    return 0;
}

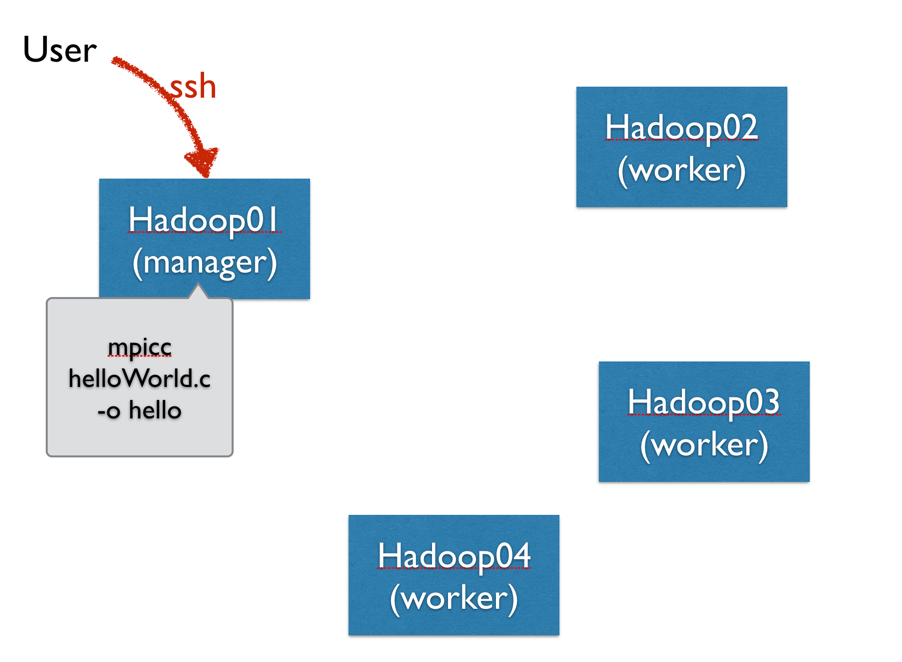

Compile & Run on 1 Server

- Compile and Run the program:

mpicc -o hello helloWorld.c
mpirun -np 2 ./hello
Hello from Process 1 of 2 on Host Hadoop01
Hello from Process 0 of 2 on Host Hadoop01

- If you see the two lines starting with "Hello from Process" on your screen, MPI is installed and working on your system!
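Checking the output by eye works for two processes, but a small filter can do the counting for larger runs. The sketch below is a hypothetical helper (the name `count_hello` is an assumption, not part of the tutorial): it reads program output on standard input and compares the number of greeting lines with the number of processes you asked for. It is fed a copy of the sample output here so that it runs without MPI.

```shell
#!/bin/bash
# count_hello EXPECTED: read program output on stdin and succeed only if
# exactly EXPECTED lines start with "Hello from Process".
count_hello() {
    local expected="$1"
    local found
    # grep -c prints the number of matching lines (0 when there are none).
    found=$(grep -c '^Hello from Process' || true)
    if [ "$found" -eq "$expected" ]; then
        echo "OK: $found of $expected processes reported"
    else
        echo "MISMATCH: $found of $expected processes reported"
        return 1
    fi
}

# Sample input copied from the run above, so no cluster is needed here.
printf '%s\n' \
    'Hello from Process 1 of 2 on Host Hadoop01' \
    'Hello from Process 0 of 2 on Host Hadoop01' | count_hello 2
```

On the cluster, the same check would be `mpirun -np 2 ./hello | count_hello 2`.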

Configuration for Running On Multiple Servers

Running HelloWorld.c on all Four Servers

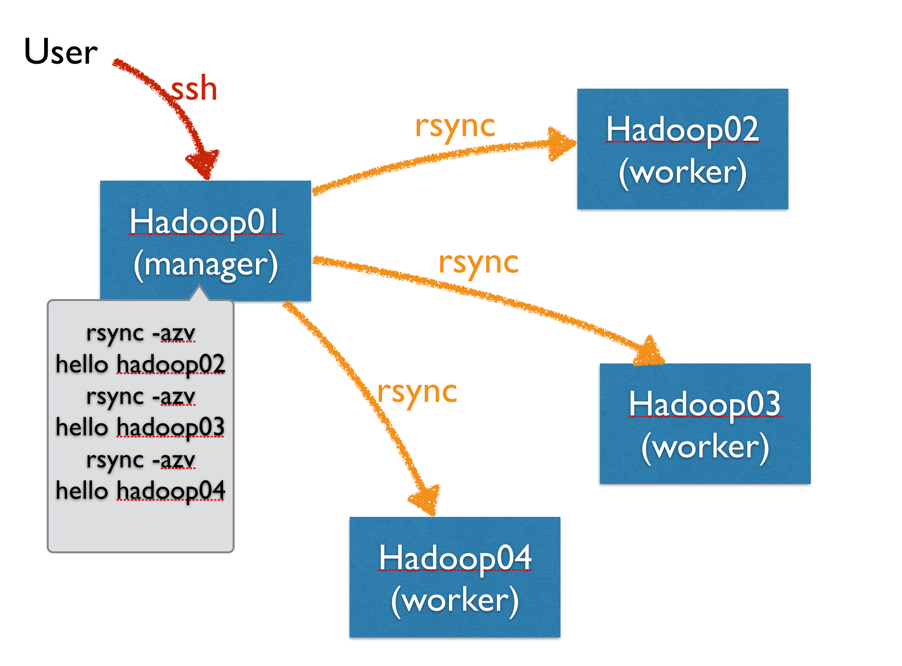

Rsync Your Files to Other Servers

Important Note: Every time you create a new program on Hadoop01, you will need to replicate it on the other 3 servers. This is something that is easily forgotten, so make a mental note to always copy your latest files to the other 3 servers.

- One powerful command to copy files remotely is rsync:

cd
cd mpi
rsync -azv * yourusername@hadoop02.dyndns.org:mpi/
rsync -azv * yourusername@hadoop03.dyndns.org:mpi/
rsync -azv * yourusername@hadoop04.dyndns.org:mpi/

- Or, if you like bash for-loops:

cd

cd mpi

for i in 2 3 4 ; do rsync -azv * yourusername@hadoop0${i}.dyndns.org:mpi/ ; done

- You are now ready to run hello on all four servers.
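Because forgetting to copy the latest files is an easy mistake, the rsync loop can be wrapped in a small function that you call before every multi-server run. This is a sketch under assumptions: `CLUSTER_USER`, `DRY_RUN`, `run_cmd`, and `sync_mpi_dir` are hypothetical names introduced here. With `DRY_RUN` left at 1 the script only prints the commands it would run. Unlike the `*` form above, the source `~/mpi/` (with a trailing slash) also copies hidden files in that directory.

```shell
#!/bin/bash
# Hypothetical placeholders (not part of the tutorial).
CLUSTER_USER="yourusername"
DRY_RUN="${DRY_RUN:-1}"    # 1 = only print the commands, 0 = run them

# Print the command in dry-run mode, otherwise execute it.
run_cmd() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Copy the contents of ~/mpi to the mpi directory on hadoop02 to hadoop04.
sync_mpi_dir() {
    local i
    for i in 2 3 4; do
        run_cmd rsync -azv "$HOME/mpi/" \
            "${CLUSTER_USER}@hadoop0${i}.dyndns.org:mpi/"
    done
}

sync_mpi_dir
```

Setting `DRY_RUN=0` in the environment makes the same script perform the copies.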

Running on All Four Servers

- Enter the following command:

mpirun -np 4 --hostfile hosts ./hello
Hello from Process 0 of 4 on Host Hadoop01
Hello from Process 3 of 4 on Host Hadoop04
Hello from Process 2 of 4 on Host Hadoop03
Hello from Process 1 of 4 on Host Hadoop02

- And try running 10 processes on 4 servers:

mpirun -np 10 --hostfile hosts ./hello
Hello from Process 3 of 10 on Host Hadoop04
Hello from Process 7 of 10 on Host Hadoop04
Hello from Process 1 of 10 on Host Hadoop02
Hello from Process 5 of 10 on Host Hadoop02
Hello from Process 9 of 10 on Host Hadoop02
Hello from Process 2 of 10 on Host Hadoop03
Hello from Process 6 of 10 on Host Hadoop03
Hello from Process 0 of 10 on Host Hadoop01
Hello from Process 8 of 10 on Host Hadoop01
Hello from Process 4 of 10 on Host Hadoop01
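In the 10-process sample output, the ranks are dealt to the four hosts in round-robin order: rank r lands on host number r mod 4 in the list Hadoop01 to Hadoop04. The sketch below only reproduces that pattern for illustration (`place_ranks` is a hypothetical name). It describes this particular output, not a guarantee: actual placement depends on the MPI implementation, the contents of the hosts file, and any mapping options given to mpirun, and the lines of a real run appear in no fixed order.

```shell
#!/bin/bash
# Host names as they appear in the sample output.
hosts=(Hadoop01 Hadoop02 Hadoop03 Hadoop04)

# place_ranks NP: print the round-robin host for each rank 0..NP-1.
place_ranks() {
    local np="$1"
    local rank
    for (( rank = 0; rank < np; rank++ )); do
        echo "Process $rank of $np -> ${hosts[rank % ${#hosts[@]}]}"
    done
}

place_ranks 10
```

With 10 processes on 4 hosts, this assigns three ranks each to Hadoop01 and Hadoop02 and two each to Hadoop03 and Hadoop04, matching the counts in the sample run.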