AWS Bootcamp, July 2015


Latest revision as of 16:04, 10 July 2015

--D. Thiebaut 11:07, 10 July 2015 (EDT)

AWS Summit 2015, New York City, NY, July 7-8, 2015

- I attended the two-day Amazon Web Services (AWS) Summit 2015 in New York City on July 7-8, 2015, and on the first day took a one-day bootcamp titled "Store, Manage, and Analyze Big Data in the Cloud":

> The Store, Manage, and Analyze Big Data in the Cloud Bootcamp provides a broad, hands-on introduction to data collection, data storage, and analysis using AWS analytics services plus third party tools. In this one-day bootcamp, we show you how to use cloud-based big data solutions and Amazon Elastic MapReduce (EMR), the AWS big data platform, to capture and process data. We also teach you how to work with Amazon Redshift, Amazon Kinesis, and Amazon Data Pipeline. Hands-on lab activities help you learn to work with services and leverage best practices in designing big data environments.

- This was a super fast overview of some of the technologies Amazon makes available for dealing with Big Data. The labs let participants work on AWS with Hive, Kinesis, and, mostly, Redshift. It would probably take me 3 weeks of class and lab time to cover the same material in my CSC352 seminar.

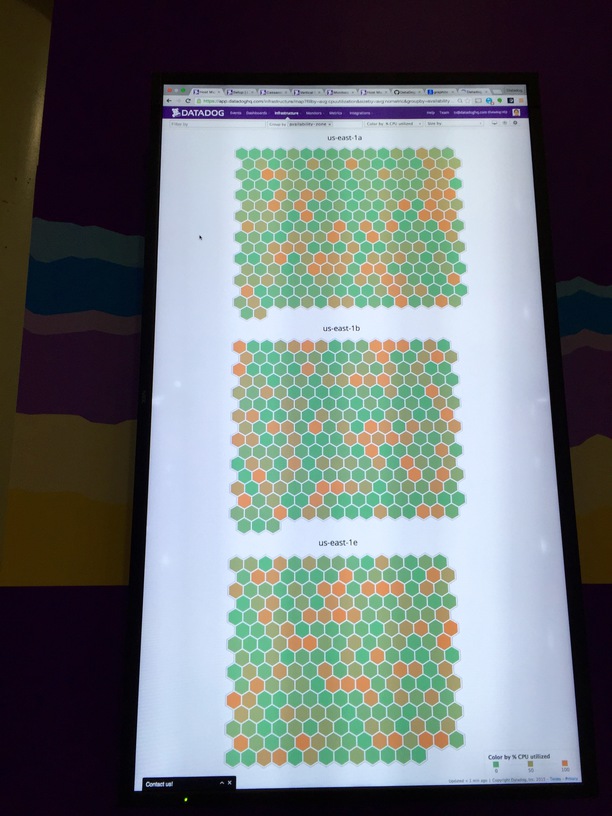

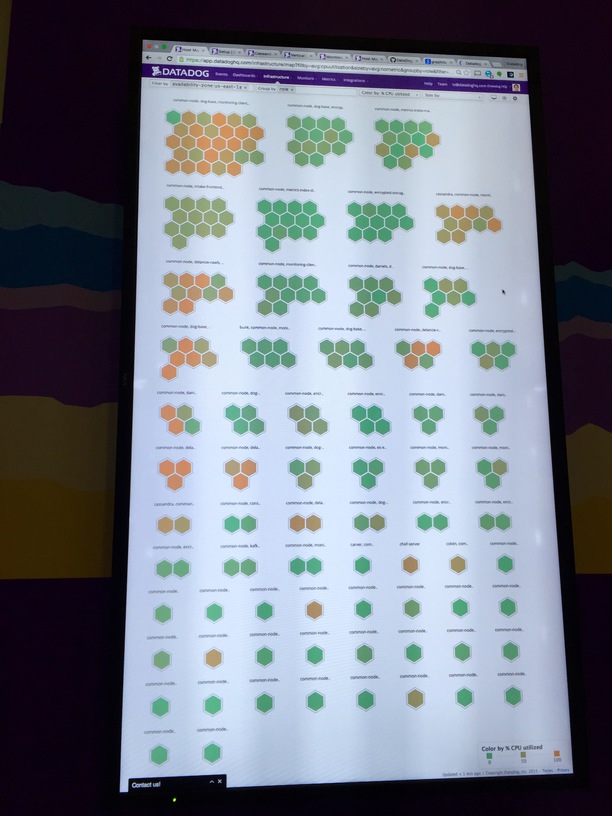

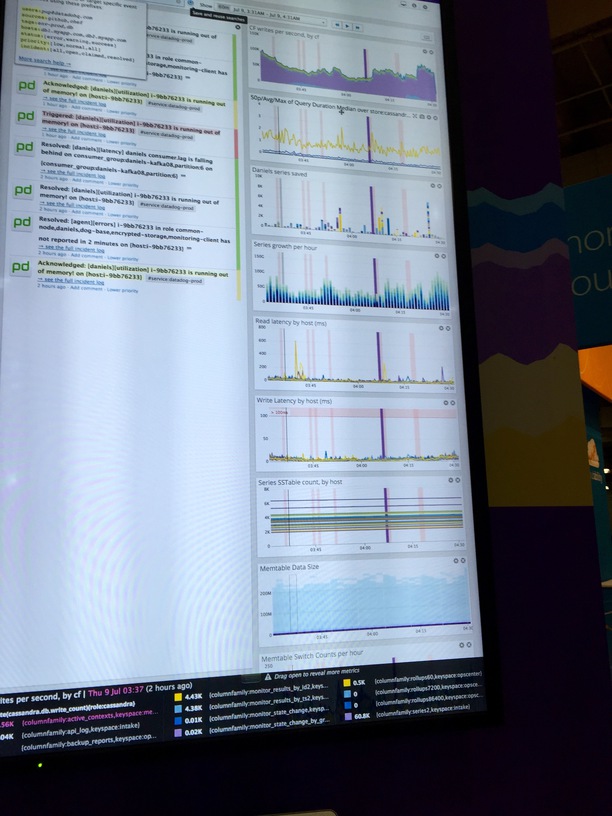

- On the second day, I quickly walked through the exhibit hall where vendors showcased services built on top of AWS. Keywords found on most of the banners: "Backup", "Agile", "Secure", "Analytics", "Make sense" (as in make sense of your data), and "Visualize." Most displays showed colorful timeline graphs: the variation in workloads and traffic patterns experienced by software apps running on AWS clusters. One display showed the console with a bash prompt in it...

- At 10 that morning, Werner Vogels, CTO of Amazon, gave a presentation on where Amazon is, what it does, and what is new. It was interesting to see how CTOs can be treated as rock stars. Here are some of the comments and ideas I jotted down:

- SQL is very much alive. There's an effort to make the cloud look like a big SQL repository. Why? Because many programmers know SQL and how to get information out of SQL databases, while few understand Hadoop (which is mostly out, by the way). So it's easier to present the cloud as an SQL space.

- "There's no hardware any longer," said Werner Vogels. You manage your hardware infrastructure through the Web, and it behaves like just another piece of software.

- Instead of tuning your cluster of computers to get the performance you want, "just add another node!" That seemed to be the message during the Bootcamp session. Instances (computers) cost just pennies an hour. If you need more power, just add more nodes; it's cheaper than paying somebody for long hours to figure out how to tune the system.

- Because MapReduce is inherently fault-tolerant, it's viable to use lots of spot instances. You bid a price per hour for a node, and as long as demand for CPUs is low enough that the spot price stays below your bid, you keep the node. When demand goes up, you may lose the node. If it is running a MapReduce-type computation, that's OK: the work that is lost is relaunched on another instance, somewhere else. Interesting concept. Also, spot prices can fluctuate widely in US regions but much less in other regions of the world, so spot instances there can be used fairly safely by bidding slightly above the average spot price in those regions.

- In some of the new AWS services, instead of specifying the hardware infrastructure, the system manager specifies the desired throughput for both input and output, and AWS figures out how many nodes and what configuration to use. Cool!

- AWS is making the cloud real-time by introducing cloud events: functions that are triggered when new data comes in, or when some result is generated in the cloud. This makes streaming and real-time applications on AWS possible.
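The spot-instance argument above rests on one property of MapReduce: a map task is deterministic and its output is only kept once the task completes, so a task whose node disappears can simply be run again elsewhere. The toy Python simulation below illustrates that idea under stated assumptions: `run_job` and `map_words` are hypothetical names, word counting stands in for a real job, and a fixed `reclaim_prob` stands in for the spot price rising above the bid. It is not how EMR or Hadoop actually schedules tasks.

```python
import random
from collections import Counter


def map_words(chunk):
    """Map step: count the words in one input chunk (deterministic)."""
    return Counter(chunk.split())


def run_job(chunks, reclaim_prob, seed=0):
    """Toy word-count job on spot nodes that may be reclaimed mid-task.

    reclaim_prob is an assumed, fixed chance that the node running a task is
    taken back before the task finishes. Returns (word counts, task attempts).
    """
    if not 0.0 <= reclaim_prob < 1.0:
        raise ValueError("reclaim_prob must be in [0, 1) or the job never finishes")
    rng = random.Random(seed)
    pending = list(range(len(chunks)))  # ids of map tasks still to run
    results = {}                        # task id -> output of a completed task
    attempts = 0
    while pending:
        task = pending.pop(0)
        attempts += 1
        if rng.random() < reclaim_prob:
            # Node reclaimed: partial output is discarded and the task is
            # queued again, to be picked up by some other node.
            pending.append(task)
            continue
        results[task] = map_words(chunks[task])
    # Reduce step: merge the per-chunk counts. A re-executed task produces the
    # same output a first run would have, so the total is unaffected.
    total = Counter()
    for counts in results.values():
        total.update(counts)
    return total, attempts


chunks = ["the cat sat", "the dog sat", "a cat ran"]
reliable, _ = run_job(chunks, reclaim_prob=0.0)
flaky, attempts = run_job(chunks, reclaim_prob=0.5, seed=1)
print(reliable == flaky, attempts)
```

Whatever the reclaim rate, the final counts are the same; only the number of attempts (and so the time spent) grows, which is the trade-off accepted when running on cheap spot capacity.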
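The throughput-based provisioning mentioned above can be illustrated with Amazon Kinesis, one of the services used in the bootcamp labs, where a stream's capacity is expressed in shards. The notes do not say which services Vogels meant, so Kinesis is only an example here. The sketch computes how many shards a given input/output target requires. The per-shard limits are hard-coded assumptions based on the Kinesis Data Streams documentation (1 MB/s or 1,000 records/s in, 2 MB/s out per shard) and should be checked against current AWS limits; `shards_needed` is a hypothetical helper, not an AWS API.

```python
import math

# Assumed per-shard limits for Amazon Kinesis Data Streams; check current AWS docs.
SHARD_IN_MB_PER_S = 1.0        # ingest bandwidth per shard
SHARD_IN_RECORDS_PER_S = 1000  # ingest record rate per shard
SHARD_OUT_MB_PER_S = 2.0       # read bandwidth per shard


def shards_needed(in_mb_per_s, in_records_per_s, out_mb_per_s):
    """Smallest shard count meeting every input and output throughput target."""
    return max(
        1,  # a stream always has at least one shard
        math.ceil(in_mb_per_s / SHARD_IN_MB_PER_S),
        math.ceil(in_records_per_s / SHARD_IN_RECORDS_PER_S),
        math.ceil(out_mb_per_s / SHARD_OUT_MB_PER_S),
    )


# 3.5 MB/s and 2,500 records/s in, 10 MB/s out: output is the binding limit.
print(shards_needed(3.5, 2500, 10))  # 5
```

The largest of the three ratios determines the answer, which is the kind of sizing decision the service takes over when you only state the throughput you need.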
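The cloud events described above match the model of AWS Lambda, where a function runs in response to an event such as a new object landing in S3. The talk notes do not name the service, so that mapping is an assumption. Below is a minimal sketch of such a function in Python, assuming the standard `handler(event, context)` entry point and the S3 event-notification layout (`Records[i]["s3"]["bucket"]["name"]` and `Records[i]["s3"]["object"]["key"]`). The bucket and key in the sample event are made up, and the processing step is left as a placeholder.

```python
from urllib.parse import unquote_plus


def handler(event, context):
    """Entry point called once per batch of S3 "object created" notifications."""
    new_objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in notifications (e.g. spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        new_objects.append(f"s3://{bucket}/{key}")
        # A real function would fetch and process the new object here.
    return {"processed": new_objects}


# Local test with a hand-built event; no AWS account needed.
sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "example-bucket"},
                "object": {"key": "logs/access+log.txt"},
            },
        }
    ]
}
print(handler(sample_event, None))
# {'processed': ['s3://example-bucket/logs/access log.txt']}
```

Because the function only reacts to incoming events, nothing runs (or is paid for) between uploads, which is what makes this style suitable for streaming and near-real-time pipelines.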

Pics

I also visited Tessa Taylor, '08, at her new job at the NYT, and Inky Lee, '12, who just finished her MFA at ASU.