Latest revision as of 09:41, 11 November 2015

--D. Thiebaut (talk) 22:39, 2 October 2015 (EDT)

Source

Stats

- 4668 lines

- 66025 words

- 382094 characters
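
The page does not say how these figures were obtained. As a minimal sketch, assuming they are plain line, whitespace-separated word, and character counts (in the spirit of `wc`), they could be computed as follows. The function name `text_stats` is made up for this illustration.

```python
def text_stats(text):
    """Return (lines, words, characters) for a string, in the spirit of `wc`."""
    lines = len(text.splitlines())   # a final line without a newline still counts
    words = len(text.split())        # words are whitespace-separated tokens
    chars = len(text)                # characters, not bytes
    return lines, words, chars


if __name__ == "__main__":
    sample = "They carried all they could bear,\nand then some.\n"
    print(text_stats(sample))
```

For the book itself, the same function would be called on `open("TheThingsTheyCarried.txt").read()`.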

Python Program for Word Frequencies

    # compute the top 100 most frequent words in a document
    import string

    doc = "TheThingsTheyCarried.txt"

    stopwords = """a about above across after afterwards again against all almost
    alone along already also although always am among amongst amoungst amount an
    and another any anyhow anyone anything anyway anywhere are around as at back be
    became because become becomes becoming been before beforehand behind being
    below beside besides between beyond bill both bottom but by call can cannot
    cant co computer con could couldnt cry de describe detail do done down due
    during each eg eight either eleven else elsewhere empty enough etc even ever
    every everyone everything everywhere except few fifteen fify fill find fire
    first five for former formerly forty found four from front full further get
    give go had has hasnt have he hence her here hereafter hereby herein hereupon
    hers herself him himself his how however hundred i ie if in inc indeed interest
    into is it its itself keep last latter latterly least less ltd made many may me
    meanwhile might mill mine more moreover most mostly move much must my myself
    name namely neither never nevertheless next nine no nobody none noone nor not
    nothing now nowhere of off often on once one only onto or other others
    otherwise our ours ourselves out over own part per perhaps please put rather re
    same see seem seemed seeming seems serious several she should show side since
    sincere six sixty so some somehow someone something sometime sometimes
    somewhere still such system take ten than that the their them themselves then
    thence there thereafter thereby therefore therein thereupon these they thick
    thin third this those though three through throughout thru thus to together
    too top toward towards twelve twenty two un under until up upon us very via was
    we well were what whatever when whence whenever where whereafter whereas
    whereby wherein whereupon wherever whether which while whither who whoever
    whole whom whose why will with within without would yet you your yours
    yourself yourselves dont got just did didnt im """

    def displayStops():
        # print the stopword list, wrapped at roughly 60 characters per line
        s = ""
        for a in stopwords.split():
            s = s+a+" "
            if len( s )>60:
                print(s)
                s = ""
        print( s )

    def main():
        global doc, stopwords
        text = open( doc, "r" ).read()
        stopwords = set( stopwords.lower().split() )

        # strip punctuation, so that "I'd" becomes "id" and "that's" becomes "thats"
        exclude = set(string.punctuation)
        text = ''.join(ch for ch in text if ch not in exclude)

        # count every word that is not a stopword
        dico = {}
        for word in text.lower().split():
            if word in stopwords:
                continue
            try:
                dico[word] += 1
            except KeyError:
                dico[word] = 1

        # sort (count, word) pairs from most to least frequent
        pairs = []
        for key in dico.keys():
            pairs.append( (dico[key], key) )
        pairs.sort()
        pairs.reverse()
        words = [k for (n,k) in pairs]
        print( "\n".join( words[0:100] ) )

    #displayStops()
    main()

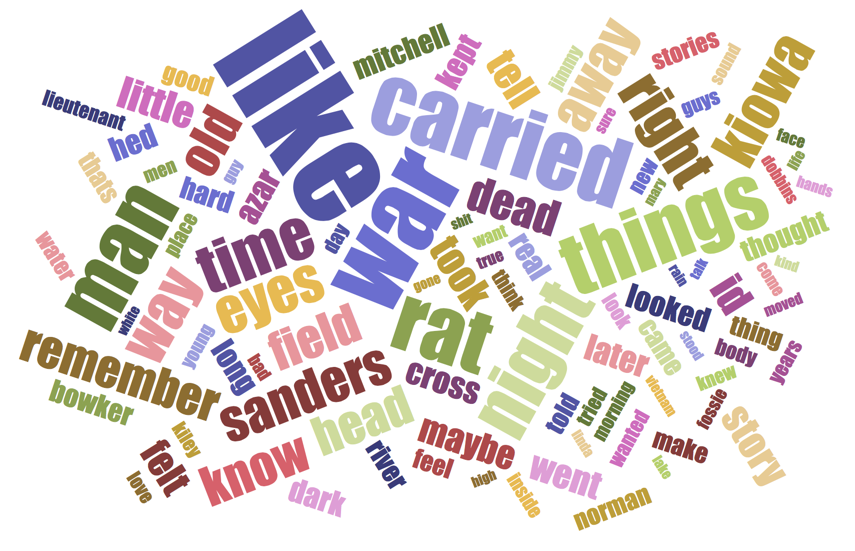

100 Most Frequent Words

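| Rank | Word | Count |
|---|---|---|
| 1 | said | 413 |
| 2 | like | 224 |
| 3 | war | 181 |
| 4 | carried | 156 |
| 5 | man | 148 |
| 6 | rat | 147 |
| 7 | things | 146 |
| 8 | night | 136 |
| 9 | time | 119 |
| 10 | right | 114 |
| 11 | way | 112 |
| 12 | kiowa | 109 |
| 13 | eyes | 109 |
| 14 | away | 105 |
| 15 | sanders | 104 |
| 16 | old | 101 |
| 17 | know | 98 |
| 18 | field | 97 |
| 19 | head | 96 |
| 20 | remember | 94 |
| 21 | say | 92 |
| 22 | dead | 90 |
| 23 | id | 86 |
| 24 | took | 85 |
| 25 | tell | 84 |
| 26 | story | 82 |
| 27 | little | 82 |
| 28 | felt | 80 |
| 29 | maybe | 77 |
| 30 | went | 76 |
| 31 | later | 73 |
| 32 | cross | 73 |
| 33 | azar | 71 |
| 34 | himself | 70 |
| 35 | kept | 69 |
| 36 | dark | 69 |
| 37 | looked | 68 |
| 38 | long | 68 |
| 39 | hed | 68 |
| 40 | real | 66 |
| 41 | mitchell | 66 |
| 42 | bowker | 65 |
| 43 | thought | 64 |
| 44 | hard | 64 |
| 45 | came | 63 |
| 46 | river | 62 |
| 47 | thing | 61 |
| 48 | norman | 61 |
| 49 | good | 61 |
| 50 | told | 60 |
| 51 | thats | 60 |
| 52 | make | 60 |
| 53 | feel | 60 |
| 54 | stories | 58 |
| 55 | water | 57 |
| 56 | new | 55 |
| 57 | body | 55 |
| 58 | years | 53 |
| 59 | wanted | 53 |
| 60 | think | 53 |
| 61 | place | 53 |
| 62 | look | 53 |
| 63 | lieutenant | 53 |
| 64 | day | 53 |
| 65 | tried | 52 |
| 66 | guys | 52 |
| 67 | young | 51 |
| 68 | morning | 51 |
| 69 | men | 51 |
| 70 | knew | 51 |
| 71 | jimmy | 51 |
| 72 | love | 50 |
| 73 | kiley | 50 |
| 74 | inside | 50 |
| 75 | want | 49 |
| 76 | sound | 48 |
| 77 | fossie | 48 |
| 78 | bad | 48 |
| 79 | myself | 47 |
| 80 | guy | 47 |
| 81 | dobbins | 47 |
| 82 | come | 47 |
| 83 | true | 46 |
| 84 | face | 46 |
| 85 | moved | 45 |
| 86 | sure | 44 |
| 87 | life | 44 |
| 88 | hands | 44 |
| 89 | white | 43 |
| 90 | rain | 43 |
| 91 | talk | 42 |
| 92 | stood | 42 |
| 93 | shit | 42 |
| 94 | mary | 42 |
| 95 | lake | 42 |
| 96 | kind | 42 |
| 97 | high | 42 |
| 98 | gone | 42 |
| 99 | vietnam | 41 |
| 100 | linda | 41 |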
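
The program shown earlier prints only the words, while the listing above also carries a rank and a count. The following is a sketch of one way to produce all three columns, using `collections.Counter` from the standard library rather than the hand-built dictionary. The function name `top_words` and the tiny stopword set are invented for the example, and this is not necessarily how the listing was generated.

```python
import string
from collections import Counter


def top_words(text, stopwords, n=100):
    """Return the n most frequent non-stopword words as (word, count) pairs."""
    # Strip ASCII punctuation first, as the program above does.
    cleaned = text.translate(str.maketrans("", "", string.punctuation))
    counts = Counter(w for w in cleaned.lower().split() if w not in stopwords)
    return counts.most_common(n)


if __name__ == "__main__":
    sample = "War, war, war. Over and over, he said."
    stops = {"and", "he"}   # hypothetical stopword set, for illustration only
    for rank, (word, count) in enumerate(top_words(sample, stops, n=3), start=1):
        print(rank, word, count)
```

Words with equal counts may come out in a different order than with the sort-and-reverse approach, which breaks ties in reverse alphabetical order.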

Word Cloud

100 Most Frequent Words

- Generated on jasondavies.com

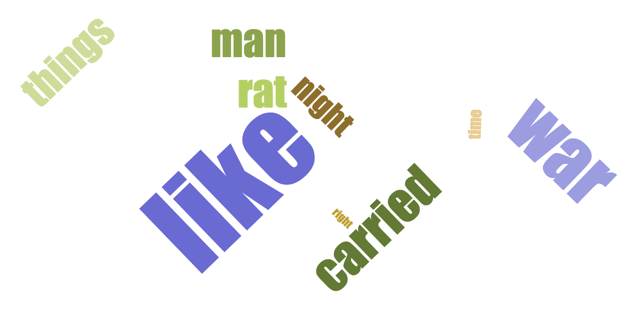

9 Most Frequent Words

Most Frequent 2-Grams

- List of 2-grams with a frequency count greater than or equal to 20.

65: mitchell - sanders

51: norman - bowker

49: rat - kiley

43: jimmy - cross

40: things - carried

34: mary - anne

32: henry - dobbins

31: rat - said

29: shook - head

28: dave - jensen

25: old - man

25: lieutenant - cross

24: war - story

24: ted - lavender

24: sanders - said

22: lieutenant - jimmy

21: years - old

21: curt - lemon

20: true - war

20: mark - fossie
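
The page does not include the code used for the 2-grams. Pairs such as "things - carried" and "shook - head" suggest that stopwords were dropped before adjacent words were paired, but that is an inference. Under that assumption, a minimal sketch could look like this (`frequent_bigrams` and `min_count` are names chosen for the example):

```python
import string
from collections import Counter


def frequent_bigrams(text, stopwords, min_count=20):
    """Count adjacent word pairs after dropping stopwords; keep the frequent ones."""
    cleaned = text.translate(str.maketrans("", "", string.punctuation))
    words = [w for w in cleaned.lower().split() if w not in stopwords]
    # zip(words, words[1:]) pairs every remaining word with its successor.
    counts = Counter(zip(words, words[1:]))
    return [(pair, n) for pair, n in counts.most_common() if n >= min_count]


if __name__ == "__main__":
    sample = "The things they carried. The things they carried were heavy."
    stops = {"the", "they", "were"}   # hypothetical stopword set
    for (first, second), n in frequent_bigrams(sample, stops, min_count=2):
        print(f"{n}: {first} - {second}")
```

Because punctuation is stripped before pairing, this sketch also pairs words across sentence boundaries.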

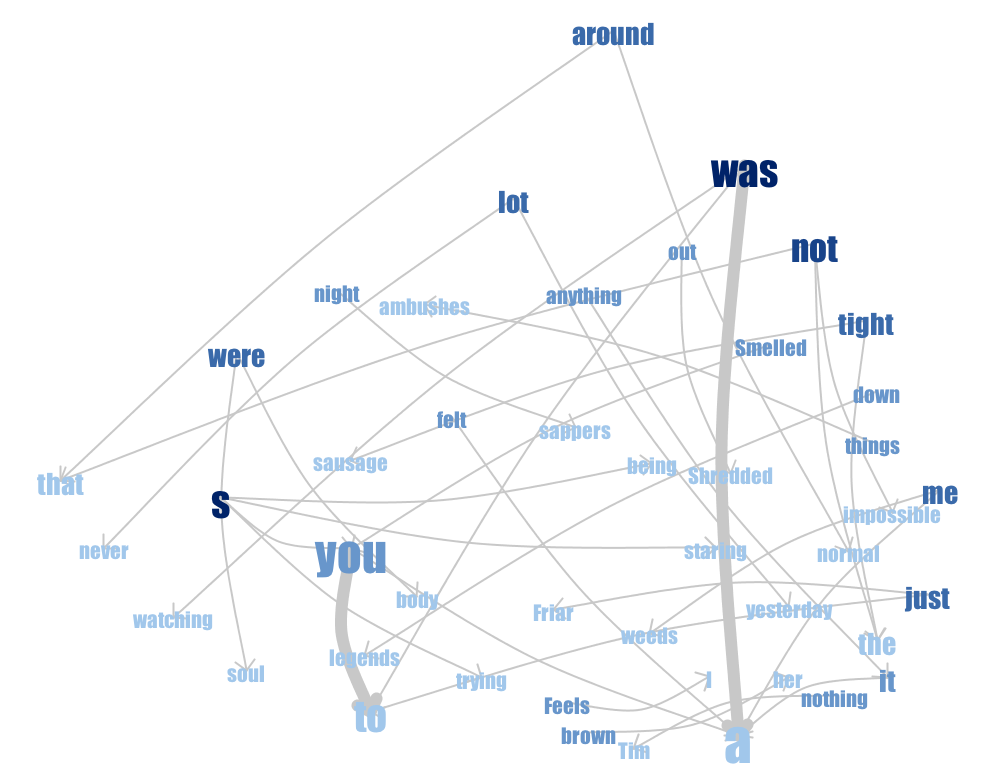

Phrase-Net

A phrase net is a graph showing the relationships between words. It used to be part of Many-Eyes, the repository of data visualization tools maintained by IBM, which is now defunct. I found a surviving copy of Phrase-Net at https://www.cg.tuwien.ac.at/courses/InfoVis/HallOfFame/2011/Gruppe08/Homepage/, downloaded the library, and applied it to The Things They Carried.
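
To give an idea of the data behind such a graph: a phrase net links two words whenever they appear in a fixed pattern such as "X and Y", and weights the link by how often the pattern occurs. The sketch below only extracts those weighted links for a single connector word. It is not the code of the library mentioned above, and `phrase_net_edges` is a hypothetical name.

```python
import re
from collections import Counter


def phrase_net_edges(text, connector="and"):
    """Count directed (left, right) word pairs joined by the connector word."""
    words = re.findall(r"[a-z']+", text.lower())
    edges = Counter()
    # Slide a three-word window over the text and keep the windows whose
    # middle word is the connector, e.g. "rain and mud".
    for left, middle, right in zip(words, words[1:], words[2:]):
        if middle == connector:
            edges[(left, right)] += 1
    return edges


if __name__ == "__main__":
    sample = "Rain and mud. Mud and rain and mud."
    for (left, right), weight in phrase_net_edges(sample).most_common():
        print(f"{left} -> {right}: {weight}")
```

Each counted pair becomes an edge of the graph, and the count can drive the edge thickness in the drawing.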

Additional Resources/Links

- [The Vietnam in Me](https://www.nytimes.com/books/98/09/20/specials/obrien-vietnam.html), by Tim O'Brien, NYT, Oct. 2, 1994.