CSC352 Homework 4

Latest revision as of 16:44, 23 April 2010

This homework is due 4/20/10, at midnight.

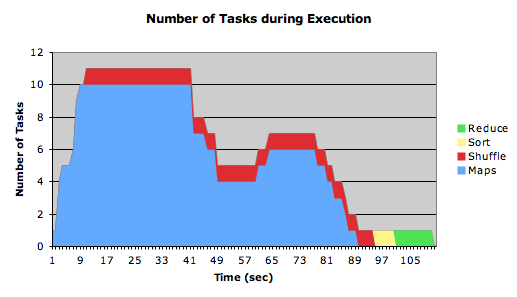

Problem #1: Timelines

Below is the timeline of the WordCount program running on 4 XML files of 180 MB each on a cluster of 6 single-core Linux servers (data available here). The files are in the HDFS of the Smith College Hadoop cluster, in wikipages/block.

- Question

- Explain the camel-back shape of the timeline, in particular the dip in the middle of the Map tasks. Note that this is not an artifact of unsynchronized clocks: the server clocks were synchronized before the experiment.

Submission

Submit your answer as a pdf from your 352b beowulf account, please.

submit hw4 hw4a.pdf

Problem #2: Counting Unique Categories

Write a Map/Reduce program that reports the number of unique categories and the number of times they appear in wikipages/00/00 on the HDFS. wikipages/00/00 contains 591 individual wikipages in xml.

Measure the execution time of your program and report it in the header of your program.
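One way to obtain the execution time is to wrap the job launch in a small timing script. The sketch below is only an illustration: the helper name time_command is hypothetical, and the command it runs here is a placeholder for whatever command launches your own job.

```python
import subprocess
import sys
import time


def time_command(argv):
    """Run a command and return (exit_code, elapsed_wall_clock_seconds)."""
    start = time.perf_counter()
    completed = subprocess.run(argv)
    elapsed = time.perf_counter() - start
    return completed.returncode, elapsed


if __name__ == "__main__":
    # Placeholder command: replace it with the command that launches your job.
    code, seconds = time_command([sys.executable, "-c", "pass"])
    print("exit code %d, elapsed %.2f s" % (code, seconds))
```

The measured value is wall-clock time, which includes job start-up and scheduling overhead as well as the Map and Reduce phases.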

- Optional and Extra Credit

- Answer the same question for the categories in wikipages/block/all_00.xml, which contains all the wikipages with IDs of the form x...x00xx. all_00.xml contains 117,617 individual wiki pages.

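You are free to write in Java or use streaming and program in Python or some other language of your choice. If you are using Python, check out Michael Noll's tutorial (http://www.michael-noll.com/wiki/Writing_An_Hadoop_MapReduce_Program_In_Python#Improved_Mapper_and_Reducer_code:_using_Python_iterators_and_generators) for a way to improve how the map and reduce functions read their input from stdin.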
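For a streaming solution in Python, the generator style can be sketched roughly as follows. This is a minimal illustration, not a reference solution: it assumes that categories appear in the page text as wiki markup of the form [[Category:Name]], and the function names map_lines, reduce_pairs, and parse_pairs are made up for this example.

```python
import re
import sys
from itertools import groupby
from operator import itemgetter

# Assumption: categories appear as wiki markup such as [[Category:Hadoop]]
# or [[Category:Hadoop|sort key]] inside the page text.
CATEGORY_RE = re.compile(r"\[\[Category:([^\]|]+)")


def map_lines(lines):
    """Yield (category, 1) for every category tag found in the input lines."""
    for line in lines:
        for name in CATEGORY_RE.findall(line):
            yield name.strip(), 1


def parse_pairs(lines):
    """Turn 'key<TAB>count' lines into (key, count) pairs."""
    for line in lines:
        key, _, count = line.rstrip("\n").rpartition("\t")
        yield key, int(count)


def reduce_pairs(pairs):
    """Sum the counts of consecutive pairs that share the same key.

    The reducer input is sorted by key, so equal keys arrive next to each
    other and groupby can collect them lazily, one group at a time.
    """
    for key, group in groupby(pairs, key=itemgetter(0)):
        yield key, sum(count for _, count in group)


# Only touch stdin when explicitly asked to act as the mapper or the reducer.
if __name__ == "__main__" and sys.argv[1:] in (["map"], ["reduce"]):
    if sys.argv[1] == "reduce":
        results = reduce_pairs(parse_pairs(sys.stdin))
    else:
        results = map_lines(sys.stdin)
    for key, count in results:
        print("%s\t%d" % (key, count))
```

Because every stage is a generator, neither the mapper nor the reducer holds its whole input in memory. The number of unique categories is the number of lines the reducer emits.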

- Question 1

- Report the execution time of your program(s) in the header.

- Question 2

- Even if you do not attempt the extra-credit option, think about the following and report your answer: could there be a problem with the exactness of the result when a MapReduce program processes the file all_00.xml? Reading Module 5 of the Yahoo Tutorial (http://developer.yahoo.com/hadoop/tutorial/module5.html) might help you answer the question. Report the answer in the header of your program(s).

- Note

- The 5 million Wikipedia files are available on the local disk of hadoop6. Unlike the other servers in our cluster, hadoop6 runs a single OS, Ubuntu, which occupies the whole local disk, so it offers the largest local storage of all the servers.

Submission

Submit your program(s) as above:

submit hw4 hw4b_yourprogram
submit hw4 hw4b_yourotherprogram

Problem #3: Counting Most Frequent Words

Write a Map/Reduce program that reports the five most frequent words in each page, and the number of times they occur in all the pages processed. The reported words should not include stop words.

You are free to write in Java or use streaming and program in Python or some other language of your choice.

You are free to report a list of counters, or a single counter for each word. For example, if Buck appears as one of the 5 most frequent words in 3 pages, and if its frequency is 10 in the first page, 20 in the second, and 30 in the third, then your result could be either

Buck: 10, 20, 30

or

Buck: 60

Use the format that will make your life easier in the final project.
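The two output formats can be sketched in Python as follows. This is an illustrative sketch only: the stop-word list is a tiny placeholder, words are lowercased and restricted to the letters a to z, and the helper names top_words, merge, as_list, and as_total are hypothetical.

```python
import re
from collections import Counter, defaultdict

# Assumption: a tiny illustrative stop-word list; a real run needs a fuller one.
STOP_WORDS = {"the", "a", "an", "of", "and", "in", "to", "is"}
WORD_RE = re.compile(r"[a-z]+")


def top_words(text, n=5):
    """Return the n most frequent non-stop-words of one page as (word, count)."""
    words = (w for w in WORD_RE.findall(text.lower()) if w not in STOP_WORDS)
    return Counter(words).most_common(n)


def merge(per_page_tops):
    """Collect, for each word, its counts in the pages where it ranked."""
    counts = defaultdict(list)
    for tops in per_page_tops:
        for word, count in tops:
            counts[word].append(count)
    return counts


def as_list(counts, word):
    """Format 'word: c1, c2, ...' (one counter per page)."""
    return "%s: %s" % (word, ", ".join(str(c) for c in counts[word]))


def as_total(counts, word):
    """Format 'word: total' (a single counter)."""
    return "%s: %d" % (word, sum(counts[word]))
```

Keeping the per-page list preserves more information, since the single total can always be computed from it later, whereas the reverse is not possible.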

Details

File Name

To find the most frequent words in a file, you will likely need to access the name of the input file from within the map function. The inverted-index solution in the Yahoo Tutorial, Module 4 (http://developer.yahoo.com/hadoop/tutorial/module4.html#solution) indicates that this can be done with this Java code:

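// reporter is the Reporter argument passed to map(); FileSplit is org.apache.hadoop.mapred.FileSplit.
FileSplit fileSplit = (FileSplit)reporter.getInputSplit();
String fileName = fileSplit.getPath().getName();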
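If you use streaming with Python instead, a comparable lookup might go through the environment. The sketch below rests on an assumption you should verify on the cluster: that Hadoop streaming exports job configuration values as environment variables with dots replaced by underscores, so that map.input.file shows up as map_input_file (or mapreduce_map_input_file on newer releases). The helper name input_file_name is hypothetical.

```python
import os


def input_file_name(environ=os.environ):
    """Return the base name of the current input file, or None if unknown.

    Assumption: the streaming framework exposes the input path in one of
    the environment variables below; check this on your own cluster.
    """
    for name in ("map_input_file", "mapreduce_map_input_file"):
        path = environ.get(name)
        if path:
            # Keep only the last path component, like getPath().getName().
            return path.rstrip("/").rsplit("/", 1)[-1]
    return None
```

Passing the environment as a parameter makes the function easy to try out locally with a plain dictionary before running it on the cluster.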

Parsing XML Files

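XML files can be parsed with a special input reader, as described in the section "How do I parse XML documents using streaming?" of the Apache Hadoop 0.19 documentation (http://hadoop.apache.org/common/docs/r0.19.2/streaming.html#How+do+I+parse+XML+documents+using+streaming%3F).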
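As a complement to the input reader, a Python mapper can also group its input lines into one record per page and parse each record. The following is a rough sketch under stated assumptions: the begin and end markers and the tag name text are placeholders for whatever actually delimits a page in the wikipages data, at most one record is assumed to start on a given line, and the helper names xml_records and page_text are made up for this example.

```python
import xml.etree.ElementTree as ET


def xml_records(lines, begin="<xml>", end="</xml>"):
    """Yield each chunk of text from a begin marker through its end marker.

    The begin and end strings are placeholders; use the tags that actually
    delimit one page in your data. Assumes at most one record per line.
    """
    buffer = None
    for line in lines:
        if buffer is None and begin in line:
            line = line[line.index(begin):]  # drop anything before the marker
            buffer = []
        if buffer is not None:
            buffer.append(line)
            if end in line:
                record = "".join(buffer)
                yield record[:record.index(end) + len(end)]
                buffer = None


def page_text(record, tag="text"):
    """Return the text of the first <tag> descendant in a record ('' if absent)."""
    element = ET.fromstring(record).find(".//" + tag)
    if element is None:
        return ""
    return element.text or ""
```

This approach only sees complete pages when a page is never cut across two input splits, which is one reason the dedicated input reader may be the safer choice for large files.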

Submission

Include a sample of the output of your program in its header, and submit it as follows:

submit hw4 hw4c_yourprogramname