CSC352 Homework 3


Revision as of 14:59, 9 March 2010


Programming the XGrid

The class decided on the contents of this homework and on its due date: March 30th.

Problem Statement

Process N wiki pages, and for each one

- keep track of the categories contained in the page

- find the 5 most frequent words (not including stop words)

- associate with each category the most frequent words that have been associated with it over the N pages processed

- output the result (or a sample of it)

- measure the execution time of the program

- write a summary of it as illustrated in the guidelines presented in class (3/9, 3/11).
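The per-page and per-category bookkeeping above can be sketched as follows. This is a minimal, single-machine sketch under stated assumptions, not a required design. It assumes each page has already been fetched (as in Lab 2) and reduced to a pair of (list of category names, plain text). The stop-word set shown is a tiny hypothetical placeholder for your own stop-word file. The function names `top_words`, `associate`, and `summarize` are illustrative only.

```python
import re
from collections import Counter, defaultdict

# Hypothetical placeholder: in the homework, load these from your stop-word file.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it",
              "for", "on", "as", "with", "was"}

def top_words(text, k=5, stop_words=STOP_WORDS):
    """Return the k most frequent non-stop words in text (lowercased)."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(w for w in words if w not in stop_words)
    return [w for w, _ in counts.most_common(k)]

def associate(pages, k=5):
    """pages: iterable of (categories, text) pairs.

    Returns {category: Counter}, where each Counter records how many times a
    word was among the top-k words of a page carrying that category."""
    assoc = defaultdict(Counter)
    for categories, text in pages:
        words = top_words(text, k)
        for cat in categories:
            assoc[cat].update(words)
    return assoc

def summarize(assoc, n=5):
    """Most frequently associated words for each category, keyed by category."""
    return {cat: [w for w, _ in c.most_common(n)]
            for cat, c in sorted(assoc.items())}

if __name__ == "__main__":
    # Two made-up pages, only to exercise the functions.
    pages = [
        (["Birds"], "The eagle is a large bird. The eagle hunts fish."),
        (["Birds", "Fish"], "Eagle and osprey both eat fish; fish swim."),
    ]
    for cat, words in summarize(associate(pages)).items():
        print(cat, words)
```

In an XGrid version, `top_words` is the part that would naturally run inside each task. The merging done by `associate` could happen either inside a task or on the client once the task results come back.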

Details

Wiki Pages

The details of how to obtain the IDs of wiki pages and how to fetch the pages are presented in XGrid Lab 2.

XGrid Submission

You are free to submit work to the XGrid either as asynchronous jobs (Lab 1) or as batch jobs (Lab 2). One approach may turn out to be better than the other, but having members of the class try several different approaches will be useful for the group as a whole. Note that batch processing is easier, since Lab 2 already shows how to process wiki pages.

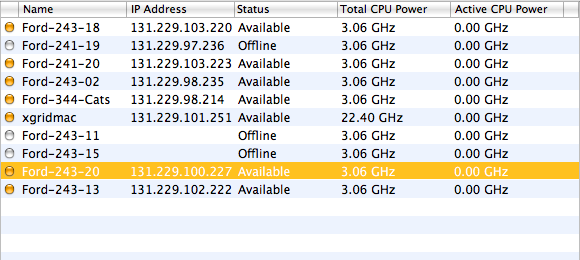

XGrid Controller

You will use the XgridMac controller for this homework, unless the XGrid in Bass becomes available early enough.

Performance Measure

Two performance measures are obvious candidates: the total execution time, and the number of wiki pages processed per unit of time (per second, or per minute if we have a slow implementation).
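Both measures can be taken from a single wall-clock timing. The sketch below is minimal and assumes a hypothetical callable `process` that stands in for whatever your program does with a list of page IDs. For an XGrid run, this would mean timing from job submission until all results have been retrieved, which is an assumption about where you place the timer.

```python
import time

def measure_throughput(process, page_ids):
    """Run process(page_ids) once and return (elapsed_seconds, pages_per_second).

    `process` is a hypothetical stand-in for the code that handles the pages
    (for example, submitting the XGrid jobs and waiting for their results)."""
    start = time.perf_counter()          # monotonic, high-resolution clock
    process(page_ids)
    elapsed = time.perf_counter() - start
    rate = len(page_ids) / elapsed if elapsed > 0 else float("inf")
    return elapsed, rate

if __name__ == "__main__":
    # A fake workload that just sleeps, to show the calling convention.
    elapsed, rate = measure_throughput(lambda ids: time.sleep(0.1), list(range(50)))
    print(f"{elapsed:.3f} s, {rate:.1f} pages/s, {rate * 60:.1f} pages/min")
```

Multiplying the per-second rate by 60 gives the per-minute figure if your implementation turns out to be slow.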

Your assignment is to compute the number of pages processed per unit of time as a function of the number of processors. The complication is that the number of available processors is not fixed, and we cannot easily control it (although we could always go to FH341 and turn some machines ON or OFF!). Sometimes 14 processors will be available, sometimes 16, sometimes 20...
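Because the processor count cannot be chosen, one option is to record it alongside every run and group the runs afterwards. The sketch below assumes you log one `(processors, pages, seconds)` record per experiment, noting the processor count however you determine it at run time. It then produces the table of pages per second versus processors that the report needs. The function names are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def throughput_by_processors(runs):
    """runs: iterable of (processors, pages, seconds) records, one per experiment.

    Returns a list of (processors, mean pages/second, number of runs),
    sorted by processor count."""
    grouped = defaultdict(list)
    for procs, pages, seconds in runs:
        grouped[procs].append(pages / seconds)
    return [(p, mean(rates), len(rates)) for p, rates in sorted(grouped.items())]

def print_table(rows):
    """Print the grouped results as a plain-text table."""
    print(f"{'processors':>10} {'pages/s':>10} {'runs':>5}")
    for procs, rate, n in rows:
        print(f"{procs:>10} {rate:>10.2f} {n:>5}")
```

Keeping the number of runs per row makes it visible when a processor count was observed only once, so that its average deserves less weight in the write-up.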

You will also need to figure out how to pick the right number of pages per block, that is, the number of pages each task processes in one swoop. For example, to process 1000 wiki pages, you could generate 100 tasks that run in parallel, where each task runs on 1 processor and parses 10 different pages. Or you could create 10 tasks processing 100 pages each. Make sure you explain why you pick a particular approach.
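One way to reason about the block size is to combine a partitioning step with a rough cost model. The sketch below is only a model, not a measurement. It assumes tasks run in "waves" of at most `processors` tasks at a time, and that each task pays a fixed overhead (scheduling, startup, data transfer) plus a constant cost per page. Both `overhead_per_task` and `seconds_per_page` are hypothetical parameters that you would have to estimate from your own runs.

```python
import math

def make_blocks(page_ids, pages_per_block):
    """Split page_ids into consecutive blocks; each block becomes one task."""
    return [page_ids[i:i + pages_per_block]
            for i in range(0, len(page_ids), pages_per_block)]

def estimated_time(n_pages, pages_per_block, processors,
                   overhead_per_task, seconds_per_page):
    """Crude wall-clock estimate under the 'waves' assumption.

    Every task is treated as a full block, so a shorter final block
    makes this a slight overestimate."""
    tasks = math.ceil(n_pages / pages_per_block)
    waves = math.ceil(tasks / processors)
    return waves * (overhead_per_task + pages_per_block * seconds_per_page)

if __name__ == "__main__":
    # Made-up parameters, only to compare the two options from the text.
    for block in (10, 100):
        print(block, estimated_time(1000, block, 16, 2.0, 0.5))
```

With 16 processors, 2 s of overhead per task and 0.5 s per page (all invented numbers), the model gives 49 s for 100 tasks of 10 pages and 52 s for 10 tasks of 100 pages. The 100-task option pays the overhead 100 times. The 10-task option leaves six processors idle. Which effect dominates depends on the real overhead and per-page cost you measure.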

Submission

Please submit a PDF with your explanations, table(s) and/or graph(s), as well as your programs, including everything needed to make them work (that includes files of stop words!).

submit hw3 yourfile1

submit hw3 yourfile2

submit hw3 etc...