CSC103 2017: Instructor's Notes

Revision as of 09:23, 14 September 2017

--D. Thiebaut (talk) 15:27, 7 September 2017 (EDT)

CSC103 How Computers Work--Class Notes

Preface

This book presents material that I teach regularly in a half-semester course titled How Computers Work, in the department of computer science at Smith College. This course is intended for a general audience, and not specifically for computer science majors. Therefore you do not need any specific background to approach the material presented here. Furthermore, the material is self-contained, and you do not need to take the class to understand the material.

The goal of the course is to make students literate about the basic operations of a modern computer, and to cover some of the concepts and issues that are assumed to be understood by the general population, in particular concepts one will find in newspaper articles, such as that of the von Neumann bottleneck, or Moore's Law.

Understanding how computers work first requires observing that they are the physical implementation of rules of mathematics. So in the first part of this book we introduce simple concepts of logic, and explain how the binary system (where we only have 0 and 1 as digits to express numbers) works. We then explain how electronic switches, such as transistors, can be used to implement simple logic circuits which we call logic gates. Remarkably, these logic gates are all that is needed to perform arithmetic operations, such as addition or subtraction, on binary numbers.

This brings us to a rough, though accurate, understanding of how simple hand-held calculators work. They are basically machines that translate decimal numbers into binary numbers, and carry these numbers along different paths through logic circuits to generate their sum, difference, or product.

At this point, we figure out that an important part of computers and computing has to do with codes. A code is just a system where some symbols are used to represent other symbols. The simplest code we introduce is the one we use to pass from the world of logic where everything is either true or false to the world of binary numbers where digits are either 1 or 0. In this case the code we use is to say that the value true can also be represented by 1, and false by 0. Codes are extremely important in the computer world, as everything at the lowest level is really based on 1s and 0s, but we organize the information through coding to represent extremely complex and sophisticated systems.

Our next step is to see how we can use 1s and 0s to represent actions instead of data. For example, we can use 1 to represent the sum of two numbers, and 0 to represent the subtraction of the second from the first. In this case, if we write 10 1 11, we could mean that we want to add 10 to 11. In turn, writing 11 0 10 would mean subtracting 10 from 11. That is the basis for our exploration of machine language and assembly language. This is probably the most challenging chapter of this book. It requires a methodical approach to learning the material, and close attention to detail. We use a simple computer simulator to write simple, yet complex, assembly language programs that are the basis of all computer programs written today. Your phone, tablet, or laptop all run applications (apps) that are large collections of assembly language instructions.

Because assembly language deals with operations that happen at the tiniest of levels in a computer, it is referred to as a low-level language. When engineers write apps for data phones, tablets or laptops, they use languages that allow them to deal with much more complex structures at once. These languages are called high-level languages. Learning how computers work also requires understanding how to control them using some of the tools routinely used by engineers. We introduce Processing as an example of one such language. Processing was created by Ben Fry and Casey Reas at the MIT Media Lab[1]. Their goal was to create a language for artists that would allow one to easily and quickly create artistic compositions, either as static images, music, or videos. The result is Processing; it provides an environment that is user-friendly and uses a simplified version of Java that artists can use to create sketches (Processing programs are called sketches). The Exhibition page of the Processing Web site presents often interesting and sometimes stunning sketches submitted by users.

Introduction

Current Computer Design is the Result of an Evolutionary Process

In this course we are going to look at the computer as a tool, as the result of technological experiments that have currently crystallized on a particular design, the von Neumann architecture, on a particular source of energy, electricity, on a particular fabrication technology, silicon transistors, and on a particular information representation, the binary system. Any of these could have been different, depending on many factors. In fact, in the next ten or twenty years, one or more of these fundamental parts that make up today's computers could change.

Steamboy, a steampunk Japanese animé by director Katsuhiro Ohtomo (who also directed Akira), is interesting for more than the story of a little boy who is searching for his father, a scientist who has discovered a secret method for controlling high-pressure steam. What is interesting is that the movie is science fiction taking place not in the future, but in the middle of the 19th century, in a world where steam power and steam machines are much more advanced than they actually were at that time. One can imagine that some events occurred and some discoveries were made in the world portrayed in the animated film, and that technology evolved in quite a different direction, bringing with it new machines, either steam-controlled tank-like vehicles, or ships, or flying machines.

For computers, we can make the same observation. The reason our laptops today are designed the way they are is really the result of happy accidents in some ways. The way computers are designed, for example, with one processor (more on multi-core processors later), a system of busses, and memory where both data and programs reside side-by-side, hasn't changed since John von Neumann wrote his (incomplete and never officially published) First Draft of a Report on the EDVAC[2] in June of 1945. One can argue that if von Neumann hadn't written this report, we may have followed somebody else's brilliant idea for putting together a machine working with electricity, where information is stored and operated on in binary form. Our laptops today could be using a different architecture, and programming them might be a totally different type of problem solving.

The same is true of silicon transistors powered by electricity. Silicon is the material of choice for electronic microprocessor circuits as well as semiconductor circuits we find in today's computers. Its appeal lies in its property of being able to either conduct or not conduct electricity, depending on a signal it receives which is also electrical. Silicon allows us to create electrical switches that are very fast, very small, and consume very little power. But just because we are very good at creating semiconductors in silicon does not mean that it is the only possible substrate. Researchers have shown[3] that complex computation could also be done using DNA, in vials. Think about it: no electricity there; just many vials with solutions containing DNA molecules, a huge number of them, that are induced to code all possible combinations of a particular sequence, such that one of the combinations is the solution to the problem to solve. DNA computing is a form of parallel computation where many different solutions are computed at the same time, in parallel, using DNA molecules[4]. One last example of computation that is not performed in silicon by traveling electrons can be found in optical computing. The idea behind this concept (we really do not have optical computers yet, just isolated experiments showing its potential) is that electrons are replaced with photons, which are faster than electrons but much harder to control.

So, in summary, we start to see that the medium in which computation takes place can vary, and does not have to be silicon. Indeed, there exist many examples of computational devices that do not use electronics in silicon and can perform quite complex computation. In consequence, we should also be ready to imagine that new computers in ten, twenty or thirty years will not use semiconductors made of silicon, and may not use electrons to carry information that is controlled by transistors. In fact, it is highly probable that they won't.

While the technology used in creating today's computer is the result of an evolution and choices driven by economic factors and scientific discoveries, among others, one thing we can be sure of is that whatever computing machine we devise and use to perform calculations, that machine will have to use rules of mathematics. It does not matter what technology we use to compute 2 + 2. The computer must follow strict rules and implement basic mathematical rules in the way it treats information.

You may think that Math may be necessary only for programs that, say, display a mathematical curve on the screen, or maintain a spreadsheet of numbers representing somebody's income tax return, but that Math is probably not involved in a video game where we control an avatar who moves in a virtual world. Or that the computer inside a modern data phone is probably not using laws of Mathematics for the great majority of what we do with it during the day. This couldn't be further from the truth. Figuring out where a tree should appear on the screen as our avatar is moving in its virtual space requires applying basic geometry in three dimensions: the tree is at a corner of a triangle formed by the tree, the avatar, and the eye of the virtual camera showing you the image of this virtual world. Our phone's ability to pinpoint its location as we're sitting in a café sipping on a bubble tea requires geometry again: a program deep in the phone figures out how far we are from various signal towers for which the phone knows the exact location, and uses triangulation techniques to find our place in relationship to them.

So computers, because they need to perform mathematical operations constantly, must know the rules of mathematics. Whatever they do, they must do it in a way that maintains mathematical integrity. They must also be consistent and predictable. 2 + 2 computed today should yield the same result tomorrow, independent of which computer we use. This is one reason we send mathematical equations onboard space probes that are sent to explore the universe outside our solar system. If there is intelligent life out there, and if it finds our probe, and if it looks inside, it will find math. And the math for this intelligent life will behave the same as math for us. Mathematics, its formulas, its rules, are universal.

But technological processes are not. So computers can be designed using very different technologies, but whatever form they take, they will follow the rules of math when performing computations.

In our present case, the major influence on the way our computers are built is the fact that we are using electricity as the source of power, and that we're using fast moving electrons to represent, or code information. Electrons are cheap. They are also very fast, moving at approximately 3/4 the speed of light in wires[5]. We know how to generate them cheaply (power source), how to control them easily (with switches), and how to transfer them (over electrical wires). These properties were the reason for the development of the first vacuum tube computer by Atanasoff in 1939[6].

The choice of using electricity has greatly influenced a fundamental way in which modern computers work. They all use the binary system at the lowest level. Because electricity can be turned ON or OFF with a switch, it was only logical that these two states would be used to represent information. ON and OFF. 1 and 0. True and False. But if we can represent only two different states, two different levels of information, can we represent numbers other than 0 or 1? Say 257? Can we also organize electrical circuitry that can perform the addition of two numbers? The answer is yes, using the binary numbering system.

Binary System

This section is an overview of the binary system. Better sources of information can be found on this subject, including this one from the University of Vermont.

To better understand the binary system, we'll refresh our memory about the way our decimal system works, figure out what rules we use to operate in decimal, and carry them over to binary.

First, we'll need to define a new term. The base of a system is the number of digits used in the system. The decimal system is base 10: we have 10 digits to write numbers with: 0, 1, 2, 3, 4, 5, 6, 7, 8, and 9.

In binary, the base is 2; we have only two digits to write numbers with: 0, and 1.

Counting in Decimal

Let's now count in decimal and go slowly, figuring out how we come up with the numbers.

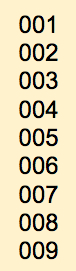

That's the first positive number. Instead of just 1 zero, we pad the number with leading zeros so that the number has 3 digits. This will help us understand better the rule we're so good at using that we have forgotten it!

Let's continue:

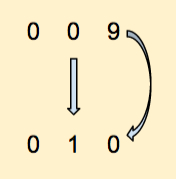

Ok, now an important point in the counting process. We have written all 10 digits in the right-most position of our number. Because we could increment this digit, we didn't have to change the digits on the left. Now that we have reached 9, we need to roll over the list of digits. We have to go from 9 back to 0. Because of this roll-over, we have to increment (that means adding 1) the digit that is directly to the left of the one rolling over.

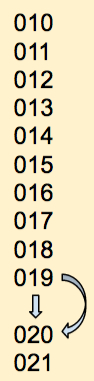

Let's continue:

Notice that after a while we reach 019. The right-most digit has to roll over again, which makes it become 0, and the digit to its left must be incremented by 1. From 1 it becomes 2, and we get 020.

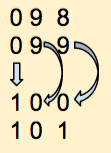

At some point, applying this rule, we reach 099. Let's just apply our simple rule: the right-most digit must roll over, so it becomes 0, and the digit to its left increments by 1. Because this second digit is also 9, it, too, must roll over and become 0. And because the second digit rolls over, the third digit increments by 1. We get 100. Does that make sense? We are so good at doing this by heart without thinking of the process we use that sometimes it is confusing to deconstruct such knowledge.

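So let's remember this simple rule; it applies to counting in all number systems, whether they are in base 10 or some other base: to count, we increment the right-most digit; when a digit has gone through all the available digits, it rolls over to 0 and we increment the digit directly to its left.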
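The roll-over rule used in the examples above can also be written as a short program. The following is only a minimal sketch in Python, not part of the course material: the function names `increment` and `count` are made up for illustration, and the sketch assumes a base of 10 or less so that every digit fits in a single character.

```python
def increment(digits, base):
    """Add 1 to a number stored as a list of digits, left-most digit first."""
    digits = list(digits)              # work on a copy
    position = len(digits) - 1         # start with the right-most digit
    while position >= 0:
        if digits[position] < base - 1:
            digits[position] += 1      # no roll-over: we are done
            return digits
        digits[position] = 0           # roll over to 0 ...
        position -= 1                  # ... and go increment the left neighbor
    return digits                      # every digit rolled over: back to all 0s


def count(how_many, base, width):
    """Return the first `how_many` numbers in `base`, padded to `width` digits."""
    digits = [0] * width
    numbers = []
    for _ in range(how_many):
        numbers.append("".join(str(d) for d in digits))
        digits = increment(digits, base)
    return numbers


print(count(12, 10, 3))   # decimal, 3 digits wide
print(count(6, 2, 5))     # binary, 5 digits wide
```

Only the `base` argument changes between the two calls; the rule itself stays the same, which is the point of the section.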

Counting in Binary

Let's now count in base 2, in binary. This time our "internal" table contains only 2 digits: 0, 1. So, whatever we do, we can only use 0 and 1 to write numbers. And the list of numbers we use and from which we'll "roll-over" is simply: 0, 1.

Good start! That's zero. It doesn't matter that we used 5 0s to write it. Leading 0s do not change the value of numbers, in any base whatsoever. We use them here because they help see the process better.

We apply the rule: modify the right-most digit and increment it. 0 becomes 1. No rolling over.

Good again! One more time: we increment the rightmost digit, but because we have reached the end of the available digits, we must roll over. The right-most digit becomes 0, and we increment its left neighbor:

Once more: increment the right-most digit: this time it doesn't roll-over, and we do not modify anything else.

One more time: increment the right-most digit: it rolls over and becomes 0. We have to increment its neighbor to the left, which also rolls over and becomes 0, and forces us to increment its neighbor to the left, the middle digit, which becomes 1:

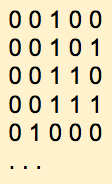

Let's pick up the pace:

And so on. That's basically it for counting in binary.

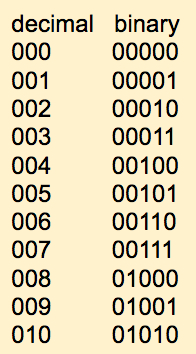

Let's put the numbers we've generated in decimal and in binary next to each other:
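A side-by-side listing of this kind can be produced with a few lines of Python. This is a hedged sketch rather than the table from the notes: the function name `decimal_binary_pairs`, the number of rows, and the 5-digit width are assumptions chosen to match the 5-digit binary numbers used above. It relies on Python's built-in `format` function with the `b` (binary) format code.

```python
def decimal_binary_pairs(limit, width=5):
    """Return (decimal, binary) pairs for 0 .. limit-1, binary padded with leading 0s."""
    return [(n, format(n, f"0{width}b")) for n in range(limit)]


print("decimal  binary")
for decimal, binary in decimal_binary_pairs(8):
    print(f"{decimal:>7}  {binary}")
```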

- Exercise

- How would we count in base 3? The answer is that we just need to modify our table of available digits to be 0, 1, 2, and apply the rule we developed above. Here is a start:

0000 0001 0002 0010 (2 rolls over to 0, therefore we increment its left neighbor by 1) ...

- Does that make sense? Continue and write all the numbers until you reach 1000, in base 3.

Evaluating Binary Numbers

What is the decimal equivalent of the binary number 11001? To find out, we return to the decimal system and see how we evaluate, or find the value represented by, a decimal number. For example:

1247

represents one thousand two hundred forty seven, and we are very good at imagining how large a quantity that is. For example, if you were told that you were to carry 1247 pennies in a bag, you get a sense of how heavy that bag would be.

The value of 1247 is 1 x 1000 + 2 x 100 + 4 x 10 + 7 x 1. The 1000, 100, 10, and 1 factors represent different powers of the base, 10. We can also rewrite it as

$$1247 = 1 \times 10^3 + 2 \times 10^2 + 4 \times 10^1 + 7 \times 10^0 = 1 \times 1000 + 2 \times 100 + 4 \times 10 + 7 \times 1 = 1247$$

So the rule here is that to find the value or weight of a number written in a particular base, we multiply each digit by the base raised to increasing powers, starting with the power 0 for the rightmost digit, and add up the results.

Let's try that for the binary number 11001. The base is 2 in this case, so the value is computed as:

$$11001 = 1 \times 2^4 + 1 \times 2^3 + 0 \times 2^2 + 0 \times 2^1 + 1 \times 2^0 = 1 \times 16 + 1 \times 8 + 0 \times 4 + 0 \times 2 + 1 \times 1 = 16 + 8 + 0 + 0 + 1 = 25 \text{ in decimal}$$
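The evaluation rule can be checked with a small Python sketch. The function name `value_of` is hypothetical, and the sketch assumes a base of 10 or less so that each character of the numeral is a single digit; it is meant only to mirror the hand computation, not to replace it.

```python
def value_of(numeral, base):
    """Evaluate a numeral (a string of digits) written in the given base."""
    total = 0
    power = 0                                  # the right-most digit has power 0
    for digit in reversed(numeral):            # walk from right to left
        total += int(digit) * base ** power    # digit times base raised to its power
        power += 1
    return total


print(value_of("11001", 2))    # the binary example from the text
print(value_of("1247", 10))    # the decimal example from the text
```

Python's built-in `int("11001", 2)` performs the same conversion; the loop is spelled out here only to make each step of the rule visible.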

References

- ↑ B. Fry, C. Reas, Processing, MIT Press, 2007.

- ↑ John von Neumann. First Draft of a Report on the EDVAC. IEEE Ann. Hist. Comput. 15, 4 (October 1993), 27-75.

- ↑ Adleman, L. M., "Molecular computation of solutions to combinatorial problems". Science 266 (5187): 1021–1024. 1994.

- ↑ Lewin, D. I., "DNA computing". Computing in Science & Engineering 4 (3): 5–8, 2002.

- ↑ Main, P., "When electrons go with the flow: Remove the obstacles that create electrical resistance, and you get ballistic electrons and a quantum surprise". New Scientist 1887: 30, 1993.

- ↑ Ralston, Anthony; Meek, Christopher, eds., Encyclopedia of Computer Science (second ed.), pp. 488–489, 1976.

- ↑ Algorithm, in Wikipedia, retrieved Oct. 3, 2012, from http://en.wikipedia.org/wiki/Algorithm

- ↑ Steve Lohr, Big Data, Speed, and the Future of Computing, New York Times Technology, Oct 31, 2011.

- ↑ Nate Silver, The Signal and the Noise: Why So Many Predictions Fail-but Some Don't, Penguin, 2012.

- ↑ Moore's Law, Intel Corporation, 2005. ftp://download.intel.com/museum/Moores_Law/Printed_Material/Moores_Law_2pg.pdf

- ↑ Sundar Iyer, Breaking through the embedded memory bottleneck, part 1, EE Times, July 2012, http://www.eetimes.com/document.asp?doc_id=1279790

- ↑ Samuel K. Moore, Multicore is bad news for supercomputers, IEEE Spectrum, Nov. 2008.

- ↑ David R. Henderson and Charles L. Hooper, Making Great Decisions in Business and Life, Chicago Park Press, 1st edition, March 12, 2007.

- ↑ Gordon E. Moore, Cramming More Components onto Integrated Circuits, Electronics, pp. 114–117, April 19, 1965.

- ↑ Tim Worstall, The End of Moore's Law, Forbes, Aug. 29, 2013, http://www.forbes.com/sites/timworstall/2013/08/29/darpa-chief-and-intel-fellow-moores-law-is-ending-soon/, retrieved 9/29/13.

- ↑ C. Reas, B. Fry, Processing: programming for the media arts, AI & Soc (2006) 20: 526–538 DOI 10.1007/s00146-006-0050-9, (http://hlt.media.mit.edu/dfe_readings/processing.pdf)