CSC352 Homework 4

Revision as of 08:56, 10 April 2010

This homework is due 4/20/10, at midnight.

Problem #1

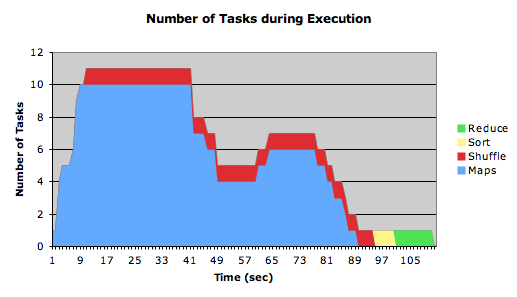

Below is the timeline of the WordCount program running on 4 xml files of 180 MB each on a cluster of 6 single-core Linux servers. (data available here). The files are in the HDFS on our cluster, in wikipages/block.
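As a rough aid for reading the timeline, the sketch below estimates how many map tasks such a job launches. It assumes a 64 MB HDFS block size (the Hadoop 0.20 default for dfs.block.size; our cluster's setting may differ) and one map task per input split, and it mimics the 1.1 "slop" rule FileInputFormat uses when cutting the last split. The number of map slots per server is left as a parameter because it depends on mapred.tasktracker.map.tasks.maximum in the cluster configuration.

```python
import math

# Assumption: 64 MB HDFS blocks, and split size == block size.
BLOCK_MB = 64
# FileInputFormat only cuts another full split while more than
# 1.1 x split size remains (its SPLIT_SLOP constant).
SPLIT_SLOP = 1.1


def split_sizes(file_mb, split_mb=BLOCK_MB):
    """Return the sizes (in MB) of the input splits for one file."""
    sizes = []
    remaining = file_mb
    while remaining / split_mb > SPLIT_SLOP:
        sizes.append(split_mb)
        remaining -= split_mb
    if remaining > 0:
        sizes.append(remaining)  # last, possibly shorter, split
    return sizes


def map_waves(num_splits, nodes, slots_per_node):
    """Rounds of map tasks needed if every node runs slots_per_node maps at once."""
    return math.ceil(num_splits / (nodes * slots_per_node))


files = [180, 180, 180, 180]  # the 4 XML files of 180 MB each
splits = [s for f in files for s in split_sizes(f)]
print(len(splits), split_sizes(180))                     # 12 [64, 64, 52]
print(map_waves(len(splits), nodes=6, slots_per_node=2))  # 1
print(map_waves(len(splits), nodes=6, slots_per_node=1))  # 2
```

Note that the last split of each file is shorter than the others, so under these assumptions not all map tasks carry the same amount of work.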

- Question

- Explain the camel-back shape of the timeline, in particular the dip in the middle of the Map tasks. Note that this is not an artifact of unsynchronized clocks: the server clocks were synchronized before the experiment.
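One way to turn per-task start and finish times (for example, as listed for each task in the JobTracker's web interface) into a curve like the one in the timeline is a simple sweep over the task intervals. The sketch below is only an illustration; the interval data in the usage line is hypothetical.

```python
def running_tasks(intervals):
    """Sweep over (start, end) task intervals and return the change points
    as (time, number_of_tasks_running_from_that_time_on)."""
    events = []
    for start, end in intervals:
        events.append((start, +1))  # a task begins
        events.append((end, -1))    # a task finishes
    events.sort()  # chronological order

    points = []
    running = 0
    for time, delta in events:
        running += delta
        # Merge events that share a timestamp so each time appears once,
        # with the count after all of its events are applied.
        if points and points[-1][0] == time:
            points[-1] = (time, running)
        else:
            points.append((time, running))
    return points


# Hypothetical tasks, times in seconds from job start.
print(running_tasks([(0, 10), (0, 12), (10, 20)]))
# [(0, 2), (10, 2), (12, 1), (20, 0)]
```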

Submission

Please submit your answer as a PDF from your 352b beowulf account:

submit hw4 hw4a.pdf

Problem #2

Write a Map/Reduce program that reports the number of unique categories, and the number of times each one appears, in wikipages/00/00 on HDFS. The directory wikipages/00/00 contains 591 individual wiki pages in XML.

Answer the same question for the categories in wikipages/block/all_00.xml, which contains all the wiki pages whose Ids have the form x...x00xx. The file all_00.xml contains 117,617 individual wiki pages.
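Reading "x...x00xx" as a decimal Id whose hundreds and thousands digits are both 0, preceded by at least one other digit (an interpretation, not stated explicitly above), membership in all_00.xml can be checked with a small regular expression. The function name `in_all_00` is hypothetical.

```python
import re

# Assumed reading of "x...x00xx": one or more digits, then "00",
# then exactly two final digits.
ID_PATTERN = re.compile(r"\d+00\d\d")


def in_all_00(page_id: int) -> bool:
    """True if the page Id has the form x...x00xx (under the assumption above)."""
    return ID_PATTERN.fullmatch(str(page_id)) is not None


print(in_all_00(10045), in_all_00(12300))  # True False
```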

- Note: all 5 million Wikipedia files are available on the local disk of hadoop6. Unlike the other servers in our cluster, hadoop6 runs a single OS, Ubuntu, which occupies the whole local disk, making it the server with the largest local storage.
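The map/shuffle/reduce logic for Problem #2 can be sketched locally as below. This is not the required submission: Hadoop's native API is Java, and this Python version only mirrors the structure of a Hadoop Streaming-style mapper and reducer, simulating the shuffle with a sort. It assumes categories appear in the page text as MediaWiki links such as [[Category:Physics]] or [[Category:Physics|sort key]].

```python
import re
from itertools import groupby

# Assumption: categories are written as [[Category:Name]] or
# [[Category:Name|sort key]] inside the page text.
CATEGORY_RE = re.compile(r"\[\[Category:([^\]|]+)(?:\|[^\]]*)?\]\]")


def mapper(lines):
    """Map step: emit (category, 1) for every category link in the input."""
    for line in lines:
        for name in CATEGORY_RE.findall(line):
            yield name.strip(), 1


def reducer(pairs):
    """Reduce step: pairs must arrive sorted by key (Hadoop's shuffle
    guarantees this); sum the counts for each category."""
    for category, group in groupby(pairs, key=lambda kv: kv[0]):
        yield category, sum(count for _, count in group)


def run_local(lines):
    """Simulate map -> shuffle/sort -> reduce in a single process."""
    shuffled = sorted(mapper(lines))
    return dict(reducer(shuffled))


pages = [
    "Intro text [[Category:Physics]]",
    "[[Category:Math|Algebra]] and [[Category:Physics]]",
]
counts = run_local(pages)
print(len(counts), counts)  # 2 {'Math': 1, 'Physics': 2}
```

The number of unique categories is the number of keys the reducer emits; in an actual Streaming job the mapper and reducer would instead read stdin and write tab-separated key/value lines.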