XGrid Tutorial Part 2: Processing Wikipedia Pages

| This tutorial is intended for running distributed programs on an 8-core MacPro that is set up as an XGrid Controller at Smith College. Most of the steps presented here should work on other Apple grids, except for the specific details of login and host addresses. Another document details how to access the 88-processor XGrid in the Science Center at Smith College. This document is the second part of a tutorial on the XGrid and follows the Monte Carlo tutorial. Make sure you go through the Monte Carlo tutorial first. |

Setup

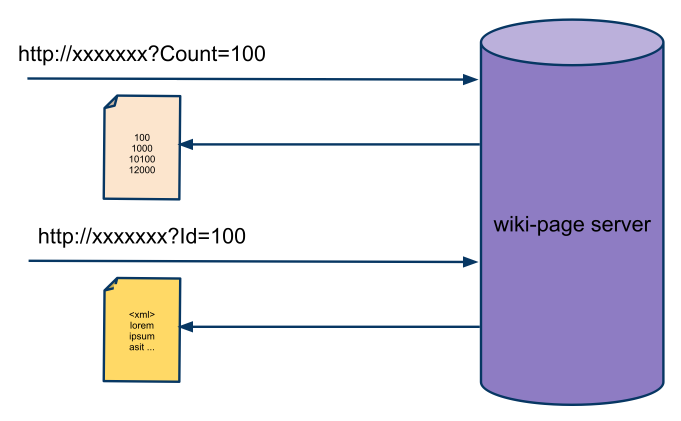

The main setup is shown in the figure above.

See the Project 2 page for more information on accessing the server of Wikipedia pages.

In summary, any computer can issue HTTP requests to the URL of the wiki-page server, appending ?Count=nnnn to get a list of nnnn Ids, or ?Id=nnnn to get the contents of the page with the given Id.
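As a minimal sketch of the two request forms described above, the following Python snippet builds the query URLs. The base address `http://wikiserver.example/` is a hypothetical placeholder (the real address is on the Project 2 page), and the function names are illustrative only.

```python
from urllib.parse import urlencode

# Hypothetical placeholder; the real server address is given on the Project 2 page.
BASE_URL = "http://wikiserver.example/"


def ids_url(count, base_url=BASE_URL):
    """Build the URL that asks the server for a list of `count` Ids."""
    return base_url + "?" + urlencode({"Count": count})


def page_url(page_id, base_url=BASE_URL):
    """Build the URL that asks the server for the page with the given Id."""
    return base_url + "?" + urlencode({"Id": page_id})


print(ids_url(10))
print(page_url(10000))
```

Fetching the result is then a matter of passing the URL to any HTTP client, for example `urllib.request.urlopen`.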

Goal of this Tutorial

Create a Pipeline

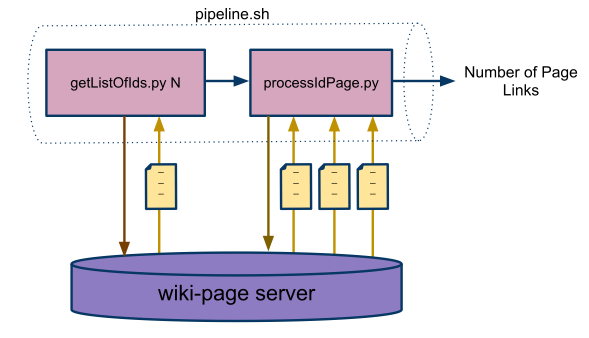

The goal is to create a pipeline of two programs (and possibly other Mac OS X commands) that will retrieve several pages from the wiki-page server and process them. The programs are used in a pipeline fashion, the output of one being fed to the input of the other. A third program, a bash script called pipeline.sh, organizes the pipeline structure.

The figure below illustrates the process.

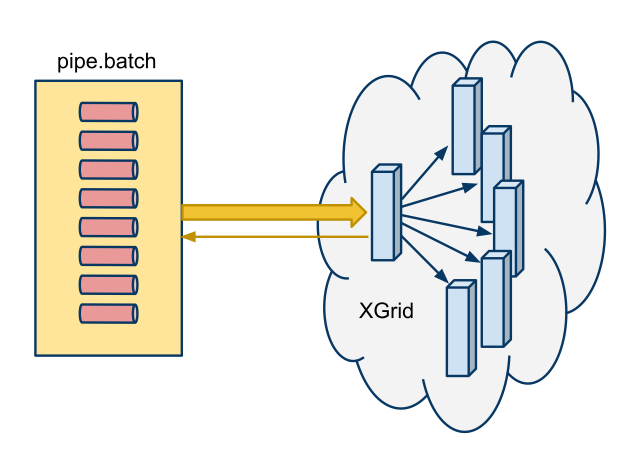

Submit a Batch of Jobs to the XGrid

Once the pipeline has been created and tested on the XGrid, a batch job is created. A batch job is a PLIST file that lists the files that need to be sent to the XGrid, the data files required, if any, and the command or commands to be executed.

The figure below illustrates the process. The XGrid controller is "clever" enough to break the batch job into individual processes that are sent to the different agents that are available.

The Basic Elements of the Pipeline

- getListOfIds.py

  - This program is given a number and fetches that many Ids from the wiki-page server.

- processIdPage.py

  - This program receives a list of Ids from the command line or from standard input, and fetches the wiki-pages corresponding to these Ids. There is no limitation on the number of Ids except the amount of buffering offered by the computer.

- pipeline.sh

  - This program is the glue that makes the previous two programs work in a pipeline fashion.
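To make the "glue" idea concrete, here is a rough Python sketch of connecting the standard output of one program to the standard input of another. It is not the tutorial's pipeline.sh, which is a bash script; the function name `run_pipeline` is hypothetical, and the demo uses two tiny stand-in commands so that it runs without the wiki-page server.

```python
import subprocess
import sys


def run_pipeline(producer_cmd, consumer_cmd):
    """Run producer_cmd and feed its standard output to consumer_cmd.

    Returns whatever the consumer writes to its standard output.
    """
    producer = subprocess.Popen(producer_cmd, stdout=subprocess.PIPE)
    consumer = subprocess.Popen(
        consumer_cmd, stdin=producer.stdout, stdout=subprocess.PIPE, text=True
    )
    # Close our copy of the pipe so that only the consumer holds the read end.
    producer.stdout.close()
    output, _ = consumer.communicate()
    producer.wait()
    return output


# Stand-in commands: the first prints two Ids, the second counts what it reads.
demo = run_pipeline(
    [sys.executable, "-c", "print('10000'); print('10050000')"],
    [sys.executable, "-c", "import sys; print(len(sys.stdin.read().split()))"],
)
print(demo.strip())
```

With the real programs in the current directory, a call such as `run_pipeline(["./getListOfIds.py", "-n", "10"], ["./processIdPage.py"])` would play the same role as a shell pipe between the two.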

Typical Usage

getListOfIds.py

- getListOfIds.py receives the number of Ids it should retrieve on the command line:

    ./getListOfIds.py -n 10
    10000
    10050000
    10070000
    10140000
    10200000
    10230000
    1030000
    10320000
    1040000
    10430000

- (Note: make sure the programs are executable, using the chmod +x command.)

- Because the command outputs one Id per line, we can easily "carve" the list with the head and tail Unix commands:

    ./getListOfIds.py -n 10 | tail -5
    10230000
    1030000
    10320000
    1040000
    10430000

    ./getListOfIds.py -n 10 | tail -5 | head -2
    10230000
    1030000
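The carving done by tail and head corresponds to simple list slicing. The sketch below reproduces it in Python on the ten Ids listed earlier; the helper names `head` and `tail` are illustrative and only mimic the basic behavior of the commands.

```python
def head(lines, n):
    """Return the first n items, like `head -n`."""
    return lines[:n]


def tail(lines, n):
    """Return the last n items, like `tail -n`."""
    return lines[-n:] if n > 0 else []


ids = ["10000", "10050000", "10070000", "10140000", "10200000",
       "10230000", "1030000", "10320000", "1040000", "10430000"]

last_five = tail(ids, 5)               # same as: | tail -5
first_two_of_those = head(last_five, 2)  # same as: | tail -5 | head -2

print(last_five)
print(first_two_of_those)
```

Carving the list this way is one simple way to hand different slices of the Ids to different jobs.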

processIdPage.py

- processIdPage.py accepts a list of Ids from the command line or from standard input:

    ./processIdPage.py 10000 10050000
    count:26

- Here 26 represents the number of links to other pages that exist in the two wiki pages with Ids 10000 and 10050000.

    ./getListOfIds.py -n 10 | ./processIdPage.py
    count:152
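The count reported above is a total over all the pages processed. The source of processIdPage.py is not shown here, so the following is only a sketch of how such a total could be computed, under the assumption that pages are in wiki markup and that a link is written as `[[Target]]` or `[[Target|label]]`. The function names and sample texts are made up for illustration.

```python
import re

# Assumption: a link looks like [[Target]] or [[Target|label]] in the page text.
LINK_PATTERN = re.compile(r"\[\[[^\[\]]+\]\]")


def count_links_in_text(text):
    """Count the wiki-style links found in one page."""
    return len(LINK_PATTERN.findall(text))


def report_count(pages):
    """Sum the link counts of all pages and format the total as 'count:N'."""
    total = sum(count_links_in_text(page) for page in pages)
    return "count:%d" % total


# Made-up sample pages, standing in for what the server would return.
sample_pages = [
    "The [[Moon]] orbits the [[Earth|planet Earth]]. See also [[Sun]].",
    "Compare [[A]] and [[B]].",
]
print(report_count(sample_pages))
```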

- If the list of Ids is stored in a file, processIdPage.py can easily get them as follows:

    cat Ids.txt | ./processIdPage.py
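A program that takes its Ids either from the command line or from standard input can follow a simple rule: use the arguments if there are any, otherwise read the input stream. The sketch below shows one possible way to do this; `read_ids` is a hypothetical helper, not code taken from processIdPage.py.

```python
import io
import sys


def read_ids(args, stream):
    """Return the Ids given as arguments, or read them from stream if there are none."""
    if args:
        return list(args)
    # split() accepts one Id per line as well as several Ids on one line.
    return stream.read().split()


# Ids given on the command line take precedence.
print(read_ids(["10000", "10050000"], io.StringIO("")))

# With no arguments, the Ids come from the stream (here a stand-in for a pipe).
print(read_ids([], io.StringIO("10230000\n1030000\n")))
```

In a real script the call would be `read_ids(sys.argv[1:], sys.stdin)`.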
