Setup Virtual Hadoop Cluster under Ubuntu with VirtualBox

--D. Thiebaut (talk) 14:35, 22 June 2013 (EDT)

Server Hardware/OS Configuration

- ASUS M5A97 R2.0 AM3+ AMD 970 SATA 6Gb/s USB 3.0 ATX AMD Motherboard ($84.99)

- Corsair Vengeance 16GB (2x8GB) DDR3 1600 MHz (PC3 12800) Desktop Memory (CMZ16GX3M2A1600C10) ($120.22)

- AMD FX-8150 8-Core Black Edition Processor Socket AM3+ FD8150FRGUBOX ($175)

- 2x 1 TB-RAID 1 disks

- used Ctrl-F during POST to enter the RAID setup.

- setup 2 1TB drives as RAID-1

- Ubuntu Desktop V13.04

Software Setup

Use the Ubuntu Software Manager GUI to install the following packages:

- VirtualBox

- emacs

- eclipse

- python 3.1

- ddclient (for dyndns.org, e.g. hadoop0.dyndns.org)

- OpenSSH server, for remote access

- setup keyboard ctrl/capslock swap

- setup 2 video displays

Setup Virtual Cluster

- Suggestion from Bo Feng @ http://b-feng.blogspot.com/2013/02/create-virtual-ubuntu-cluster-on.html:

- Create a virtual machine

- Change the network to bridge mode

- Install Ubuntu Server OS

- Setup the hostname to something like "node-01"

- Boot up the new virtual machine

- sudo rm -rf /etc/udev/rules.d/70-persistent-net.rules

- Clone the new virtual machine

- Initialize with a new MAC address

- Boot up the cloned virtual machine

- edit /etc/hostname

- change "node-01" to "node-02"

- edit /etc/hosts

- change "node-01" to "node-02"

- Reboot the cloned virtual machine

- Repeat the clone, rename, and reboot steps until there are enough nodes

- Note: the ip address of each node should start with "192.168." unless you don't have a router.
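The clone step can also be done from the command line instead of the VirtualBox GUI. The following is a minimal sketch, not part of Bo Feng's recipe: it only prints the `VBoxManage clonevm` commands for a range of nodes so they can be reviewed before being run. The function name `print_clone_commands` and the `node-NN` naming are assumptions based on the hostname example above.

```shell
#!/bin/sh
# Print (do not run) the VBoxManage commands that would clone a base VM
# into node-02 .. node-N. The function name and naming scheme are
# illustrative assumptions.
print_clone_commands() {
    base_vm=$1      # name of the VM to clone, e.g. node-01
    last_node=$2    # highest node number wanted, e.g. 3
    i=2
    while [ "$i" -le "$last_node" ]; do
        name=$(printf 'node-%02d' "$i")
        # clonevm reinitializes MAC addresses unless
        # "--options keepallmacs" is given.
        echo "VBoxManage clonevm $base_vm --name $name --register"
        i=$((i + 1))
    done
}

print_clone_commands node-01 3
```

Piping the output to `sh` would run the commands. The hostname and hosts edits inside each clone still have to be done after its first boot.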

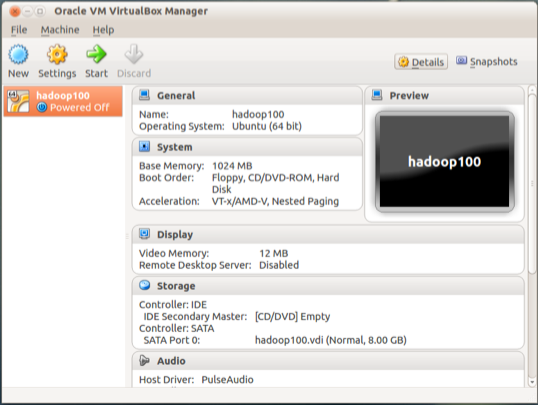

Create First Virtual Server

Based on http://serc.carleton.edu/csinparallel/vm_cluster_macalester.html

- On Ubuntu:

- open the file manager, go to Downloads, right click (needs a 3-button mouse) on ubuntu-13.04-server-amd64.iso and pick open with archive-mounter

- Start virtualBox

- Create a new virtual machine

- Linux

- Ubuntu 64 bits

- 2 GB virtual RAM

- Create virtual hard disk, VDI

- dynamically allocated

- hadoop100, 8.00 GB drive size

- install Ubuntu (pick language, keyboard, time zone, etc...)

- User same as for Hadoop0

- partition disk: Guided, LVM

- install security updates automatically

- OpenSSH server (leave all others unselected)

- Start Virtual Machine Hadoop100. It works!

- Back on hadoop0

- download vboxtools from SourceForge (this is to make the virtual machines start automatically at boot time...)

- abandoning vboxtools for right now, as it is really meant for setting up a Beowulf cluster...

- Back in VirtualBox. Stop hadoop100

- Go to its settings, Network, Enable Network Adapter, Bridged Adapter, eth0.

- Restart hadoop100

- Ping xgridmac to see if Internet access works:

ping xgridmac.dyndns.org

- run command ifconfig to get ip address. Note it.

- on hadoop100, change /etc/hostname to be hadoop100 (was Ubuntu100 for some reason)

- similarly, change /etc/hosts to have line with 127.0.1.1 hadoop100

- Back on xgrid

- go to dyndns.org and make sure hadoop100 exists

- ssh to the IP address noted above

- su

- apt-get install ddclient

- dthiebaut/dyn##etboite

- hadoop100.dyndns.org

- (if need to modify this, the info is in /etc/ddclient.conf)

- When hadoop100 is reachable from ssh (takes a few minutes)...

- On Hadoop100:

- Install java

    sudo apt-get install openjdk-6-jdk
    java -version
    java version "1.6.0_27"
    OpenJDK Runtime Environment (IcedTea6 1.12.5) (6b27-1.12.5-1ubuntu1)
    OpenJDK 64-Bit Server VM (build 20.0-b12, mixed mode)

- Disable ipv6 (not sure it's needed)...

sudo emacs -nw /etc/sysctl.conf

- add these lines at the end:

    #disable ipv6
    net.ipv6.conf.all.disable_ipv6 = 1
    net.ipv6.conf.default.disable_ipv6 = 1
    net.ipv6.conf.lo.disable_ipv6 = 1

- reboot
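The sysctl edit above can be scripted so that repeating it does not add duplicate lines. This is a minimal sketch under assumptions: the function name `disable_ipv6_in` is made up for illustration, and the demonstration writes to a scratch file rather than to /etc/sysctl.conf.

```shell
#!/bin/sh
# Append the IPv6-disabling settings to a sysctl file only if they are
# not already present. The function name and the file-as-argument design
# are illustrative assumptions.
disable_ipv6_in() {
    conf_file=$1
    for key in all default lo; do
        line="net.ipv6.conf.$key.disable_ipv6 = 1"
        # -q quiet, -x whole-line match, -F fixed string (no regex)
        grep -qxF "$line" "$conf_file" 2>/dev/null || echo "$line" >> "$conf_file"
    done
}

# Demonstration on a scratch file; on the real machine the target would
# be /etc/sysctl.conf, edited as root.
scratch=$(mktemp)
disable_ipv6_in "$scratch"
disable_ipv6_in "$scratch"   # second call adds nothing
cat "$scratch"
```

After the reboot, `cat /proc/sys/net/ipv6/conf/all/disable_ipv6` should print 1 if the setting took effect.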

- Install hadoop

    cd ~/Downloads
    wget http://www.bizdirusa.com/mirrors/apache/hadoop/common/stable/hadoop_1.1.2-1_x86_64.deb
    sudo dpkg -i hadoop_1.1.2-1_x86_64.deb

- follow directives in Michael Noll's tutorial for building a 1-node Hadoop cluster.

- create hduser user and hadoop group (already existed)

- create ssh password-less entry

- open /etc/hadoop/hadoop-env.sh and set JAVA_HOME

export JAVA_HOME=/usr/lib/jvm/java-6-openjdk-amd64/

- Download hadoop from hadoop repository

    sudo -i
    cd /usr/local
    wget http://www.poolsaboveground.com/apache/hadoop/common/stable/hadoop-1.1.2.tar.gz
    tar -xzf hadoop-1.1.2.tar.gz
    mv hadoop-1.1.2 hadoop
    chown -R hduser:hadoop hadoop

- setup user hduser

    su - hduser
    emacs .bashrc

- and add

    # HADOOP SETTINGS
    # Set Hadoop-related environment variables
    export HADOOP_HOME=/usr/local/hadoop
    # Set JAVA_HOME (we will also configure JAVA_HOME directly for Hadoop later on)
    #export JAVA_HOME=/usr/lib/jvm/java-6-sun
    export JAVA_HOME=/usr/lib/jvm/java-6-openjdk-amd64
    # Some convenient aliases and functions for running Hadoop-related commands
    unalias fs &> /dev/null
    alias fs="hadoop fs"
    unalias hls &> /dev/null
    alias hls="fs -ls"
    export PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin"
    export PATH="$PATH:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/usr/local/hadoop/bin:."

This setting of .bashrc should be done by any user wanting to use hadoop.

- Still as user hduser, edit /usr/local/hadoop/conf/hadoop-env.sh and set JAVA_HOME

export JAVA_HOME=/usr/lib/jvm/java-6-openjdk-amd64

- Edit /usr/local/hadoop/conf/core-site.xml and add

<property>

<name>hadoop.tmp.dir</name>

<value>/app/hadoop/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:54310</value>

<description>The name of the default file system. A URI whose

scheme and authority determine the FileSystem implementation. The

uri's scheme determines the config property (fs.SCHEME.impl) naming

the FileSystem implementation class. The uri's authority is used to

determine the host, port, etc. for a filesystem.</description>

</property>

- Edit /usr/local/hadoop/conf/mapred-site.xml and add

<property>

<name>mapred.job.tracker</name>

<value>localhost:54311</value>

<description>The host and port that the MapReduce job tracker runs

at. If "local", then jobs are run in-process as a single map

and reduce task.

</description>

</property>

- Edit /usr/local/hadoop/conf/hdfs-site.xml and add

<property>

<name>dfs.replication</name>

<value>1</value>

<description>Default block replication.

The actual number of replications can be specified when the file is created.

The default is used if replication is not specified in create time.

</description>

</property>
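The property blocks above go inside the single `<configuration>` element that each Hadoop 1.x configuration file contains. As a hedged illustration, the sketch below writes a complete core-site.xml with the two properties from this page (descriptions omitted for brevity). The `CONF_DIR` variable is an assumption introduced here; it defaults to a scratch directory so the script can be tried without touching /usr/local/hadoop/conf.

```shell
#!/bin/sh
# Write a complete core-site.xml into CONF_DIR. CONF_DIR is an
# illustrative variable: it defaults to a scratch directory here, and on
# the cluster it would be /usr/local/hadoop/conf.
CONF_DIR=${CONF_DIR:-$(mktemp -d)}

cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/app/hadoop/tmp</value>
  </property>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:54310</value>
  </property>
</configuration>
EOF

echo "wrote $CONF_DIR/core-site.xml"
```

The same wrapper applies to mapred-site.xml and hdfs-site.xml, each with its own property.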

- Format namenode

- As user hduser:

hadoop namenode -format

- create the hadoop.tmp.dir directory named in core-site.xml (do this before formatting the namenode, which writes under it)

    sudo mkdir -p /app/hadoop/tmp
    sudo chown hduser:hadoop /app/hadoop/tmp
    sudo chmod 750 /app/hadoop/tmp

- as root, create a symbolic link to hadoop-examples*.jar

    sudo ln -s /usr/local/hadoop/hadoop-examples-1.1.2.jar /usr/local/hadoop/hadoop-examples.jar

- Test as hduser

    cd
    mkdir input
    cp /usr/local/hadoop/conf/*.xml input
    start-all.sh
    hadoop dfs -copyFromLocal input/ input

    hadoop dfs -ls
    Found 1 item
    drwxr-xr-x - hduser supergroup 0 2013-07-24 11:16 /user/hduser/input

    hadoop jar /usr/local/hadoop/hadoop-examples.jar wordcount /user/hduser/input/ output
    13/07/24 11:24:46 INFO input.FileInputFormat: Total input paths to process : 7
    13/07/24 11:24:46 INFO util.NativeCodeLoader: Loaded the native-hadoop library
    13/07/24 11:24:46 WARN snappy.LoadSnappy: Snappy native library not loaded
    13/07/24 11:24:47 INFO mapred.JobClient: Running job: job_201307241105_0006
    13/07/24 11:24:48 INFO mapred.JobClient: map 0% reduce 0%
    13/07/24 11:24:57 INFO mapred.JobClient: map 28% reduce 0%
    13/07/24 11:25:04 INFO mapred.JobClient: map 42% reduce 0%
    . . .
    13/07/24 11:25:22 INFO mapred.JobClient: Physical memory (bytes) snapshot=1448595456
    13/07/24 11:25:22 INFO mapred.JobClient: Reduce output records=805
    13/07/24 11:25:22 INFO mapred.JobClient: Virtual memory (bytes) snapshot=5797994496
    13/07/24 11:25:22 INFO mapred.JobClient: Map output records=3114

    hadoop dfs -ls
    Found 2 items
    drwxr-xr-x - hduser supergroup 0 2013-07-24 11:16 /user/hduser/input
    drwxr-xr-x - hduser supergroup 0 2013-07-24 11:29 /user/hduser/output

    hadoop dfs -ls output
    Found 3 items
    -rw-r--r-- 1 hduser supergroup 0 2013-07-24 11:29 /user/hduser/output/_SUCCESS
    drwxr-xr-x - hduser supergroup 0 2013-07-24 11:28 /user/hduser/output/_logs
    -rw-r--r-- 1 hduser supergroup 15477 2013-07-24 11:28 /user/hduser/output/part-r-00000

    hadoop dfs -cat output/part-r-00000
    "*" 11
    "_HOST" 1
    "alice,bob 11
    "local" 2
    'HTTP/' 1
    (-1). 2
    (1/4 1
    (maximum-system-jobs 2
    *not* 1
    , 2
    ...
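One way to sanity-check the wordcount result is to count the same tokens locally and compare a few lines. The sketch below is an approximation, not the Hadoop implementation: it assumes the example job splits its input on whitespace and that the single output file is sorted by key in byte order, which is consistent with the listing above. The function name `local_wordcount` is made up for illustration.

```shell
#!/bin/sh
# Count whitespace-separated tokens on stdin and print "token<TAB>count",
# sorted in byte order. This only approximates what the Hadoop example
# does; the function name is an illustrative assumption.
local_wordcount() {
    awk '{ for (i = 1; i <= NF; i++) count[$i]++ }
         END { for (w in count) printf "%s\t%d\n", w, count[w] }' |
    LC_ALL=C sort
}

# Small demonstration with inline input
printf 'to be or not\nto be\n' | local_wordcount
```

On the test machine, something like `cat ~/input/*.xml | local_wordcount | head` could be compared with the first lines of `hadoop dfs -cat output/part-r-00000`.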

Add New User who will run Hadoop Jobs

- taken from http://amalgjose.wordpress.com/2013/02/09/setting-up-multiple-users-in-hadoop-clusters/

- add new user to Ubuntu

    sudo useradd -d /home/mickey -m mickey
    sudo passwd mickey

- login as hduser

    hadoop fs -chmod -R 1777 /app/hadoop/tmp/mapred/
    chmod 777 /app/hadoop/tmp
    hadoop fs -mkdir /user/mickey/
    hadoop fs -chown -R mickey:mickey /user/mickey/

- login as mickey

- change shell to bash

chsh

- setup .bashrc and add these lines at the end:

    # HADOOP SETTINGS
    # Set Hadoop-related environment variables
    #export HADOOP_HOME=/usr/local/hadoop
    # Set JAVA_HOME (we will also configure JAVA_HOME directly for Hadoop later on)
    #export JAVA_HOME=/usr/lib/jvm/java-6-sun
    export JAVA_HOME=/usr/lib/jvm/java-6-openjdk-amd64
    # Some convenient aliases and functions for running Hadoop-related commands
    unalias fs &> /dev/null
    alias fs="hadoop fs"
    unalias hls &> /dev/null
    alias hls="fs -ls"
    export PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin"
    export PATH="$PATH:/bin:/usr/games:/usr/local/games:/usr/local/hadoop/bin:."

- log out and back in as mickey
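Since every Hadoop user needs the same .bashrc additions, the edit could be scripted. The following is a reduced sketch under assumptions: the function name `add_hadoop_settings` and the marker comment are made up for illustration, only a subset of the settings above is written, and the demonstration uses a scratch file rather than a real ~/.bashrc.

```shell
#!/bin/sh
# Append a minimal Hadoop block to a user's .bashrc unless it is already
# there. The function name and marker text are illustrative; the paths
# are the ones used on this page.
add_hadoop_settings() {
    rc_file=$1
    marker='# HADOOP SETTINGS'
    if grep -qF "$marker" "$rc_file" 2>/dev/null; then
        return 0    # already configured, nothing to do
    fi
    cat >> "$rc_file" <<'EOF'
# HADOOP SETTINGS
export JAVA_HOME=/usr/lib/jvm/java-6-openjdk-amd64
export PATH="$PATH:/usr/local/hadoop/bin"
alias fs="hadoop fs"
alias hls="fs -ls"
EOF
}

# Demonstration on a scratch file instead of a real ~/.bashrc
rc=$(mktemp)
add_hadoop_settings "$rc"
add_hadoop_settings "$rc"    # no duplicate block is added
grep -c 'HADOOP SETTINGS' "$rc"
```

For a real account the call would be something like `add_hadoop_settings /home/mickey/.bashrc`, run as that user.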

Clone Server

- On Hadoop0, VirtualBox Manager

- Use hadoop100 as Master for cloning

- on hadoop100, remove /etc/udev/rules.d/70-persistent-net.rules

sudo rm -f /etc/udev/rules.d/70-persistent-net.rules

- Not doing this will prevent the network interface from being brought up

- right click on hadoop100 in VBox manager and pick Clone

- pick new name (e.g. hadoop101)

- check ON "Reinitialize the MAC address of all network cards"

- pick Linked clone

- Clone away! (quick, 1/2 a second or so)

- right click on new machine, pick settings

- System: select 2GB RAM

- Processor: 1CPU

- Network: bridged adapter

- start the virtual machine

- it will complain that it is not finding the network

- do this:

ifconfig -a

- Notice the first Ethernet adapter id. It should be "eth?"

- edit the file /etc/network/interfaces. Change the adapter (twice) from "eth0" to the adapter id found in the previous step

- Save the file

- Reboot the virtual appliance

- edit /etc/ddclient.conf on virtual machine. Set it to new host defined with dyndns.org

- edit /etc/hostname and put the name of the new virtual machine there

- edit /etc/hosts and put name of new machine after 127.0.1.1

127.0.1.1 hadoop101
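The two renaming edits above are the same for every clone, so they can be wrapped in a small helper. This is a sketch under assumptions: the function name `set_host_name` is made up, and the file paths are passed as arguments so the helper can be tried on scratch copies. On a clone they would be /etc/hostname and /etc/hosts, and the helper would have to run as root.

```shell
#!/bin/sh
# Set a new host name in a hostname file and on the 127.0.1.1 line of a
# hosts file. The function name and the paths-as-arguments design are
# illustrative assumptions.
set_host_name() {
    new=$1; hostname_file=$2; hosts_file=$3
    echo "$new" > "$hostname_file"
    tmp=$(mktemp)
    # Rewrite only the 127.0.1.1 entry, copy every other line unchanged.
    awk -v new="$new" '
        $1 == "127.0.1.1" { print "127.0.1.1 " new; next }
        { print }
    ' "$hosts_file" > "$tmp" && cat "$tmp" > "$hosts_file"
    rm -f "$tmp"
}

# Demonstration on scratch copies of the two files
dir=$(mktemp -d)
echo hadoop100 > "$dir/hostname"
printf '127.0.0.1 localhost\n127.0.1.1 hadoop100\n' > "$dir/hosts"
set_host_name hadoop101 "$dir/hostname" "$dir/hosts"
cat "$dir/hostname" "$dir/hosts"
```

A reboot is still needed afterwards for the new name to take effect everywhere.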

Test Hadoop on Hadoop0 Node

- Download hadoop-1.1.2.tar.gz and install according to Michael G. Noll's excellent instructions.

- Everything works.

- In the process, had to create hduser user and hadoop group.