Hadoop Tutorial 1.1 -- Generating Task Timelines

--D. Thiebaut 15:59, 18 April 2010 (UTC)

Updated --D. Thiebaut (talk) 07:56, 13 April 2017 (EDT)


This Hadoop tutorial shows how to generate Task Timelines similar to the ones generated by Yahoo in their report on the TeraSort experiment. See the page on Hadoop/MapReduce tutorials for additional tutorials.

Introduction

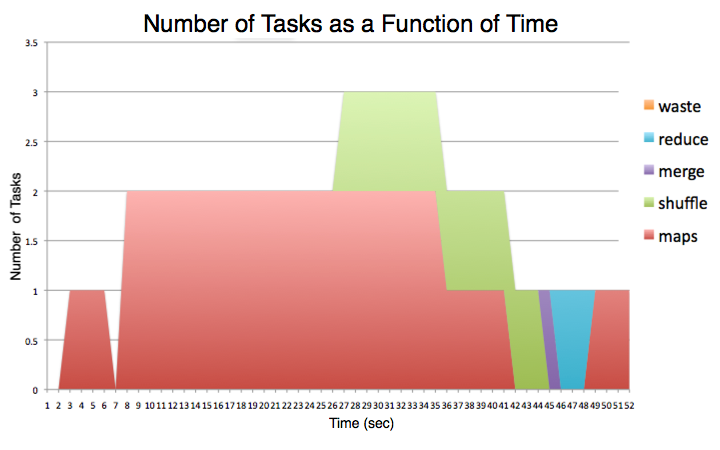

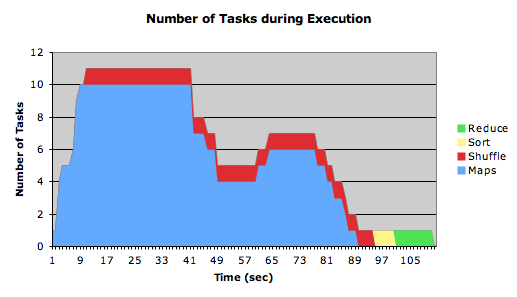

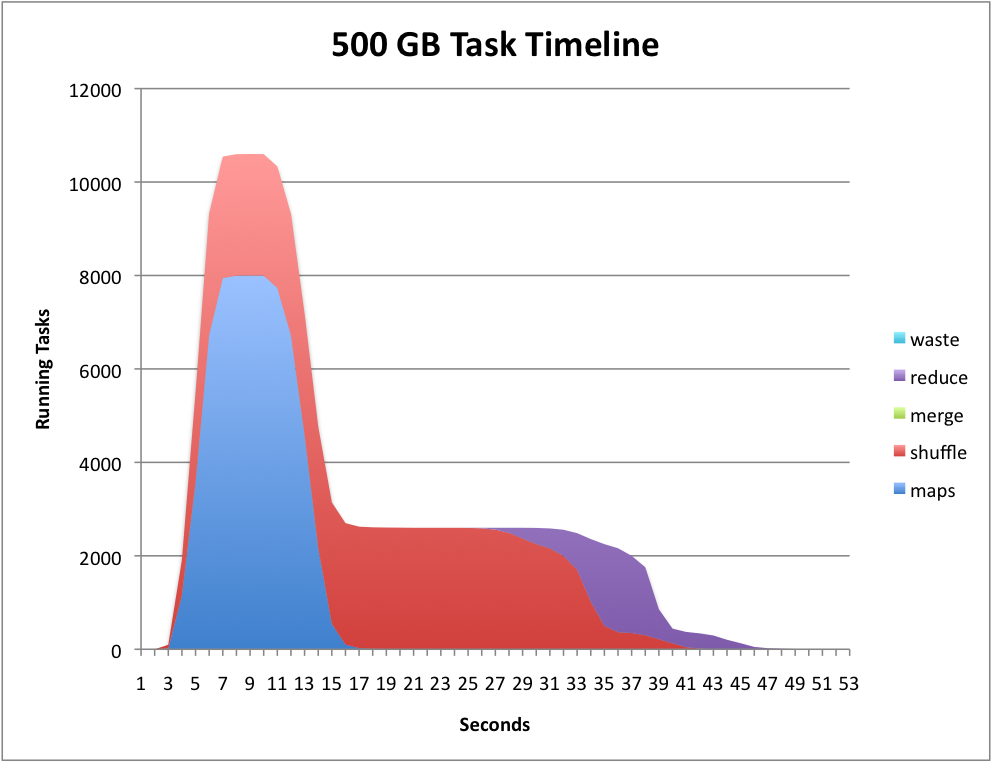

In May 2009 Yahoo announced it could sort a petabyte of data in 16.25 hours and a terabyte of data in 62 seconds using Hadoop, running on 3658 processors in the first case and 1460 in the second [1]. Their report illustrates the progress of the computation with timelines that plot the number of map, shuffle, sort, and reduce tasks as a function of time, an example of which is shown here.

The graph is generated by a script that parses one of the many logs Hadoop generates when a job is running. The script was written by one of the authors of the Yahoo report cited above. Its original name is job_history_summary.py, and it is available from here. We have renamed it generateTimeLine.py.
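To make the idea concrete, here is a minimal Python sketch of how a timeline can be derived from a job history log. It is not the Yahoo script. It assumes a Hadoop 0.20-style history file in which each line starts with a record type (such as MapAttempt) followed by KEY="value" pairs, and in which START_TIME and FINISH_TIME are given in milliseconds. The sample lines and the helper names (parse_record, map_intervals, running_per_second) are made up for illustration.

```python
import re

# Assumption: attributes appear as KEY="value" pairs on each history line.
PAIR = re.compile(r'(\w+)="([^"]*)"')

def parse_record(line):
    """Split one history line into (record_type, {key: value})."""
    record_type, _, rest = line.partition(" ")
    return record_type, dict(PAIR.findall(rest))

def map_intervals(lines):
    """Return (start, end) in whole seconds for each completed map attempt."""
    start, end = {}, {}
    for line in lines:
        kind, attrs = parse_record(line.strip())
        if kind != "MapAttempt":
            continue
        attempt = attrs.get("TASK_ATTEMPT_ID")
        # Assumption: timestamps are in milliseconds.
        if "START_TIME" in attrs:
            start[attempt] = int(attrs["START_TIME"]) // 1000
        if "FINISH_TIME" in attrs:
            end[attempt] = int(attrs["FINISH_TIME"]) // 1000
    return [(start[a], end[a]) for a in start if a in end]

def running_per_second(intervals):
    """Count the tasks running in each second, starting at the first start time.

    A task with interval (s, e) is counted for seconds s up to e - 1.
    """
    if not intervals:
        return []
    t0 = min(s for s, _ in intervals)
    t_end = max(e for _, e in intervals)
    counts = [0] * (t_end - t0 + 1)
    for s, e in intervals:
        for t in range(s, e):
            counts[t - t0] += 1
    return counts

# Made-up sample records, only for illustration.
SAMPLE = [
    'MapAttempt TASK_TYPE="MAP" TASK_ATTEMPT_ID="attempt_1" START_TIME="1000" .',
    'MapAttempt TASK_TYPE="MAP" TASK_ATTEMPT_ID="attempt_2" START_TIME="2000" .',
    'MapAttempt TASK_TYPE="MAP" TASK_ATTEMPT_ID="attempt_1" FINISH_TIME="4000" .',
    'MapAttempt TASK_TYPE="MAP" TASK_ATTEMPT_ID="attempt_2" FINISH_TIME="5000" .',
]

if __name__ == "__main__":
    print(running_per_second(map_intervals(SAMPLE)))
```

The same counting step would apply to the shuffle, sort, and reduce phases, given the start and end times of each phase.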

Generating the Log

- First run the WordCount program on a couple of books, on an AWS cluster of 1 or 2 servers. We'll use the WordCount program from the tutorial on creating a Hadoop cluster with StarCluster on Amazon AWS.

- Run the word count program against an input directory where you have one or more text files containing large documents (books from Gutenberg.org, for example).

sgeadmin@master:~$ hadoop jar /usr/lib/hadoop-0.20/hadoop-examples.jar wordcount books output2

- When the program finishes, find the log of the job you just ran in the _logs/history directory of the output folder. If you are the only user at the moment, it will be the last job in the list. You will need to replace the n characters (nnnnnnnnnnnnn) with the actual numbers that appear in the file name. These numbers are different every time you run a Hadoop job.

sgeadmin@master:~$ hadoop dfs -ls output2/_logs/history
Found 2 items
-rw-r--r-- 3 sgeadmin supergroup 14717 2017-04-13 11:49 /user/sgeadmin/output2/_logs/history/job_nnnnnnnnnn_nnnn_nnnnnnnnnnnnn_sgeadmin_word+count
-rw-r--r-- 3 sgeadmin supergroup 49000 2017-04-13 11:49 /user/sgeadmin/output2/_logs/history/master_nnnnnnnnnnnnnn_job_nnnnnnnnnn_nnnn_conf.xml

- Get the "job" file

sgeadmin@master:~$ hadoop dfs -copyToLocal output2/_logs/history/job_201704131133_0002_1492084161004_sgeadmin_word+count .

- Take a look at it with less:

sgeadmin@master:~$ less job_201704131133_0002_1492084161004_sgeadmin_word+count

- Note that it contains a wealth of information that is fairly cryptic and not necessarily easy to decipher.

- To parse this file, we'll use a utility from Yahoo! and we'll call it generateTimeLine.py

sgeadmin@master:~$ cat > generateTimeLine.py

- and copy and paste the script whose code is available here, then press Ctrl-D to end the input and save the file.

- Make the script executable:

sgeadmin@master:~$ chmod +x generateTimeLine.py

- Feed the log file above to the script:

sgeadmin@master:~$ cat job_nnnnnnnnnn_nnnn_nnnnnnnnnnnnn_sgeadmin_word+count | ./generateTimeLine.py

time maps shuffle merge reduce
0 1 0 0 0
1 1 0 0 0
2 3 0 0 0
3 4 0 0 0
4 4 0 0 0
5 5 0 0 0
...

- Copy and paste the output of the script into your favorite spreadsheet software or graphing package (R, for example), with each field in its own column.

- Generate an Area graph from these columns. The graph shows how the number of map, shuffle, merge, and reduce tasks evolves over time.

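As an alternative to a spreadsheet, the graphing step can be sketched in Python. The sketch below assumes the output of generateTimeLine.py is whitespace-separated text with a header row, as in the sample above, and that matplotlib is installed. It draws a stacked area chart, which is one possible reading of "Area graph". The function names and the output file name timeline.png are illustrative choices, not part of the original script.

```python
def read_timeline(text):
    """Parse whitespace-separated timeline text into {column_name: [ints]}."""
    rows = [line.split() for line in text.splitlines() if line.strip()]
    header, data = rows[0], rows[1:]
    columns = {name: [] for name in header}
    for row in data:
        if len(row) != len(header):
            continue  # skip incomplete rows, such as a trailing "..."
        for name, value in zip(header, row):
            columns[name].append(int(value))
    return columns

def plot_timeline(columns, out_file="timeline.png"):
    """Save a stacked area chart of every column except 'time'."""
    import matplotlib
    matplotlib.use("Agg")  # render to a file, no display needed
    import matplotlib.pyplot as plt

    series = [name for name in columns if name != "time"]
    plt.stackplot(columns["time"], [columns[n] for n in series], labels=series)
    plt.xlabel("time")
    plt.ylabel("number of tasks")
    plt.legend(loc="upper right")
    plt.savefig(out_file)

# The first rows of the sample output shown above.
SAMPLE_OUTPUT = """time maps shuffle merge reduce
0 1 0 0 0
1 1 0 0 0
2 3 0 0 0
3 4 0 0 0
4 4 0 0 0
5 5 0 0 0
...
"""

if __name__ == "__main__":
    timeline = read_timeline(SAMPLE_OUTPUT)
    print(timeline["maps"])
    # plot_timeline(timeline)  # uncomment if matplotlib is available
```

To use it on real data, redirect the output of the script into a text file and pass the contents of that file to read_timeline.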

Multiple Number of Reduce Tasks

By default, a job uses 1 reduce task. To use more, pass the -r switch when starting your Hadoop job:

hadoop jar /home/hadoop/352/dft/wordcount_countBuck/wordcount.jar org.myorg.WordCount -r 2 dft1 dft1-output


Another Example of timelines

This one shows the timeline of WordCount running on 4 text files (xml) of 180 MB each on a cluster of 6 single-core Linux servers. (data available here)

- Question

- Explain the dip in the middle of the Map tasks.

If you get strange results

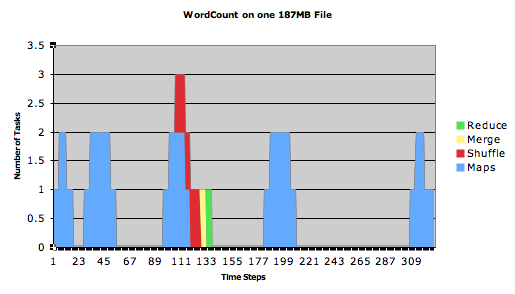

If you get timelines that look strange, like this one:

- it might be that the servers in your cluster are not time-synchronized. This shouldn't happen on AWS, but it could happen on a local Hadoop cluster that you have set up yourself. In that case, run the following command on all the Hadoop nodes to synchronize them.

sudo ntpdate ntp.ubuntu.com

References

- [1] Owen O'Malley and Arun Murthy, Hadoop Sorts a Petabyte in 16.25 Hours and a Terabyte in 62 Seconds, http://developer.yahoo.net/blogs, May 2009.