Hadoop Tutorial 1.1 -- Generating Task Timelines


This Hadoop tutorial shows how to generate task timelines similar to the ones Yahoo published in its report on the TeraSort experiment. See the page on Hadoop/MapReduce tutorials for additional tutorials.

Introduction

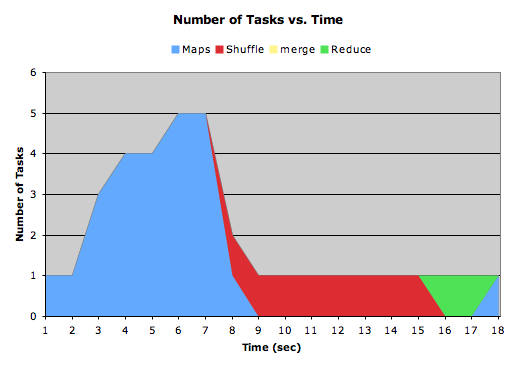

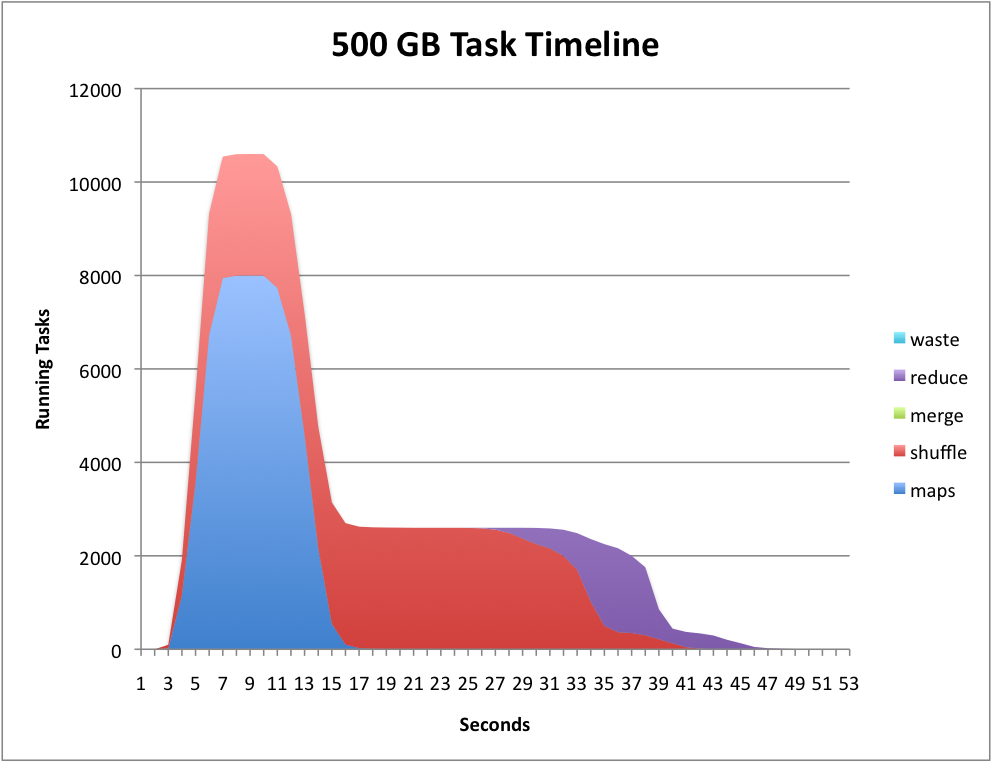

In May 2009, Yahoo announced that it could sort a petabyte of data in 16.25 hours and a terabyte of data in 62 seconds using Hadoop, running on 3658 processors in the first case and 1460 in the second [1]. Their report includes convincing diagrams that show the evolution of the computation as a timeline of map, shuffle, sort, and reduce tasks as a function of time, like the examples shown above.

The graph is generated by parsing one of the many logs that Hadoop writes while a job is running, using a script written by one of the authors of the Yahoo report cited above. Its original name is job_history_summary.py, and it is available from here. We have renamed it generateTimeLine.py.
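The Yahoo script itself is not reproduced here. As a rough illustration of the idea, the following Python sketch turns per-attempt start and end times into the per-second counts that a timeline plots. It assumes each completed task attempt has already been read into a dictionary keyed by history-log field names (TASK_TYPE, START_TIME, FINISH_TIME, plus SHUFFLE_FINISHED and SORT_FINISHED for reduces), with times in milliseconds. Those field names and the counting rule are assumptions of this sketch. phase_intervals and timeline are hypothetical helpers, not functions of generateTimeLine.py.

```python
from collections import defaultdict

# Column order follows the header of the generateTimeLine.py output.
PHASES = ["maps", "shuffle", "merge", "reduce"]


def phase_intervals(attempt):
    """Split one task attempt (a dict of history-log fields) into phase intervals.

    Assumed field names: TASK_TYPE, START_TIME, FINISH_TIME and, for reduces,
    SHUFFLE_FINISHED and SORT_FINISHED, all times in milliseconds.
    """
    start, finish = int(attempt["START_TIME"]), int(attempt["FINISH_TIME"])
    if attempt["TASK_TYPE"] == "MAP":
        return [("maps", start, finish)]
    if attempt["TASK_TYPE"] == "REDUCE":
        shuffled = int(attempt["SHUFFLE_FINISHED"])
        merged = int(attempt["SORT_FINISHED"])
        return [("shuffle", start, shuffled),
                ("merge", shuffled, merged),
                ("reduce", merged, finish)]
    return []  # other attempt types are ignored in this sketch


def timeline(attempts):
    """Return rows [second, maps, shuffle, merge, reduce] of active-attempt counts."""
    intervals = [iv for a in attempts for iv in phase_intervals(a)]
    if not intervals:
        return []
    t0 = min(start for _, start, _ in intervals)  # earliest start = second 0
    counts = defaultdict(lambda: dict.fromkeys(PHASES, 0))
    last_sec = 0
    for phase, start, end in intervals:
        # Count the attempt in every second from the one it starts in
        # to the one it ends in, both relative to t0.
        for sec in range((start - t0) // 1000, (end - t0) // 1000 + 1):
            counts[sec][phase] += 1
        last_sec = max(last_sec, (end - t0) // 1000)
    return [[sec] + [counts[sec][p] for p in PHASES] for sec in range(last_sec + 1)]
```

Printing the header `time maps shuffle merge reduce` and then each returned row gives a table with the same shape as the script output used later in this tutorial. This sketch counts an attempt in both the second it starts and the second it ends. The real script may round differently.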

Generating the Log

First run a MapReduce program. We'll use the WordCount program of Tutorial #1.

- Run the WordCount program on an input directory that contains one or more text files with large documents:

hadoop jar /home/hadoop/352/dft/wordcount_counters/wordcount.jar org.myorg.WordCount dft6 dft6-output

- When the program finishes, find the log of the job you just ran in the log history directory. If you are the only user at the moment, it will be the last job in the list:

ls -ltr ~/hadoop/hadoop/logs/history/
-rwxrwxrwx 1 hadoop hadoop 35578 2010-04-04 14:38 hadoop1_1270135155456_job_201004011119_0014_hadoop_wordcount
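If you would rather pick the newest log from a program, this small Python sketch does what running `ls -ltr` and reading the last line does. The function name latest_history_file and its skip_suffixes filter are hypothetical. The filter is only a precaution in case the history directory also holds files other than job logs.

```python
from pathlib import Path


def latest_history_file(history_dir, skip_suffixes=(".xml", ".crc")):
    """Return the most recently modified job file in history_dir.

    Mirrors taking the last line of `ls -ltr`. Files whose names end in one
    of skip_suffixes are ignored (an assumption; adjust the tuple as needed).
    """
    candidates = [
        p for p in Path(history_dir).expanduser().iterdir()
        if p.is_file() and not p.name.endswith(skip_suffixes)
    ]
    if not candidates:
        raise FileNotFoundError(f"no job history files in {history_dir}")
    # Newest modification time wins, like the last entry of `ls -ltr`.
    return max(candidates, key=lambda p: p.stat().st_mtime)
```

For example, `latest_history_file("~/hadoop/hadoop/logs/history/")` returns a `pathlib.Path` for the newest log.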

- Assuming the file we're interested in is the 0014 one, take a look at it:

less hadoop1_1270135155456_job_201004011119_0014_hadoop_wordcount

- Note that it contains a wealth of information that is fairly cryptic and not easy to decipher.
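Much of that information is stored as KEY="value" pairs. Assume each line starts with a record type and is followed by such pairs, with a backslash escaping special characters inside a value. Under that assumption, the following Python sketch splits a line apart. parse_history_line is a hypothetical helper. The sample line in the usage note was made up for illustration and was not copied from a real log.

```python
import re

# Assumed layout of a history line: a record type, then KEY="value" pairs,
# where a backslash escapes the next character inside a value.
PAIR = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def parse_history_line(line):
    """Split one history-log line into (record_type, {KEY: raw value})."""
    record_type, _, rest = line.strip().partition(" ")
    # Values are returned raw; escape sequences are not undone.
    return record_type, dict(PAIR.findall(rest))
```

For example, `parse_history_line('MapAttempt TASK_TYPE="MAP" START_TIME="1270406200000" .')` returns `('MapAttempt', {'TASK_TYPE': 'MAP', 'START_TIME': '1270406200000'})`.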

- Check that the script generateTimeLine.py is installed on your system:

which generateTimeLine.py

(If the command prints a path to the script, you have it.)

If the script hasn't been installed yet, create an executable file in a directory on your PATH that contains the Yahoo script for parsing the log file. The script is also available here.

- Feed the log file above to the script:

cat hadoop1_1270135155456_job_201004011119_0014_hadoop_wordcount | generateTimeLine.py
time maps shuffle merge reduce
0 1 0 0 0
1 1 0 0 0
2 3 0 0 0
3 4 0 0 0
4 4 0 0 0
5 5 0 0 0
6 5 0 0 0
7 1 1 0 0
8 0 1 0 0
9 0 1 0 0
10 0 1 0 0
11 0 1 0 0
12 0 1 0 0
13 0 1 0 0
14 0 1 0 0
15 0 0 0 1
16 0 0 0 1
17 1 0 0 0

- Copy and paste the output of the script into your favorite spreadsheet software, split it into one column per field, and generate an area chart from the data.
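Spreadsheets usually split comma-separated values into columns automatically on import. Converting the whitespace-separated output to CSV first can therefore save a step. Here is a minimal Python sketch. timeline_to_csv is a hypothetical helper name.

```python
import csv
import io


def timeline_to_csv(text):
    """Convert whitespace-separated generateTimeLine.py output into CSV text."""
    # One list of fields per non-blank line; the header row is kept as-is.
    rows = [line.split() for line in text.splitlines() if line.strip()]
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerows(rows)
    return buf.getvalue()
```

Write the returned string to a file ending in .csv. Most spreadsheet programs will then open it with one field per column, ready for an area chart.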

- You should obtain an area chart similar to the timeline images shown above.

Another Example

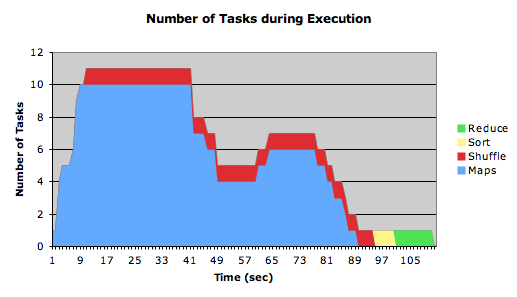

This example shows the timeline of WordCount running on 4 XML text files of 180 MB each on a cluster of 6 single-core Linux servers (data available here).

- Question

- Explain the dip in the middle of the Map tasks.

References

- [1] Owen O'Malley and Arun Murthy, "Hadoop Sorts a Petabyte in 16.25 Hours and a Terabyte in 62 Seconds," http://developer.yahoo.net/blogs, May 2009.