Tutorial: Computing Pi on an AWS MPI-Cluster

--D. Thiebaut, 22:55, 28 October 2013 (EDT)

Updated --D. Thiebaut, 12:13, 16 March 2017 (EDT)

This tutorial is the continuation of the tutorial Creating an MPI-Cluster on AWS. Make sure you go through it first. The tutorial also assumes that you have credentials to access the Amazon AWS system.


Reference Material

I have used

- the MPI Tutorial from mpitutorial.com, and

- the StarCluster quick-start tutorial from the StarCluster project

for inspiration, and adapted them in the present page to set up a cluster for the CSC352 class. The setup uses MIT's StarCluster package.

An MPI C-Program for Approximating Pi

The program below works for any number of processes on an MPI cluster or on a single computer.
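The summation in piN.c can be written out as follows. This is a sketch of the math behind the program. The symbols r (a process rank) and P (the number of processes) are notation introduced here; they correspond to myId and noProcs in the code.

$$
\pi = \int_0^1 \frac{4}{1+x^2}\,dx \;\approx\; \Delta x \sum_{i=0}^{n-1} f(i\,\Delta x),
\qquad f(x) = \frac{4}{1+x^2}, \qquad \Delta x = \frac{1}{n}
$$

$$
\pi \;\approx\; \Delta x \sum_{r=0}^{P-1} S_r,
\qquad S_r = \sum_{i=\lfloor rn/P \rfloor}^{\lfloor (r+1)n/P \rfloor - 1} f(i\,\Delta x)
$$

Process 0 (the manager) computes S_0 itself and receives S_1, ..., S_{P-1} from the workers. Because f is decreasing on [0, 1], this left Riemann sum slightly overestimates pi, and the error shrinks as n grows:

$$
\Delta x \sum_{i=0}^{n-1} f(i\,\Delta x) - \pi \;\approx\; \frac{\Delta x}{2}\bigl(f(0) - f(1)\bigr) = \frac{1}{n}
$$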

```c
// piN.c
// D. Thiebaut
// Computes Pi using N processes under MPI
//
// To compile and run:
//   mpicc -o piN piN.c
//   time mpirun -np 2 ./piN 100000000
//
// Sample output:
//   Process 1 of 2 started on beowulf2. n = 100000000
//   Process 0 of 2 started on beowulf2. n = 100000000
//   100000000 iterations: Pi = 3.141593
//
//   real 0m1.251s
//   user 0m1.240s
//   sys  0m0.000s
//
#include "mpi.h"
#include <stdio.h>
#include <stdlib.h>

#define MANAGER 0

//--------------------------------------------------------------------
// P R O T O T Y P E S
//--------------------------------------------------------------------
void doManager( int, int );
void doWorker( void );

//--------------------------------------------------------------------
// M A I N
//--------------------------------------------------------------------
int main(int argc, char *argv[]) {
  int myId, noProcs, nameLen;
  char procName[MPI_MAX_PROCESSOR_NAME];
  int n;

  if ( argc<2 ) {
    printf( "Syntax: mpirun -np noProcs piN n\n" );
    return 1;
  }

  // get the number of terms in the summation
  n = atoi( argv[1] );

  //--- start MPI ---
  MPI_Init( &argc, &argv );
  MPI_Comm_rank( MPI_COMM_WORLD, &myId );
  MPI_Comm_size( MPI_COMM_WORLD, &noProcs );
  MPI_Get_processor_name( procName, &nameLen );

  //--- display which process we are, and how many there are ---
  printf( "Process %d of %d started on %s. n = %d\n",
          myId, noProcs, procName, n );

  //--- farm out the work: 1 manager, several workers ---
  if ( myId == MANAGER )
    doManager( n, noProcs );
  else
    doWorker( );

  //--- close up MPI ---
  MPI_Finalize();
  return 0;
}

//--------------------------------------------------------------------
// The function to be evaluated
//--------------------------------------------------------------------
double f( double x ) {
  return 4.0 / ( 1.0 + x*x );
}

//--------------------------------------------------------------------
// The manager's main work function. Note that the function
// can and should be made more efficient (and faster) by sending
// an array of 3 ints rather than 3 separate ints to each worker.
// However the current method is explicit and better highlights the
// communication pattern between Manager and Workers.
//--------------------------------------------------------------------
void doManager( int n, int noProcs ) {
  double sum0 = 0, sum1;
  double deltaX = 1.0/n;
  int i, begin, end;
  MPI_Status status;

  //--- first send n and bounds of series to all workers ---
  // Note: (i+1) * n is computed with ints, and overflows once
  // noProcs * n exceeds INT_MAX (about 2.1 billion).
  end = n/noProcs;
  for ( i=1; i<noProcs; i++ ) {
    begin = end;
    end = (i+1) * n / noProcs;
    MPI_Send( &begin, 1, MPI_INT, i /*node i*/, 0, MPI_COMM_WORLD );
    MPI_Send( &end, 1, MPI_INT, i /*node i*/, 0, MPI_COMM_WORLD );
    MPI_Send( &n, 1, MPI_INT, i /*node i*/, 0, MPI_COMM_WORLD );
  }

  //--- perform summation over 1st interval of the series ---
  begin = 0;
  end = n/noProcs;
  for ( i = begin; i < end; i++ )
    sum0 += f( i * deltaX );

  //--- collect the partial sums from all the workers ---
  for ( i=1; i<noProcs; i++ ) {
    MPI_Recv( &sum1, 1, MPI_DOUBLE, MPI_ANY_SOURCE, 0, MPI_COMM_WORLD, &status );
    sum0 += sum1;
  }

  //--- output result ---
  printf( "%d iterations: Pi = %f\n", n, sum0 * deltaX );
}

//--------------------------------------------------------------------
// The worker's main work function. Same comment as for the
// Manager. The 3 ints would benefit from being sent in an array.
//--------------------------------------------------------------------
void doWorker( void ) {
  int begin, end, n, i;
  MPI_Status status;

  //--- get n and bounds for summation from manager ---
  MPI_Recv( &begin, 1, MPI_INT, MPI_ANY_SOURCE, 0, MPI_COMM_WORLD, &status );
  MPI_Recv( &end, 1, MPI_INT, MPI_ANY_SOURCE, 0, MPI_COMM_WORLD, &status );
  MPI_Recv( &n, 1, MPI_INT, MPI_ANY_SOURCE, 0, MPI_COMM_WORLD, &status );

  //--- sum over boundaries received ---
  double sum = 0;
  double deltaX = 1.0/n;
  for ( i=begin; i< end; i++ )
    sum += f( i * deltaX );

  //--- send result to manager ---
  MPI_Send( &sum, 1, MPI_DOUBLE, MANAGER, 0, MPI_COMM_WORLD );
}
```
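You can check the manager/worker decomposition without a cluster by simulating it sequentially. The following is a minimal sketch for verifying the arithmetic only. partialSum() and piSequential() are hypothetical helpers, not part of piN.c. Each rank's interval [rank*n/noProcs, (rank+1)*n/noProcs) is computed the same way doManager() does, except that the bounds use 64-bit arithmetic. The partial sums are then added as the manager would add them.

```c
#include <assert.h>

/* Integrand used by piN.c: its integral over [0,1] is pi. */
static double f(double x) {
    return 4.0 / (1.0 + x * x);
}

/* Partial sum that process `rank` (0 <= rank < noProcs) would compute.
   The bounds are computed in long long, so (rank + 1) * n cannot overflow. */
double partialSum(long long n, int rank, int noProcs) {
    assert(n > 0 && noProcs > 0 && rank >= 0 && rank < noProcs);
    double deltaX = 1.0 / n;
    long long begin = (long long) rank * n / noProcs;
    long long end = (long long) (rank + 1) * n / noProcs;
    double sum = 0.0;
    for (long long i = begin; i < end; i++)
        sum += f(i * deltaX);
    return sum;
}

/* Sequential stand-in for the manager: adds the partial sums of all
   noProcs "processes" and scales the total by deltaX = 1/n. */
double piSequential(long long n, int noProcs) {
    double total = 0.0;
    for (int rank = 0; rank < noProcs; rank++)
        total += partialSum(n, rank, noProcs);
    return total * (1.0 / n);
}
```

This needs only an ordinary C compiler (no MPI). Printing piSequential(10000000, 10) with %f should give 3.141593, the same value the 10-node run prints for that n.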

Creating a 10-node cluster

If you are using the AWS account that was given to you in class, you must give your clusters unique names. All the clusters created with that account are stored in the same "space" as your instructor's clusters. Therefore, make sure you suffix your cluster names with your initials!

- Connect to the machine on which StarCluster is installed (your laptop, or aurora).

- Make sure you do not have a cluster running:

starcluster listclusters

- Make sure that no cluster with your initials appears in the output. If you see one, terminate it (replace ABC with your initials):

starcluster terminate myclusterABC

- Or, if the first command is not successful:

starcluster terminate -f myclusterABC

- Edit the starcluster configuration file on your machine (laptop or aurora):

emacs -nw ~/.starcluster/config

- Locate the following lines, and set the number of nodes to 10:

```
# number of ec2 instances to launch
CLUSTER_SIZE = 10
```

- Save the config file.

- Start up a new cluster of 10 instances. It's fine to use the same name:

starcluster start myclusterABC

StarCluster - (http://star.mit.edu/cluster) (v. 0.95.6)

Software Tools for Academics and Researchers (STAR)

Please submit bug reports to starcluster@mit.edu

*** WARNING - Setting 'EC2_PRIVATE_KEY' from environment...

*** WARNING - Setting 'EC2_CERT' from environment...

>>> Using default cluster template: smallcluster

.

.

.

You can activate a 'stopped' cluster by passing the -x

option to the 'start' command:

$ starcluster start -x myclusterABC

This will start all 'stopped' nodes and reconfigure the

cluster.

SSH to the Master node

SSH to the master node, and become user sgeadmin:

```
starcluster sshmaster myclusterABC
...
root@master:~# su - sgeadmin
sgeadmin@master:~$
```

Upload the piN.c Program to the Cluster

This step uploads a program to your AWS cluster from the machine on which you type your starcluster commands. If you run starcluster directly on your laptop, the piN.c program must be on your laptop. If you enter starcluster commands on aurora, piN.c must be on aurora.

- Go to the directory where your version of piN.c is located.

cd pathToWhereYourFileIsLocated

- Upload piN.c to your AWS cluster:

starcluster put myclusterABC --user sgeadmin piN.c .

(Don't forget the dot at the end: it is the destination directory on the cluster.)

Create a host file

By default, mpirun.mpich2 launches all the processes on the node where you run the command, as if the cluster contained only that one node. To make it launch processes on the other nodes of the cluster, list the names of all the nodes in a file called the host file. Then pass that file to mpirun.mpich2 with the -f option.

StarCluster uses a simple naming scheme for the nodes: the master node is called master, and the workers are called node001, node002, etc.

- You can use emacs to create a file called hosts in your home directory that contains the names of your nodes, one per line. Or you can create it with a one-line bash command:

sgeadmin@master:~$ cat /etc/hosts | tail -n 10 | cut -d' ' -f 2 > hosts

- Verify that the hosts file contains the required information. The command above keeps the last 10 lines of /etc/hosts, one per node, so change the 10 if your cluster has a different size.

```
sgeadmin@master:~$ cat hosts
master
node001
node002
node003
node004
node005
node006
node007
node008
node009
```

This list will be used by the mpirun.mpich2 program to figure out which node to distribute the processes to.
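If the tail of /etc/hosts ever contains extra entries, you can also generate the host file from the naming convention alone. The following is a minimal C sketch. hostName() and writeHostFile() are hypothetical helpers, and the three-digit zero padding of node numbers is assumed from the node001 ... node009 listing above.

```c
#include <assert.h>
#include <stdio.h>

/* Writes the name of node `index` into buf: index 0 is "master", and
   index k >= 1 is "node%03d" (3-digit padding assumed from node001).
   Returns the length of the name, as snprintf does. */
int hostName(char *buf, size_t size, int index) {
    assert(index >= 0);
    if (index == 0)
        return snprintf(buf, size, "master");
    return snprintf(buf, size, "node%03d", index);
}

/* Writes a host file for a cluster of clusterSize nodes, one name per
   line, and returns the number of names written. */
int writeHostFile(FILE *out, int clusterSize) {
    char name[32];
    for (int k = 0; k < clusterSize; k++) {
        hostName(name, sizeof name, k);
        fprintf(out, "%s\n", name);
    }
    return clusterSize;
}
```

Calling writeHostFile() with a file opened by fopen("hosts", "w") and a cluster size of 10 writes the same ten names as the cat hosts listing: master, then node001 through node009.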

Compile and Run the piN.c Program on the 10-node Cluster

```
sgeadmin@master:~$ mpicc -o piN piN.c
sgeadmin@master:~$ mpirun.mpich2 -n 10 -f hosts ./piN 10000000
Process 0 of 10 started on master. n = 10000000
Process 7 of 10 started on node007. n = 10000000
Process 3 of 10 started on node003. n = 10000000
Process 6 of 10 started on node006. n = 10000000
Process 2 of 10 started on node002. n = 10000000
Process 8 of 10 started on node008. n = 10000000
Process 9 of 10 started on node009. n = 10000000
Process 5 of 10 started on node005. n = 10000000
Process 4 of 10 started on node004. n = 10000000
Process 1 of 10 started on node001. n = 10000000
10000000 iterations: Pi = 3.141593
```

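If you try larger values of n, you will find that the computed value of pi can get noticeably off the mark, as in the timing run below. The formula is not to blame, since its error shrinks as n grows. The culprit is integer overflow in doManager(). The expression (i+1) * n / noProcs is evaluated with 32-bit ints, and (i+1) * n exceeds INT_MAX (2,147,483,647) as soon as noProcs * n does, for example with n = 1,000,000,000 and 10 processes. The product then wraps around (technically undefined behavior in C), and the workers receive meaningless bounds. Computing the product in 64-bit arithmetic, as in (long long)(i+1) * n / noProcs, avoids the problem.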
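With 32-bit ints, the bound computation end = (i+1) * n / noProcs in doManager() overflows once noProcs * n exceeds INT_MAX. The following is a minimal sketch of how to compute that limit and the overflow-free bound. It assumes a 32-bit int, the usual size on 64-bit Linux. maxSafeN() and upperBound() are hypothetical helper names.

```c
#include <assert.h>
#include <limits.h>

/* Largest n for which (i+1) * n, with i+1 <= noProcs, still fits in an
   int, i.e. for which the int arithmetic in doManager() cannot overflow. */
long long maxSafeN(int noProcs) {
    assert(noProcs > 0);
    return INT_MAX / noProcs;
}

/* Overflow-free version of the bound computed in doManager(): the
   multiplication is done in long long, and the result (<= n) fits in an int. */
int upperBound(int i, int n, int noProcs) {
    return (int) ((long long) (i + 1) * n / noProcs);
}
```

For 10 processes, maxSafeN(10) is 214,748,364. The run with n = 10,000,000 is therefore safe, while n = 1,000,000,000 is not. In doManager(), writing end = (int) ((long long) (i+1) * n / noProcs); applies the same fix.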

Timing the Execution of your MPI Program

```
sgeadmin@master:~$ time mpirun.mpich2 -n 10 -f hosts ./piN 1000000000
Process 0 of 10 started on master. n = 1000000000
Process 3 of 10 started on node003. n = 1000000000
Process 6 of 10 started on node006. n = 1000000000
Process 2 of 10 started on node002. n = 1000000000
Process 4 of 10 started on node004. n = 1000000000
Process 7 of 10 started on node007. n = 1000000000
Process 9 of 10 started on node009. n = 1000000000
Process 1 of 10 started on node001. n = 1000000000
Process 5 of 10 started on node005. n = 1000000000
Process 8 of 10 started on node008. n = 1000000000
1000000000 iterations: Pi = 3.171246

real 0m4.911s
user 0m4.072s
sys  0m0.156s
```

Challenge 1

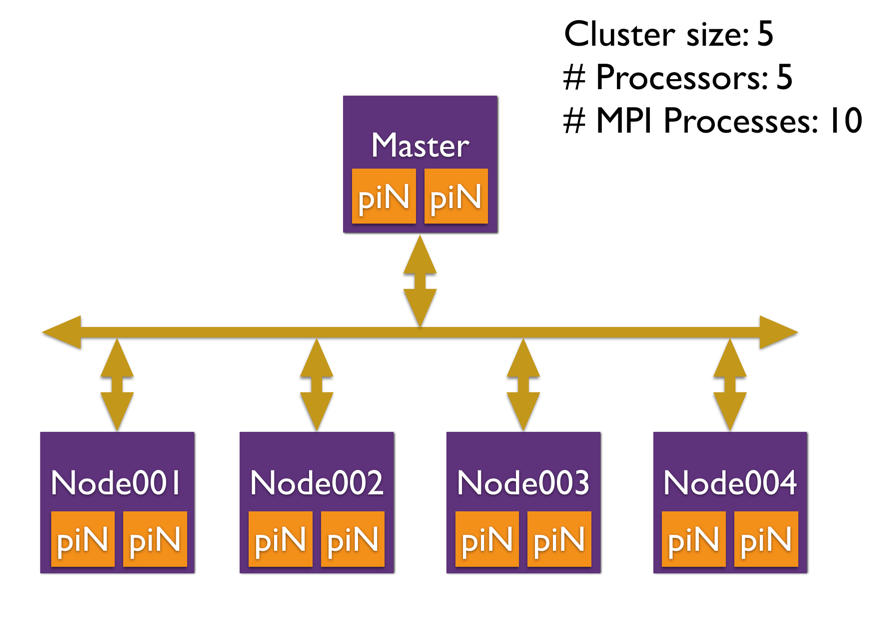

- Running P MPI processes on P nodes seems natural, but nothing limits you to one process per node. The figure above shows a situation where 2P MPI processes run on P nodes.

- Keep the number of terms N in the summation constant, and vary the number of MPI processes: make it equal to the number of nodes in your cluster, then twice the number of nodes, three times, etc. Time the execution of the parallel program for each setting, and see whether one configuration runs faster than the others.
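Converting the measured real times into speedup and efficiency figures can make the configurations easier to compare. The following is a minimal sketch. It assumes that the reference time comes from a one-process run with the same N. speedup() and efficiency() are hypothetical helpers.

```c
#include <assert.h>

/* Speedup of a run relative to a reference run, S = tRef / tP,
   computed from wall-clock ("real") times in seconds. */
double speedup(double tRef, double tP) {
    assert(tRef > 0.0 && tP > 0.0);
    return tRef / tP;
}

/* Parallel efficiency E = S / p for a run on p processes:
   1.0 means perfect scaling, lower values mean overhead. */
double efficiency(double tRef, double tP, int p) {
    assert(p > 0);
    return speedup(tRef, tP) / p;
}
```

For instance, with made-up times of 40 s for one process and 5 s for 10 processes, speedup(40.0, 5.0) is 8 and efficiency(40.0, 5.0, 10) is 0.8.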

Challenge 2

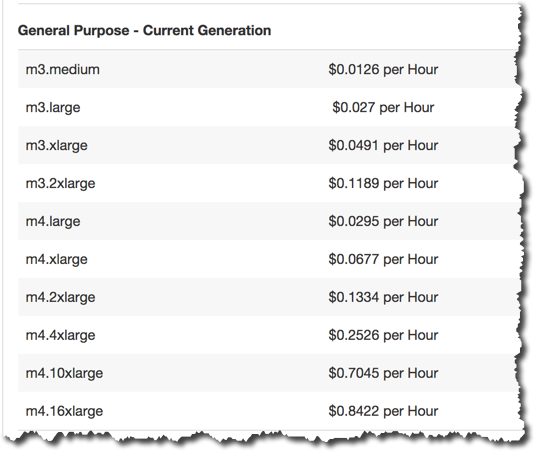

Terminate your cluster, and edit your StarCluster config file (emacs ~/.starcluster/config). Change the instance type from m3.medium to m3.large. An m3.large instance provides 2 cores (which Amazon calls virtual CPUs), whereas an m3.medium provides 1. Restart your cluster, upload your piN.c file again, compile it, create a host file, and perform the same measurements you did in Challenge 1. Do you observe any improvement in execution speed?

When you are done, return your instance type to m3.medium.

Challenge 3

Using the charts below, figure out how much it cost to run your MPI application on AWS on the two different clusters (assuming you were using spot instances, not free-tier instances). Note that Amazon charges by the hour: if you use 5 minutes of time on your cluster (between start and stop operations), Amazon charges for a full hour of computer time. Also, if your cluster has 10 machines, you need to multiply the price of an instance by 10!
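The billing rule above can be turned into a small calculation. The following is a minimal sketch. It assumes that every started hour is billed in full for every instance, as described above. clusterCost() is a hypothetical helper, and the hourly price passed to it must come from the charts.

```c
#include <assert.h>

/* Cost of a cluster session when every started hour is billed in full,
   for each of the `nodes` instances, at pricePerHour dollars per hour. */
double clusterCost(int nodes, int minutesUsed, double pricePerHour) {
    assert(nodes > 0 && minutesUsed >= 0 && pricePerHour >= 0.0);
    int billedHours = (minutesUsed + 59) / 60;   /* round up to whole hours */
    return nodes * billedHours * pricePerHour;
}
```

With a made-up price of $0.01 per hour, clusterCost(10, 5, 0.01) gives 0.10 (one billed hour for each of 10 instances). clusterCost(10, 61, 0.01) gives 0.20, because 61 minutes count as two hours.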

Types of Instances

For information, below is the comparison chart of the different instance types available as spot instances. Spot prices (see aws.amazon.com/ec2/spot/pricing) are set by Amazon EC2 and fluctuate periodically depending on the supply of and demand for Spot instance capacity. The table below is taken from Amazon's spot pricing page and displays the Spot price for each region and instance type. The page is updated every 5 minutes.

The default we set in the StarCluster config file is m3.medium, but many other instance types are available. Of course, the more powerful machines come at a higher price. However, some of them have many cores, so their higher cost may be worth it if your program's performance scales with the number of cores.