Hadoop Tutorial 1 -- Running WordCount (rev 3)

--D. Thiebaut (talk) 13:26, 4 January 2017 (EST)


This tutorial introduces you to the Hadoop Cluster in the Computer Science Dept. at Smith College, and shows you how to submit jobs on it. This is Version 3 of this page, and it was tested with Hadoop Version 2.7.3. The list of Hadoop/MapReduce tutorials is available here.


Before You Start

- You should be familiar with Hadoop. There are several on-line pages and tutorials that have excellent information. Make sure you browse them first!

- MapReduce Tutorial at apache.org. A must-read!

- Hadoop Tutorial at Yahoo!. Also very good!

- Section 6 in Tom White's Hadoop, the Definitive Guide is also good reading material.

- This tutorial is heavily based on, and adapted from, the wordcount example found in this excellent Apache tutorial.

Login

Depending on where you are, this step will vary. Just proceed using the login account that was given to you for the Hadoop machine you'll be working with.

Basic Hadoop Admin Commands

(Taken from Hadoop Wiki's Getting Started with Hadoop):

The ~/hadoop/bin directory contains some scripts used to launch Hadoop DFS and Hadoop Map/Reduce daemons. These are:

- start-all.sh - Starts all Hadoop daemons, the namenode, datanodes, the jobtracker and tasktrackers.

- stop-all.sh - Stops all Hadoop daemons.

- start-mapred.sh - Starts the Hadoop Map/Reduce daemons, the jobtracker and tasktrackers.

- stop-mapred.sh - Stops the Hadoop Map/Reduce daemons.

- start-dfs.sh - Starts the Hadoop DFS daemons, the namenode and datanodes.

- stop-dfs.sh - Stops the Hadoop DFS daemons.

Running the Map-Reduce WordCount Program

- We'll take the example directly from Michael Noll's Tutorial (1-node cluster tutorial), and count the frequency of words occurring in James Joyce's Ulysses.

Creating a working directory for your data

- If you haven't done so, ssh to driftwood with the user account that was given to you and create a directory for yourself. We'll use dft as an example in this tutorial, but use your own identifier.

hadoop@hadoop102:~$ cd
hadoop@hadoop102:~$ cd 352
hadoop@hadoop102:~/352$ mkdir dft      (replace dft by your favorite identifier)
hadoop@hadoop102:~/352$ cd dft

Creating/Downloading Data Locally

In order to process a text file with hadoop, you first need to download the file to a personal directory in the hadoop account, then copy it to the Hadoop File System (HDFS) so that the hadoop namenode and datanodes can share it.

Creating a local copy for User Hadoop

- Download a copy of James Joyce's Ulysses:

hadoop@hadoop102:~/352/dft$ wget http://www.gutenberg.org/files/4300/4300-0.txt
hadoop@hadoop102:~/352/dft$ mv 4300-0.txt 4300.txt
hadoop@hadoop102:~/352/dft$ head -50 4300.txt

- Verify that you read:

"Stately, plump Buck Mulligan came from the stairhead, bearing a bowl of lather on which a mirror and a razor lay crossed."


Copy Data File to HDFS

- Create a dft (or whatever your identifier is) directory in the Hadoop File System (HDFS) and copy the data file 4300.txt to it:

x@x:~$ cd 352
x@x:~/352$ cd dft
x@x:~/352/dft$ hadoop fs -mkdir -p dft
x@x:~/352/dft$ hadoop fs -copyFromLocal 4300.txt dft
x@x:~/352/dft$ hadoop fs -ls
x@x:~/352/dft$ hadoop fs -ls dft

- Verify that your directory is now in the Hadoop File System, as indicated above, and that it contains the 4300.txt file, of size 1.5 MBytes.

WordCount.java Map-Reduce Program

- Here's the full WordCount program:

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCount {

  public static class TokenizerMapper
       extends Mapper<Object, Text, Text, IntWritable>{

    private final static IntWritable one = new IntWritable(1);
    private Text word = new Text();

    public void map(Object key, Text value, Context context
                    ) throws IOException, InterruptedException {
      StringTokenizer itr = new StringTokenizer(value.toString());
      while (itr.hasMoreTokens()) {
        word.set(itr.nextToken());
        context.write(word, one);
      }
    }
  }

  public static class IntSumReducer
       extends Reducer<Text,IntWritable,Text,IntWritable> {
    private IntWritable result = new IntWritable();

    public void reduce(Text key, Iterable<IntWritable> values,
                       Context context
                       ) throws IOException, InterruptedException {
      int sum = 0;
      for (IntWritable val : values) {
        sum += val.get();
      }
      result.set(sum);
      context.write(key, result);
    }
  }

  public static void main(String[] args) throws Exception {
    Configuration conf = new Configuration();
    Job job = Job.getInstance(conf, "word count");
    job.setJarByClass(WordCount.class);
    job.setMapperClass(TokenizerMapper.class);
    job.setCombinerClass(IntSumReducer.class);
    job.setReducerClass(IntSumReducer.class);
    job.setOutputKeyClass(Text.class);
    job.setOutputValueClass(IntWritable.class);
    FileInputFormat.addInputPath(job, new Path(args[0]));
    FileOutputFormat.setOutputPath(job, new Path(args[1]));
    System.exit(job.waitForCompletion(true) ? 0 : 1);
  }
}

- The program has several sections:

The map section

  public static class TokenizerMapper
       extends Mapper<Object, Text, Text, IntWritable>{

    private final static IntWritable one = new IntWritable(1);
    private Text word = new Text();

    public void map(Object key, Text value, Context context
                    ) throws IOException, InterruptedException {
      StringTokenizer itr = new StringTokenizer(value.toString());
      while (itr.hasMoreTokens()) {
        word.set(itr.nextToken());
        context.write(word, one);
      }
    }
  }

The TokenizerMapper class takes the lines of text that are fed to it (Hadoop automatically breaks the text files into lines, so there is no need for us to do it) and breaks them into words. For each word it outputs a datagram that is a ( String, int ) tuple of the form ( "some-word", 1 ). Each tuple records a single occurrence of a word, so its frequency is always 1; a word that appears several times simply generates several tuples.
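To make the mapper's output concrete, here is a minimal sketch in plain Java that imitates what map() does to one line of text. It is only an illustration: the class name MapSketch and the sample sentence are made up for this example, and ordinary String/Integer pairs stand in for Hadoop's Text and IntWritable.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;

// Plain-Java imitation of TokenizerMapper.map(); no Hadoop classes are used.
// MapSketch is a hypothetical name chosen for this illustration.
class MapSketch {

    // Returns one (word, 1) pair per token of the line, in order of appearance.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer itr = new StringTokenizer(line);  // splits on whitespace
        while (itr.hasMoreTokens()) {
            pairs.add(new SimpleEntry<>(itr.nextToken(), 1));
        }
        return pairs;
    }

    public static void main(String[] args) {
        // "the" appears twice, so it is emitted twice, each time with a count of 1.
        for (Map.Entry<String, Integer> p : map("the cat saw the dog")) {
            System.out.println("( \"" + p.getKey() + "\", " + p.getValue() + " )");
        }
    }
}
```

Note that the mapper does no counting of its own: adding up the 1s is left to the combine and reduce stages.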

The reduce section

  public static class IntSumReducer
       extends Reducer<Text,IntWritable,Text,IntWritable> {
    private IntWritable result = new IntWritable();

    public void reduce(Text key, Iterable<IntWritable> values,
                       Context context
                       ) throws IOException, InterruptedException {
      int sum = 0;
      for (IntWritable val : values) {
        sum += val.get();
      }
      result.set(sum);
      context.write(key, result);
    }
  }

The reduce section gets collections of datagrams of the form [( word, n1 ), (word, n2)...] where all the words are the same, but with different numbers. These collections are the result of a sorting process that is integral to Hadoop and which gathers all the datagrams with the same word together. The reduce process gathers the datagrams inside a datanode, and also gathers datagrams from the different datanodes into a final collection of datagrams where all the words are now unique, with their total frequency (number of occurrences).
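The grouping and summing described above can be sketched in plain Java as follows. This is an illustration under simplifying assumptions, not Hadoop's actual shuffle: a TreeMap stands in for the sort that groups datagrams by word, and the names ReduceSketch and groupByWord are made up for this example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java imitation of the sort/shuffle step followed by IntSumReducer.reduce().
// ReduceSketch and groupByWord are hypothetical names for this illustration.
class ReduceSketch {

    // Stand-in for the sort/shuffle: gathers all counts emitted for the same word.
    static Map<String, List<Integer>> groupByWord(String[] words, int[] counts) {
        Map<String, List<Integer>> groups = new TreeMap<>();
        for (int i = 0; i < words.length; i++) {
            groups.computeIfAbsent(words[i], k -> new ArrayList<>()).add(counts[i]);
        }
        return groups;
    }

    // Mirrors the loop in IntSumReducer.reduce(): adds up all the counts for one word.
    static int reduce(Iterable<Integer> values) {
        int sum = 0;
        for (int val : values) {
            sum += val;
        }
        return sum;
    }

    public static void main(String[] args) {
        String[] words = {"the", "cat", "the", "dog", "the"};
        int[] counts = {1, 1, 1, 1, 1};
        // One output line per unique word, with its total frequency.
        groupByWord(words, counts)
            .forEach((word, values) -> System.out.println(word + "\t" + reduce(values)));
    }
}
```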

The map-reduce organization section

    job.setMapperClass(TokenizerMapper.class);
    job.setCombinerClass(IntSumReducer.class);
    job.setReducerClass(IntSumReducer.class);

Here we see that the combining stage and the reduce stage are implemented by the same reduce class, which makes sense, since the number of occurrences of a word as generated on several datanodes is just the sum of the numbers of occurrences.
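The reason one class can serve both stages is that addition can be applied in any grouping: summing partial sums gives the same totals as summing everything at once. The sketch below checks this on two tiny, made-up input splits. It is plain Java with hypothetical names (CombinerSketch, combine, reduce), not Hadoop code.

```java
import java.util.Map;
import java.util.TreeMap;

// Shows that combining per split and then reducing gives the same totals
// as counting the whole input in one pass. All names here are hypothetical.
class CombinerSketch {

    // Counts the words of one input split; plays the role of map + combine on one node.
    static Map<String, Integer> combine(String split) {
        Map<String, Integer> partial = new TreeMap<>();
        for (String w : split.trim().split("\\s+")) {
            if (!w.isEmpty()) {
                partial.merge(w, 1, Integer::sum);
            }
        }
        return partial;
    }

    // Adds the partial counts coming from several nodes; same summing logic as combine.
    @SafeVarargs
    static Map<String, Integer> reduce(Map<String, Integer>... partials) {
        Map<String, Integer> total = new TreeMap<>();
        for (Map<String, Integer> p : partials) {
            p.forEach((w, n) -> total.merge(w, n, Integer::sum));
        }
        return total;
    }

    public static void main(String[] args) {
        Map<String, Integer> viaCombiner = reduce(combine("a b a"), combine("a c"));
        Map<String, Integer> direct = combine("a b a a c");
        System.out.println(viaCombiner);                 // prints {a=3, b=1, c=1}
        System.out.println(viaCombiner.equals(direct));  // prints true
    }
}
```

The benefit of the combiner is that fewer datagrams have to travel between nodes: in the sample run shown later in this tutorial, the counters report 535896 combine input records but only 98630 combine output records.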

The datagram definitions

    job.setOutputKeyClass(Text.class);
    job.setOutputValueClass(IntWritable.class);

As the documentation indicates, the datagrams are of the form (String, int).

Compiling WordCount

- Create your own copy of WordCount.java in your 352/xxx directory.

- Compile it

x@x:~/352/dft$ hadoop com.sun.tools.javac.Main WordCount.java

- Create a jar file

x@x:~/352/dft$ jar cf wc.jar WordCount*.class

- Make sure you have two new files in your directory, a .class and .jar file:

x@x:~/352/dft$ ls -ltr | tail -2
-rw-r--r-- 1 hduser cats 1491 Jan 4 14:54 WordCount.class
-rw-r--r-- 1 hduser cats 3075 Jan 4 14:57 wc.jar

Running WordCount

Run the WordCount java program as follows:

x@x:~/352/dft$ hadoop jar wc.jar WordCount dft dft-output

The program takes about 10 seconds to execute on the host. The output generated will look something like this:

17/01/04 15:00:55 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

17/01/04 15:00:55 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=

17/01/04 15:00:55 WARN mapreduce.JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

17/01/04 15:00:55 INFO input.FileInputFormat: Total input paths to process : 2

17/01/04 15:00:55 INFO mapreduce.JobSubmitter: number of splits:2

17/01/04 15:00:55 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local116824815_0001

17/01/04 15:00:55 INFO mapreduce.Job: The url to track the job: http://localhost:8080/

17/01/04 15:00:55 INFO mapreduce.Job: Running job: job_local116824815_0001

17/01/04 15:00:55 INFO mapred.LocalJobRunner: OutputCommitter set in config null

17/01/04 15:00:55 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1

[some lines removed...]

17/01/04 15:00:58 INFO mapred.LocalJobRunner: 2 / 2 copied.

17/01/04 15:00:58 INFO mapred.Task: Task attempt_local116824815_0001_r_000000_0 is allowed to commit now

17/01/04 15:00:58 INFO output.FileOutputCommitter: Saved output of task 'attempt_local116824815_0001_r_000000_0' to hdfs://localhost:9000/user/hduser/dft-output/_temporary/0/task_local116824815_0001_r_000000

17/01/04 15:00:58 INFO mapred.LocalJobRunner: reduce > reduce

17/01/04 15:00:58 INFO mapred.Task: Task 'attempt_local116824815_0001_r_000000_0' done.

17/01/04 15:00:58 INFO mapred.LocalJobRunner: Finishing task: attempt_local116824815_0001_r_000000_0

17/01/04 15:00:58 INFO mapred.LocalJobRunner: reduce task executor complete.

17/01/04 15:00:58 INFO mapreduce.Job: map 100% reduce 100%

17/01/04 15:00:58 INFO mapreduce.Job: Job job_local116824815_0001 completed successfully

17/01/04 15:00:59 INFO mapreduce.Job: Counters: 35

File System Counters

FILE: Number of bytes read=2877952

FILE: Number of bytes written=5875924

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=7904635

HDFS: Number of bytes written=525803

HDFS: Number of read operations=22

HDFS: Number of large read operations=0

HDFS: Number of write operations=5

Map-Reduce Framework

Map input records=65154

Map output records=535896

Map output bytes=5219042

Map output materialized bytes=1433616

Input split bytes=224

Combine input records=535896

Combine output records=98630

Reduce input groups=49315

Reduce shuffle bytes=1433616

Reduce input records=98630

Reduce output records=49315

Spilled Records=197260

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=159

Total committed heap usage (bytes)=1388838912

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=3161854

File Output Format Counters

Bytes Written=525803

If you need to kill your job...

- If for any reason your job is not completing correctly (maybe some "Too many fetch failure" errors?), locate your job Id (job_local116824815_0001 in our case), and kill it:

x@x:~/352/dft$ hadoop job -kill job_local116824815_0001

Getting the Output

- Let's take a look at the output of the program:

x@x:~/352/dft$ hadoop fs -ls
Found 2 items
drwxr-xr-x - hduser supergroup 0 2017-01-04 14:23 dft
drwxr-xr-x - hduser supergroup 0 2017-01-04 15:00 dft-output

- Verify that a new directory with -output at the end of your identifier has been created.

- Look at the contents of this output directory:

x@x:~/352/dft$ hadoop fs -ls dft-output
Found 2 items
-rw-r--r-- 1 hduser supergroup 0 2017-01-04 15:00 dft-output/_SUCCESS
-rw-r--r-- 1 hduser supergroup 525803 2017-01-04 15:00 dft-output/part-r-00000

- Important Note: depending on the settings stored in your hadoop-site.xml file, you may have more than one output file, and they may be compressed (gzipped).

- Finally, let's take a look at the output

x@x:~/352/dft$ hadoop fs -cat dft-output/part-r-00000 | less

- And we get

"YOU 2
#4300] 2
$5,000) 2
% 6
✠. 2
&c, 4
&c. 2
($1 2
(1 4
(1) 2
(1/4d, 2
. . .
“Come 2
“Defects,” 2
“I 2
“Information 2
“J” 2
“Plain 4
“Project 10
“Right 2
“Viator” 2
• 2

- If we wanted to copy the output file to our local storage (remember, the output is automatically created in the HDFS world, and we have to copy the data from there to our file system to work on it):

x@x:~/352/dft$ cd ~/352/dft
x@x:~/352/dft$ hadoop fs -copyToLocal dft-output/part-r-00000 .
x@x:~/352/dft$ ls
4300.txt part-r-00000
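Once the output file is on the local file system, it can be processed with ordinary programs. As a sketch, the plain-Java class below lists the most frequent words. It assumes each line of part-r-00000 holds a word, a tab, and a count (the default layout of Hadoop's text output); the class name TopWords and the built-in sample lines are hypothetical.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

// Reads "word<TAB>count" lines and reports the most frequent words.
// TopWords is a hypothetical helper, not part of Hadoop.
class TopWords {

    // Parses the lines and returns the n entries with the highest counts.
    static List<Map.Entry<String, Integer>> top(List<String> lines, int n) {
        List<Map.Entry<String, Integer>> entries = new ArrayList<>();
        for (String line : lines) {
            int tab = line.lastIndexOf('\t');
            if (tab < 0) {
                continue;  // skip lines that are not word/count pairs
            }
            String word = line.substring(0, tab);
            int count = Integer.parseInt(line.substring(tab + 1).trim());
            entries.add(new SimpleEntry<>(word, count));
        }
        // Sort by count, highest first; ties keep their original order.
        entries.sort((a, b) -> b.getValue().compareTo(a.getValue()));
        return entries.subList(0, Math.min(n, entries.size()));
    }

    public static void main(String[] args) throws IOException {
        // Usage (assumed): java TopWords part-r-00000
        // Without an argument, a few sample lines are used instead.
        List<String> lines = args.length > 0
            ? Files.readAllLines(Paths.get(args[0]), StandardCharsets.UTF_8)
            : Arrays.asList("#4300]\t2", "%\t6", "&c,\t4");
        for (Map.Entry<String, Integer> e : top(lines, 10)) {
            System.out.println(e.getValue() + "\t" + e.getKey());
        }
    }
}
```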

- To remove the output directory (recursively going through directories if necessary):

x@x:~/352/dft$ hadoop dfs -rm -r dft-output

- Note that the Hadoop program WordCount will not run another time if the output directory exists. It always wants to create a new one, so we'll have to remove the output directory regularly after having saved the output of each job.


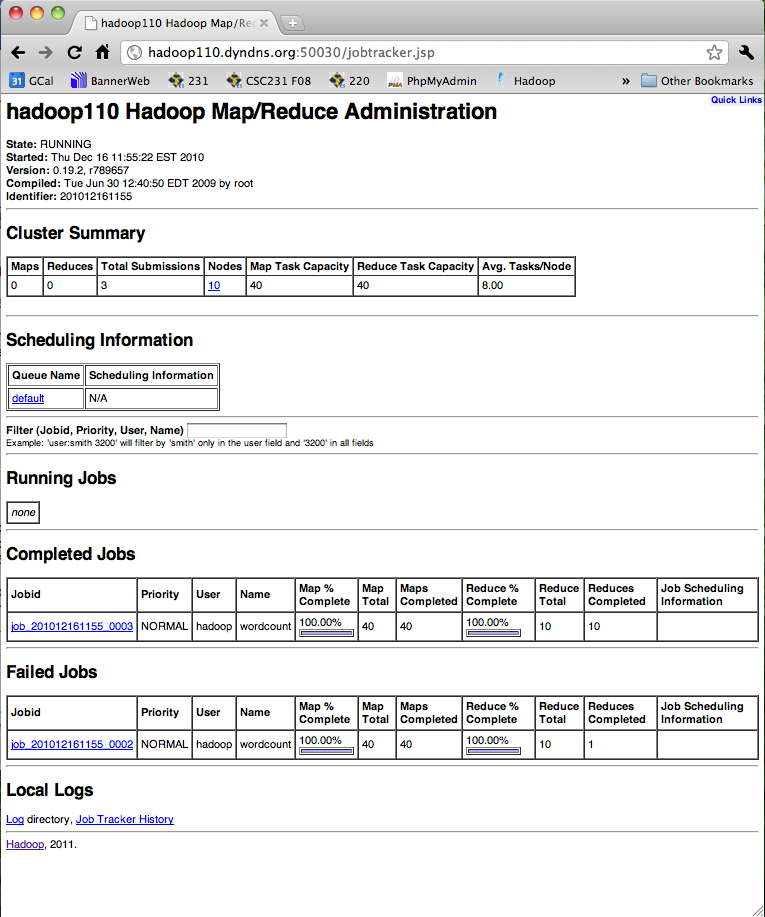

Analyzing the Hadoop Logs

Hadoop keeps track of several logs of the execution of your programs. They are located in the logs sub-directory in the hadoop directory. Some of the same logs are also available from the hadoop Web GUI: http://hadoop110.dyndns.org:50030/jobtracker.jsp

Accessing Logs through the Command Line

Here is an example of the logs in ~/hadoop/logs

cd
cd /mnt/win/data/logs/
ls -ltr
-rwxrwxrwx 1 root root 15862 Jan 6 09:58 job_201012161155_0004_conf.xml
drwxrwxrwx 1 root root 4096 Jan 6 09:58 history
drwxrwxrwx 1 root root 368 Jan 6 09:58 userlogs
cd history
ls -ltr
-rwxrwxrwx 1 root root 15862 Jan 6 09:58 hadoop110_1292518522985_job_201012161155_0004_conf.xml
-rwxrwxrwx 1 root root 102324 Jan 6 10:02 hadoop110_1292518522985_job_201012161155_0004_hadoop_wordcount

The last log listed in the history directory is interesting. It contains the start and end time of all the tasks that ran during the execution of our Hadoop program.

It contains several different types of lines:

- Lines starting with "Job", which refer to the job and list information about it (priority, submit time, configuration, number of map tasks, number of reduce tasks, etc.).

Job JOBID="job_201004011119_0025" LAUNCH_TIME="1270509980407" TOTAL_MAPS="12" TOTAL_REDUCES="1" JOB_STATUS="PREP"

- Lines starting with "Task" referring to the creation or completion of Map or Reduce tasks, indicating which host they start on, and which split they work on. On completion, all the counters associated with the task are listed.

Task TASKID="task_201012161155_0004_m_000000" TASK_TYPE="MAP" START_TIME="1294325917422"\

SPLITS="/default-rack/hadoop103,/default-rack/hadoop109,/default-rack/hadoop102"

MapAttempt TASK_TYPE="MAP" TASKID="task_201012161155_0004_m_000000" \

TASK_ATTEMPT_ID="attempt_201012161155_0004_m_000000_0" TASK_STATUS="SUCCESS"

FINISH_TIME="1294325918358" HOSTNAME="/default-rack/hadoop110"

...

[(MAP_OUTPUT_BYTES)(Map output bytes)(66441)][(MAP_INPUT_BYTES)(Map input bytes)(39285)]

[ (COMBINE_INPUT_RECORDS)(Combine input records)(7022)][(MAP_OUTPUT_RECORDS)

(Map output records)(7022)]}" .

- Lines starting with "MapAttempt", reporting mostly status update, except if they contain the keywords SUCCESS and/or FINISH_TIME, indicating that the task has completed. The final time when the task finished is included in this line.

- Lines starting with "ReduceAttempt", similar to the MapAttempt tasks, report on the intermediary status of the tasks, and when the keyword SUCCESS is included, the finish time of the sort and shuffle phases will also be included.

ReduceAttempt TASK_TYPE="REDUCE" TASKID="task_201012161155_0004_r_000005"

TASK_ATTEMPT_ID="attempt_201012161155_0004_r_000005_0" START_TIME="1294325924281"

TRACKER_NAME="tracker_hadoop102:localhost/127\.0\.0\.1:40971" HTTP_PORT="50060" .
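Since every history line is a record type followed by KEY="value" fields, the lines are easy to pick apart with a regular expression. The sketch below is plain Java with a hypothetical class name (HistoryLine). It assumes that values contain no embedded double quotes, which holds for the short fields shown above but has not been checked against the long counter strings. It computes how long the map task in the example ran.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Extracts KEY="value" fields from one line of a job history log.
// HistoryLine is a hypothetical helper written for this illustration.
class HistoryLine {

    // Assumption: a value never contains a double quote.
    private static final Pattern FIELD = Pattern.compile("(\\w+)=\"([^\"]*)\"");

    // Returns the fields of the line, in the order in which they appear.
    static Map<String, String> parse(String line) {
        Map<String, String> fields = new LinkedHashMap<>();
        Matcher m = FIELD.matcher(line);
        while (m.find()) {
            fields.put(m.group(1), m.group(2));
        }
        return fields;
    }

    public static void main(String[] args) {
        // Two lines copied from the example above (continuation lines joined, other fields omitted).
        String start = "Task TASKID=\"task_201012161155_0004_m_000000\" TASK_TYPE=\"MAP\" "
                     + "START_TIME=\"1294325917422\"";
        String end = "MapAttempt TASK_TYPE=\"MAP\" TASKID=\"task_201012161155_0004_m_000000\" "
                   + "TASK_STATUS=\"SUCCESS\" FINISH_TIME=\"1294325918358\"";

        long elapsedMs = Long.parseLong(parse(end).get("FINISH_TIME"))
                       - Long.parseLong(parse(start).get("START_TIME"));
        // prints: task_201012161155_0004_m_000000 took 936 ms
        System.out.println(parse(start).get("TASKID") + " took " + elapsedMs + " ms");
    }
}
```

The times are milliseconds since the Unix epoch, so subtracting a START_TIME from a FINISH_TIME gives the duration of a task directly.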

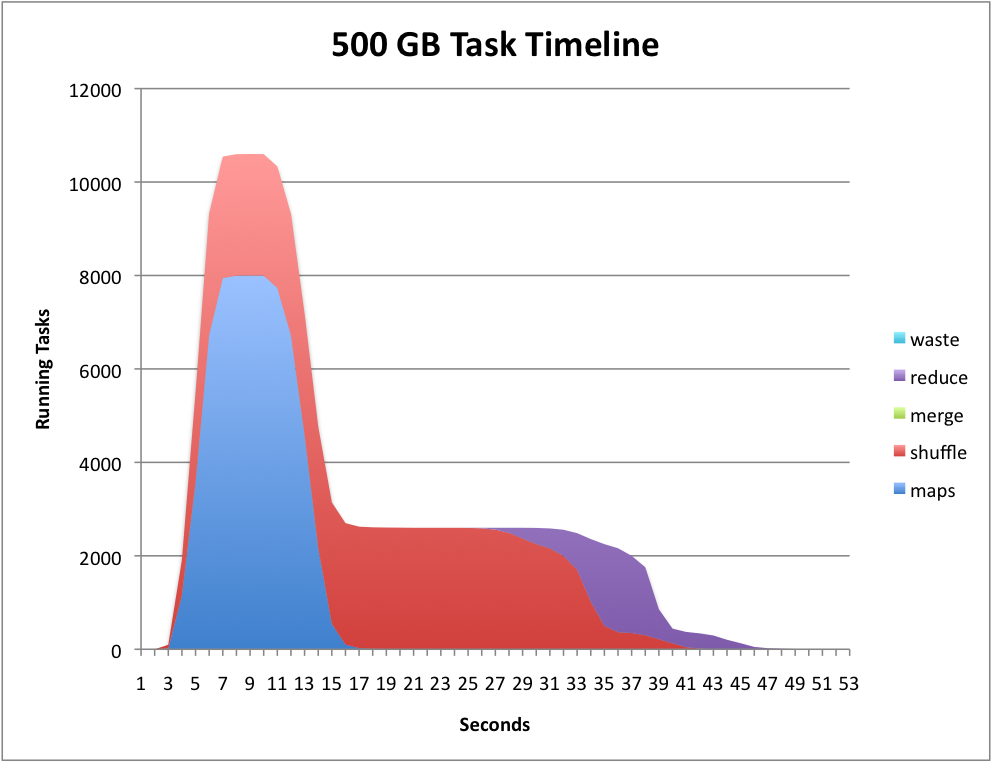

Generating Task Timelines

- Below is an example of a Task Timeline:

- See Generating Task Timelines for a series of steps that will allow you to generate Task Timelines.

Running Your Own Version of WordCount.java

In this section you will get a copy of the wordcount program in your directory, modify it, compile it, jar it, and run it on the Hadoop Cluster.

- Get a copy of the example WordCount.java program that comes with Hadoop:

cd
cd 352/dft      (use your own directory)
cp /Users/hadoop/hadoop/src/examples/org/apache/hadoop/examples/WordCount.java .

- Create a directory in which to store the Java classes:

mkdir wordcount_classes

- Edit WordCount.java and change the package name to package org.myorg; That will be the extent of our modification for this time.

- Compile the new program:

javac -classpath /Users/hadoop/hadoop/hadoop-0.19.2-core.jar -d wordcount_classes WordCount.java

- Create a Java archive (jar) file containing the executables:

jar -cvf wordcount.jar -C wordcount_classes/ .

- Remove the output directory from the last run:

hadoop dfs -rmr dft-output

- Run your program on Hadoop:

hadoop jar /Users/hadoop/352/dft/wordcount.jar org.myorg.WordCount dft dft-output

- Check the results

hadoop dfs -ls dft-output
hadoop dfs -cat dft-output/part-00000
" 34
"'A 1
"'About 1
"'Absolute 1
"'Ah!' 2
"'Ah, 2
...

Moment of Truth: Compare 5-PC Hadoop cluster to 1 Linux PC

- The moment of truth has arrived. How is Hadoop faring against a regular PC running Linux and computing the word frequencies of the contents of Ulysses?

- Step 1: time the execution of WordCount.java on hadoop.

hadoop dfs -rmr dft-output
time hadoop jar /home/hadoop/hadoop/hadoop-0.19.2-examples.jar wordcount dft dft-output

- Observe and record the total execution time (real)

- To compute the word frequency of a text with Linux, we can use Linux commands and pipes, as follows:

cat 4300.txt | tr ' ' '[ret]' | sort | uniq -c [ret]

- where [ret] indicates that you should press the return/enter key. The explanation of what is going on is nicely presented at http://dsl.org, in the Text Concordance recipe.

- Try the command and verify that you get the word frequency of 4300.txt:

2457

1 _

1 _........................

7 -

3 --

2 --_...

5 --...

1 --............

3 --

1 ?...

6 ...

1 ...?

. . .

1 Zouave

1 zouave's

2 zrads,

1 zrads.

1 Zrads

1 Zulu

1 Zulus

1 _Zut!

- Observe the real execution time.
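For a single-machine baseline written in the same language as the Hadoop job, the pipeline above can be approximated in plain Java. This is a rough sketch under stated assumptions: the class name LocalWordCount is made up, tokens are split on any whitespace rather than on single spaces, and empty tokens are skipped, so the result will not match the shell output line for line (the shell version also counts the empty lines that tr creates).

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Map;
import java.util.TreeMap;

// Single-process word count, roughly equivalent to: tr | sort | uniq -c
// LocalWordCount is a hypothetical name for this illustration.
class LocalWordCount {

    // Counts identical tokens; the TreeMap keeps the words sorted.
    static Map<String, Integer> count(String text) {
        Map<String, Integer> freq = new TreeMap<>();
        for (String token : text.split("\\s+")) {
            if (!token.isEmpty()) {
                freq.merge(token, 1, Integer::sum);
            }
        }
        return freq;
    }

    public static void main(String[] args) throws IOException {
        // Usage (assumed): java LocalWordCount 4300.txt
        // Without an argument, a short sample sentence is counted instead.
        String text = args.length > 0
            ? new String(Files.readAllBytes(Paths.get(args[0])), StandardCharsets.UTF_8)
            : "to be or not to be";

        long startNs = System.nanoTime();
        Map<String, Integer> freq = count(text);
        long elapsedMs = (System.nanoTime() - startNs) / 1_000_000;

        freq.forEach((word, n) -> System.out.println(n + " " + word));
        System.err.println("counted " + freq.size() + " distinct words in " + elapsedMs + " ms");
    }
}
```

The elapsed time reported here covers only the counting, not the start-up of the Java virtual machine, so wrap the whole command in time for a figure comparable to the other measurements.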


Instead of map-reducing just one text file, make the cluster work on several books at once. Michael Noll points to several downloadable books in his tutorial on setting up Hadoop on an Ubuntu cluster:

Download them all and time the execution time of hadoop on all these books against a single Linux PC.


Counters

- Counters are a nice way of keeping track of events taking place during a MapReduce job. Hadoop will merge the values of all the counters generated by the different tasks and will display the totals at the end of the job.

- The program sections below illustrate how we can create two counters to count the number of times the map function is called, and the number of times the reduce function is called.

- All we need to do is to create a new enum set in the MapReduce class, and to ask the reporter to increment the counters.


public class WordCount extends Configured implements Tool {

  /**
   * define my own counters
   */
  enum MyCounters {
    MAPFUNCTIONCALLS,
    REDUCEFUNCTIONCALLS
  }

  /**
   * Counts the words in each line.
   * For each line of input, break the line into words and emit them as
   * (<b>word</b>, <b>1</b>).
   */
  public static class MapClass extends MapReduceBase
    implements Mapper<LongWritable, Text, Text, IntWritable> {

    private final static IntWritable one = new IntWritable(1);
    private Text word = new Text();

    public void map(LongWritable key, Text value,
                    OutputCollector<Text, IntWritable> output,
                    Reporter reporter) throws IOException {
      // increment task counter
      reporter.incrCounter( MyCounters.MAPFUNCTIONCALLS, 1 );

      String line = value.toString();
      StringTokenizer itr = new StringTokenizer(line);
      while (itr.hasMoreTokens()) {
        word.set(itr.nextToken());
        output.collect(word, one);
      }
    }
  }

  /**
   * A reducer class that just emits the sum of the input values.
   */
  public static class Reduce extends MapReduceBase
    implements Reducer<Text, IntWritable, Text, IntWritable> {

    public void reduce(Text key, Iterator<IntWritable> values,
                       OutputCollector<Text, IntWritable> output,
                       Reporter reporter) throws IOException {
      int sum = 0;

      // increment reduce counter
      reporter.incrCounter( MyCounters.REDUCEFUNCTIONCALLS, 1 );

      while (values.hasNext()) {
        sum += values.next().get();
      }
      output.collect(key, new IntWritable(sum));
    }
  }

...

- When we run the program we get, for example:

10/03/31 20:55:24 INFO mapred.FileInputFormat: Total input paths to process : 6
10/03/31 20:55:24 INFO mapred.JobClient: Running job: job_201003312045_0006
10/03/31 20:55:25 INFO mapred.JobClient: map 0% reduce 0%
10/03/31 20:55:28 INFO mapred.JobClient: map 7% reduce 0%
10/03/31 20:55:29 INFO mapred.JobClient: map 14% reduce 0%
10/03/31 20:55:31 INFO mapred.JobClient: map 42% reduce 0%
10/03/31 20:55:32 INFO mapred.JobClient: map 57% reduce 0%
10/03/31 20:55:33 INFO mapred.JobClient: map 85% reduce 0%
10/03/31 20:55:34 INFO mapred.JobClient: map 100% reduce 0%
10/03/31 20:55:43 INFO mapred.JobClient: map 100% reduce 100%
10/03/31 20:55:44 INFO mapred.JobClient: Job complete: job_201003312045_0006
10/03/31 20:55:44 INFO mapred.JobClient: Counters: 19
10/03/31 20:55:44 INFO mapred.JobClient: File Systems
10/03/31 20:55:44 INFO mapred.JobClient: HDFS bytes read=5536289
10/03/31 20:55:44 INFO mapred.JobClient: HDFS bytes written=1217928
10/03/31 20:55:44 INFO mapred.JobClient: Local bytes read=2950830
10/03/31 20:55:44 INFO mapred.JobClient: Local bytes written=5902130
10/03/31 20:55:44 INFO mapred.JobClient: Job Counters
10/03/31 20:55:44 INFO mapred.JobClient: Launched reduce tasks=1
10/03/31 20:55:44 INFO mapred.JobClient: Rack-local map tasks=2
10/03/31 20:55:44 INFO mapred.JobClient: Launched map tasks=14
10/03/31 20:55:44 INFO mapred.JobClient: Data-local map tasks=12
10/03/31 20:55:44 INFO mapred.JobClient: org.myorg.WordCount$MyCounters
10/03/31 20:55:44 INFO mapred.JobClient: REDUCEFUNCTIONCALLS=315743
10/03/31 20:55:44 INFO mapred.JobClient: MAPFUNCTIONCALLS=104996
10/03/31 20:55:44 INFO mapred.JobClient: Map-Reduce Framework
10/03/31 20:55:44 INFO mapred.JobClient: Reduce input groups=110260
10/03/31 20:55:44 INFO mapred.JobClient: Combine output records=205483
10/03/31 20:55:44 INFO mapred.JobClient: Map input records=104996
10/03/31 20:55:44 INFO mapred.JobClient: Reduce output records=110260
10/03/31 20:55:44 INFO mapred.JobClient: Map output bytes=9041295
10/03/31 20:55:44 INFO mapred.JobClient: Map input bytes=5521325
10/03/31 20:55:44 INFO mapred.JobClient: Combine input records=923139
10/03/31 20:55:44 INFO mapred.JobClient: Map output records=923139
10/03/31 20:55:44 INFO mapred.JobClient: Reduce input records=205483
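The merging of counters can be pictured with a small plain-Java sketch: each task keeps its own tally, and the totals are obtained by adding the tallies together. This only illustrates the idea and is not how Hadoop implements it; the class CounterSketch and the helper mapTask are hypothetical, and the per-task numbers are made up.

```java
import java.util.EnumMap;
import java.util.Map;

// Illustrates how per-task counter values add up to job-wide totals.
// CounterSketch and mapTask are hypothetical names; Hadoop does this internally.
class CounterSketch {

    enum MyCounters { MAPFUNCTIONCALLS, REDUCEFUNCTIONCALLS }

    // Adds every counter of every task into one set of job-wide totals.
    @SafeVarargs
    static Map<MyCounters, Long> merge(Map<MyCounters, Long>... perTask) {
        Map<MyCounters, Long> totals = new EnumMap<>(MyCounters.class);
        for (Map<MyCounters, Long> task : perTask) {
            task.forEach((counter, n) -> totals.merge(counter, n, Long::sum));
        }
        return totals;
    }

    // Tally of one map task that called map() the given number of times.
    static Map<MyCounters, Long> mapTask(long calls) {
        Map<MyCounters, Long> tally = new EnumMap<>(MyCounters.class);
        tally.put(MyCounters.MAPFUNCTIONCALLS, calls);
        return tally;
    }

    public static void main(String[] args) {
        // Two made-up map tasks: one processed 3 lines, the other 4.
        System.out.println(merge(mapTask(3), mapTask(4)));  // prints {MAPFUNCTIONCALLS=7}
    }
}
```

In the run above, MAPFUNCTIONCALLS (104996) equals the number of map input records, as expected with one call to map per line. REDUCEFUNCTIONCALLS (315743) equals the combine output records plus the reduce input groups (205483 + 110260), which is consistent with the reduce class also being used as the combiner.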
