Difference between revisions of "CSC352 Homework 1 2013"

(→Plot) |

(→Measuring Execution Time) |

||

| (2 intermediate revisions by the same user not shown) | |||

| Line 70: | Line 70: | ||

sys 0m0.074s | sys 0m0.074s | ||

| − | The '''real''' time is the amount of time you experienced waiting in front of your computer. The '''user''' time is the amount of time the | + | The '''real''' time is the amount of time you experienced waiting in front of your computer. The '''user''' time is the amount of time the CPU spent running your program, and not the time it spent running other processes. This time is normally independent of the load of the system, i.e. the number of other users running programs at the same time as you, <font color="red">(section added 9/21/13) but this time is a function of the number of cores in your system. If you have 2 cores, the time reported will be the CPU time measured multiplied by 2!</font>. The [http://en.wikipedia.org/wiki/CPU_time Wikipedia page] on the '''time''' command is clear on this subject: |

| + | |||

| + | <blockquote>'''CPU time and elapsed real time for parallel processing technology'''<br /> | ||

| + | If a program uses parallel processing, total CPU time for that program would be more than its elapsed real time. (Total CPU time)/(Number of CPUs) would be same as elapsed real time if work load is evenly distributed on each CPU and no wait is involved for I/O or other resources. | ||

| + | <br /><br /> | ||

| + | Example: A software application executed on a Hexa-core processor creates three Unix processes for fulfilling the user requirement. Each of these three processes creates two threads, enumerating a total of 6 working threads. Computation is distributed evenly on the 6 independent threads. If no wait for resources is involved, total CPU time is expected to be six times the elapsed real time. | ||

| + | </blockquote> | ||

If you are not using bash as your shell, then the '''time''' application will be the Linux default time application, which usually lives in /usr/bin/time. If you want to use this application instead of the default bash time, you would write: | If you are not using bash as your shell, then the '''time''' application will be the Linux default time application, which usually lives in /usr/bin/time. If you want to use this application instead of the default bash time, you would write: | ||

| Line 85: | Line 91: | ||

</tanbox> | </tanbox> | ||

<br /> | <br /> | ||

| + | |||

==Be Efficient!== | ==Be Efficient!== | ||

<br /> | <br /> | ||

| Line 113: | Line 120: | ||

[[Image:BadSpeedupPlot.png|300px]] | [[Image:BadSpeedupPlot.png|300px]] | ||

| <font color = "green">Something better</font>:<br /> | | <font color = "green">Something better</font>:<br /> | ||

| − | [[Image:BetterSpeedupPlot.gif| | + | [[Image:BetterSpeedupPlot.gif| 400px]] |

|} | |} | ||

<br /> | <br /> | ||

Latest revision as of 15:17, 21 September 2013

--D. Thiebaut (talk) 09:59, 11 September 2013 (EDT)

Contents

Computing Pi using the Monte Carlo Method:

Is it worth multithreading?

A Monte-Carlo Calculation of Pi, by Michael Jay Schillaci, Ph.D. at Roberts Wesleyan College, is a good introduction to computing Pi using Monte-Carlo (See Section 1.3).

The whole idea is to throw darts at a square target in which lies a concentric circle whose diameter is the same as the side of the square. After throwing a huge number N of darts randomly, i.e. not aiming for the circle but aiming just for the square, the ratio of holes created by the darts inside the circle, which we'll call Nc, relative to N is approximately Pi/4. The larger N is, the closer to Pi/4 the ratio becomes.

The goal for this assignment is to compute the speedup of a multithreaded java application where each thread computes its own version of Nc given N. The main program accumulates the different Nc values and the different N values from the threads and reports the resulting value of Pi, and the elapsed user time in ms.

Here is a simple serial version of the Monte Carlo program:

/* MonteCarloPi.java

* D. Thiebaut

* A simple demo program for computing an approximation of Pi using

* the Monte Carlo method.

*

*/

import java.util.Random;

public class MonteCarloPi {

static Random generator = new Random( System.currentTimeMillis() );

/**

* @param args

*/

public static void main(String[] args) {

long N = 10000000;

if ( args.length > 1 )

N = Long.parseLong( args[0] );

long Nc = 0;

for ( long i=0; i<N; i++ ) {

float x = generator.nextFloat()*2 - 1; // random float in [-1,1]

float y = generator.nextFloat()*2 - 1; // random float in [-1,1]

if ( x*x + y*y <= 1.0f )

Nc += 1;

}

System.out.println( N + " random samples yield an approximation of Pi = " + 4.0*Nc/N );

}

}

To run the program, save it in a file called MonteCarloPi.java and proceed as follows (user input is in bold):

javac MonteCarloPi.java java MonteCarloPi 10000000 random samples yield an approximation of Pi = 3.140818

Your Assignment

Write a multithreaded version of this code and measure its execution times when it runs with 2 up to 20 threads. Look at the solution page for the challenges of the 9/10 lecture for inspiration. In particular, you may find using a script to run all your test cases very useful!

Measuring Execution Time

To measure execution times, there are usually two different time program one can use. One is part of the bash shell, so if the computer you are connected to via a shell runs bash as the default shell, then the output of the time application will look like this (on beowulf):

time java MonteCarloPi 10000000 random samples yield an approximation of Pi = 3.1415208 real 0m1.014s user 0m0.361s sys 0m0.074s

The real time is the amount of time you experienced waiting in front of your computer. The user time is the amount of time the CPU spent running your program, and not the time it spent running other processes. This time is normally independent of the load of the system, i.e. the number of other users running programs at the same time as you, (section added 9/21/13) but this time is a function of the number of cores in your system. If you have 2 cores, the time reported will be the CPU time measured multiplied by 2!. The Wikipedia page on the time command is clear on this subject:

CPU time and elapsed real time for parallel processing technology

If a program uses parallel processing, total CPU time for that program would be more than its elapsed real time. (Total CPU time)/(Number of CPUs) would be same as elapsed real time if work load is evenly distributed on each CPU and no wait is involved for I/O or other resources.

Example: A software application executed on a Hexa-core processor creates three Unix processes for fulfilling the user requirement. Each of these three processes creates two threads, enumerating a total of 6 working threads. Computation is distributed evenly on the 6 independent threads. If no wait for resources is involved, total CPU time is expected to be six times the elapsed real time.

If you are not using bash as your shell, then the time application will be the Linux default time application, which usually lives in /usr/bin/time. If you want to use this application instead of the default bash time, you would write:

/usr/bin/time java MonteCarloPi 10000000 random samples yield an approximation of Pi = 3.1425748 0.35user 0.01system 0:00.92elapsed 40%CPU (0avgtext+0avgdata 17356maxresident)k 0inputs+64outputs (0major+4501minor)pagefaults 0swaps

Here the user time is slightly different from the previous measurement, but close enough to the previous value (we'll never have exact equality).

For your assignment record the user time for each run of your program.

Be Efficient!

We spent some time working with a flawed parallel program that was using threads to increment in parallel a global variable sum. We did this to better understand synchronization. But in your program, you do not have to do this. You can let the threads increment their own local sum variables, and make the threads add it to a global variable Sum at the end of their computation. This will reduce the number of times they have to synchronize.

Plot

We haven't played with MatPlotLib yet, so using eXcel is fine (or Matlab if you prefer). Actually any plotting application is fine.

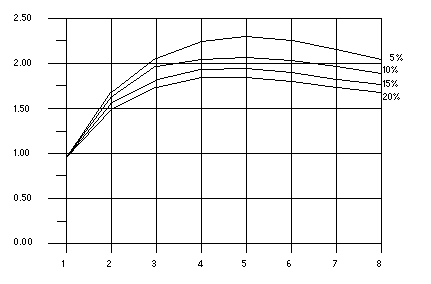

Once you have your setup done, measure the average of three runs of your application for all possible number of threads between 2 and 20, and pot the speedup of your program as a function of number of threads T(t)/T(1), where t is the number of threads. T(1) is the serial time, i.e. the execution time of the serial program without a thread (the program is the thread).

Note: if you can generate a faster version of the serial program given above, please do so! But remember, it has to use the Monte-Carlo approach!

Pick a value of the number of samples N that is large enough so that the 3 user times recorded for each case are close to each other and do not show much variation.

- the fact that the quantity on the Y axis is a speedup as a function of number of threads

- the smallest and largest values for the number of threads

- the type of machine the measurements were taken on, and more importantly how many cores it contains.

Recommendations

An important recommendation: the speedup is a discrete quantity. Do not use lines to represent it. Use discrete symbols instead. You may use lines to link the symbols to indicate a trend, but you should make a mention of the fact that the lines show an extrapolated trend and not real data.

| Something to avoid: both because it uses lines and no symbols, and also because the plot is not labeled correctly. |

Something better: |

Submission

Call your programs MonteCarloPi1.java for the serial version, MonteCarloPiN.java for the multithreaded version. If you used a script call it doMonteCarlo.sh, and submit all your programs using your 352a-xx accounts.

- In the header of MonteCarloN.java, summarize the story you observe in the plot you generated. In particular, answer the following questions:

- Is the speedup ever greater than 1? In other words, is it worth multithreading this application? (make sure you play with different values of N. 100,000,000 is just a value picked from a hat. Something larger may make your graph different).

- Is there a moment where the speedup curve shows that more threads is disadvantageous?

- Is there an optimal number of threads that maximizes the speedup?

- login to your 352a-xx account on beowulf

- rsync or copy-paste your programs in a directory of your choice

- type the command

submit hw1 MonteCarloPi1.java submit hw1 MonteCarloPiN.java submit hw1 doMonteCarlo.sh

- email me a copy of your plot.

Additional Resources

- A nice applet illustrating the Monte Carlo method for computing Pi can be found on the site of the Polymer Dept. at B.U. http://polymer.bu.edu/java/java/montepi/montepiapplet.html

- How to create an XY plot with eXcel