CSC352 Class Page 2013


* '''Tuesday''' | * '''Tuesday''' | ||

| + | *** Introduction to '''measuring performance'''. Comparing execution times. | ||

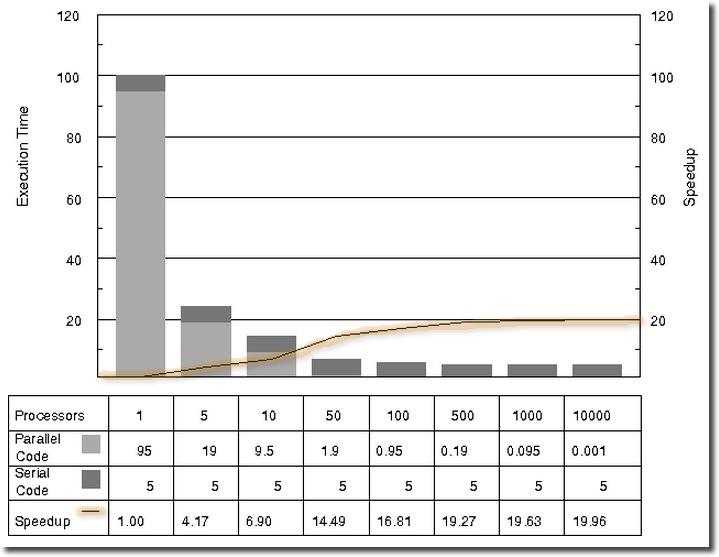

| + | *** Introduction to '''Speedup( ''N'' )''', where ''N'' is the number of threads, or the number of processors. | ||

| + | *** '''Amdahl's Law''' | ||

| + | <center>[[Image:AmdahlsLaw.jpg|450px| right]]</center> | ||

| + | *** '''Processor Utilization''' | ||

| + | <center>[[Image:ParallelProcessorUtilizationDefinition.gif]]</center> | ||

| + | <br /> | ||

| + | <center>[[Image:ParallelProcessorUtilizationGraph.gif|450px|right]]</center> | ||

| + | <br /> | ||

| + | *** What if the two threads in the Parallel Pi program updated a global '''sum''' variable by doing something like this? | ||

| + | |||

| + | sum += thread_sum; | ||

| + | |||

| + | :::where '''thread_sum''' is a local variable used by the thread function to accumulate the terms of the series. | ||

| + | <br /> | ||

** [[CSC352: Computing Pi and Synchronization| Computing Pi and Synchronization]] | ** [[CSC352: Computing Pi and Synchronization| Computing Pi and Synchronization]] | ||

* '''Thursday''' | * '''Thursday''' | ||
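Measuring performance and computing Speedup(N) can be sketched in a few lines of Python, using the usual definition Speedup(N) = T(1) / T(N): the execution time with one thread divided by the execution time with N threads or processors. This is a minimal sketch, not the course's Parallel Pi code. The helper names `measure`, `speedup`, and `leibniz_terms` are hypothetical, and the Leibniz series for pi (pi/4 = 1 - 1/3 + 1/5 - ...) is an assumed stand-in workload.

```python
import time
from typing import Callable


def measure(func: Callable[[], object], repeats: int = 3) -> float:
    """Return the best wall-clock time, in seconds, of func() over several runs.

    Keeping the minimum of a few runs reduces noise from other processes.
    """
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        func()
        best = min(best, time.perf_counter() - start)
    return best


def speedup(t_serial: float, t_parallel: float) -> float:
    """Speedup(N) = T(1) / T(N): serial time over time on N threads/processors."""
    return t_serial / t_parallel


def leibniz_terms(start: int, stop: int) -> float:
    """Assumed workload: sum terms start..stop-1 of pi/4 = 1 - 1/3 + 1/5 - ..."""
    return sum((-1.0) ** k / (2 * k + 1) for k in range(start, stop))


# Usage: time the serial computation of pi with 200,000 terms.
t1 = measure(lambda: 4.0 * leibniz_terms(0, 200_000))
print(f"T(1) = {t1:.3f} s")
```

With a hypothetical parallel version timed as `tn = measure(parallel_run)`, `speedup(t1, tn)` gives Speedup(N). For instance, hypothetical times of 12 s serial and 4 s on 4 processors would give a speedup of 3.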
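Amdahl's Law bounds the speedup of a program that is only partly parallel. In its usual form, if a fraction p of the serial execution time can be parallelized and the remaining 1 - p cannot, then Speedup(N) = 1 / ((1 - p) + p / N), which can never exceed 1 / (1 - p) no matter how many processors are added. The sketch below simply evaluates that formula; the function names are illustrative, not taken from the course material.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Amdahl's Law: speedup on n processors when a fraction p of the
    serial execution time is parallelizable and 1 - p stays serial."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    return 1.0 / ((1.0 - p) + p / n)


def amdahl_limit(p: float) -> float:
    """Upper bound on the speedup as n grows without limit: 1 / (1 - p)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if p == 1.0:
        return float("inf")  # no serial part, so no finite bound
    return 1.0 / (1.0 - p)


# Even with 95% of the work parallelized, the speedup levels off below 20.
for n in (1, 2, 4, 16, 256, 4096):
    print(n, round(amdahl_speedup(0.95, n), 2))
print("limit:", round(amdahl_limit(0.95), 2))
```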

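The definition of processor utilization on this page is an image. The sketch below assumes the common definition U(N) = Speedup(N) / N = T(1) / (N * T(N)), the fraction of the N processors' time spent doing useful work, so U(N) = 1 means all N processors are fully used. Combined with Amdahl's Law (parallel fraction p), this gives U(N) = 1 / (N(1 - p) + p), which decreases as N grows whenever p < 1. The function names are hypothetical.

```python
def utilization(t_serial: float, t_parallel: float, n: int) -> float:
    """Assumed definition: U(N) = Speedup(N) / N = T(1) / (N * T(N))."""
    return t_serial / (n * t_parallel)


def amdahl_utilization(p: float, n: int) -> float:
    """U(N) under Amdahl's Law with parallel fraction p: 1 / (N(1 - p) + p)."""
    return 1.0 / (n * (1.0 - p) + p)


# Utilization of a program that is 90% parallelizable, for growing N.
for n in (1, 2, 4, 8, 16, 32, 64):
    print(f"N = {n:2d}  U = {amdahl_utilization(0.9, n):.2f}")
```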
Revision as of 14:02, 5 September 2013

--D. Thiebaut 11:15, 9 August 2013 (EDT)

Weekly Schedule

| Week | Topics | Reading |
|---|---|---|
| Week 1 9/3 | `Thread 1 ----------------------\|====\|-------------------------> time`<br>`Thread 2 ------------\|====\|-----------------------------------> time`<br>`sum += thread_sum;` | |
| Week 2 9/10 | `sum += thread_sum;` | |
| Week 3 9/17 | | |
| Week 4 9/24 | | |
| Week 5 10/1 | | |
| Week 6 10/8 | | |
| Week 7 10/15 | | |
| Week 8 10/22 | | |
| Week 9 10/29 | | |
| Week 10 11/5 | | |
| Week 11 11/12 | | |
| Week 12 11/19 | | |
| Week 13 11/26 | | |
| Week 14 12/3 | | |
| Week 15 12/10 | | |
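The Parallel Pi question about two threads executing `sum += thread_sum;` on a shared global comes down to the fact that `+=` is a read-modify-write: a thread loads the shared value, adds its partial sum, and stores the result back. If two threads interleave between the load and the store, one update can be lost. The sketch below shows one common fix with Python's `threading` module. It is an assumption-laden illustration, not the course's Parallel Pi program: the Leibniz series as the workload, the names `parallel_pi`, `leibniz_partial`, and `total` (used instead of `sum`, which would shadow a Python built-in), and the `threading.Lock` around the update are all choices made for this example. In CPython the global interpreter lock keeps these threads from speeding up the arithmetic, so the point here is the synchronization pattern, not performance.

```python
import threading


def leibniz_partial(start: int, stop: int) -> float:
    """Assumed series: sum terms start..stop-1 of pi/4 = 1 - 1/3 + 1/5 - ..."""
    thread_sum = 0.0  # local accumulator, private to the calling thread
    for k in range(start, stop):
        thread_sum += (-1.0) ** k / (2 * k + 1)
    return thread_sum


def parallel_pi(n_terms: int, n_threads: int) -> float:
    """Approximate pi by splitting the series across n_threads threads."""
    total = 0.0              # shared accumulator (the global "sum" in the question)
    lock = threading.Lock()  # protects every update of total

    def worker(start: int, stop: int) -> None:
        nonlocal total
        thread_sum = leibniz_partial(start, stop)
        # total += thread_sum is load, add, store; holding the lock makes the
        # three steps one unit, so no thread's contribution is overwritten.
        with lock:
            total += thread_sum

    chunk = (n_terms + n_threads - 1) // n_threads  # ceiling division
    threads = [
        threading.Thread(target=worker,
                         args=(i * chunk, min((i + 1) * chunk, n_terms)))
        for i in range(n_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every partial sum before reading total
    return 4.0 * total


print(parallel_pi(200_000, 2))
```

Each thread accumulates into its own local `thread_sum` and touches the shared `total` only once, so the lock is acquired just `n_threads` times in total.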

Links and Resources

On-Line Resources

- Introduction to Parallel Processing, by Blaise Barney, Lawrence Livermore National Laboratory. A good read. Covers most of the important topics.

- Introduction to MPI, by Blaise Barney, Lawrence Livermore National Laboratory. Another short but excellent treatment of a parallel-processing topic, this time MPI.

- A 90-Minute Guide to Modern Microprocessors

Classics

- Designing and Building Parallel Programs, by Ian Foster. A relatively old reference (1995) that still contains good information.

Papers

- A View of Cloud Computing, by Michael Armbrust, Armando Fox, Rean Griffith, Anthony D. Joseph, Randy Katz, Andy Konwinski, Gunho Lee, David Patterson, Ariel Rabkin, Ion Stoica, and Matei Zaharia, 2010.

- The NIST Definition of Cloud Computing (Draft) (very short paper)

- Nobody ever got fired for using Hadoop on a cluster, by Antony Rowstron, Dushyanth Narayanan, Austin Donnelly, Greg O'Shea, and Andrew Douglas.

- The Landscape of Parallel Computing Research: A View from Berkeley, 2006. Still good! (very long paper)

- Update on a view from Berkeley, 2010. (short paper)

- General-Purpose vs. GPU: Comparisons of Many-Cores on Irregular Workloads, 2010

- Parallel Computing with Patterns and Frameworks, 2010, XRDS.

- Server Virtualization Architecture and Implementation, XRDS, 2009.

- Processing Wikipedia Dumps: A Case-Study comparing the XGrid and MapReduce Approaches, D. Thiebaut, Yang Li, Diana Jaunzeikare, Alexandra Cheng, Ellysha Raelen Recto, Gillian Riggs, Xia Ting Zhao, Tonje Stolpestad, and Cam Le T Nguyen, in proceedings of the 1st Int'l Conf. on Cloud Computing and Services Science (CLOSER 2011), Noordwijkerhout, NL, May 2011. (longer version)

- Beyond Hadoop, Gregory Mone, CACM, 2013. (short paper).

- Understanding Throughput-Oriented Architectures, CACM, 2010.

- Learning from the Success of MPI, by William D. Gropp, Argonne National Lab, 2002.

- The Unreasonable Effectiveness of Data, by Halevy, Norvig, and Pereira, IEEE Intelligent Systems, March 2009, Vol. 24, No. 2, pp. 8-12.