Tutorial: Computing Pi on an AWS MPI-Cluster

--D. Thiebaut (talk) 22:55, 28 October 2013 (EDT)

This tutorial is the continuation of the tutorial Creating an MPI-Cluster on AWS. Make sure you go through it first. The tutorial also assumes that you have valid credentials to access the Amazon AWS system.


Reference Material

I have used

- the MPI Tutorial from mpitutorial.com, and

- the StarCluster quick-start tutorial from the StarCluster project

for inspiration, and adapted them in the present page to set up a cluster for the CSC352 class. Our setup uses MIT's StarCluster package.

An MPI C-Program for Approximating Pi

The program below works for any number of processes on an MPI cluster.
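For reference, the listing that follows evaluates a left Riemann sum of the function f(x) = 4/(1+x²) over the interval [0, 1], whose integral is exactly π. Written out with the same names as the code (n, deltaX, f), the approximation is:

```latex
\pi \;=\; \int_0^1 \frac{4}{1+x^2}\,dx
\;\approx\; \Delta x \sum_{i=0}^{n-1} f(i\,\Delta x),
\qquad f(x) = \frac{4}{1+x^2},
\qquad \Delta x = \frac{1}{n}
```

Each process computes the part of the sum that falls within its own range of indices i, and the manager adds the partial sums together before multiplying by Δx.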

    // piN.c
    // D. Thiebaut
    // Computes Pi using N processes under MPI
    //
    // To compile and run:
    //   mpicc -o piN piN.c
    //   time mpirun -np 2 ./piN 100000000
    //
    // Output
    //   Process 1 of 2 started on beowulf2. N= 50000000
    //   Process 0 of 2 started on beowulf2. N= 50000000
    //   50000000 iterations: Pi = 3.14159
    //
    //   real    0m1.251s
    //   user    0m1.240s
    //   sys     0m0.000s
    //

    #include "mpi.h"
    #include <stdio.h>
    #include <stdlib.h>

    #define MANAGER 0

    //--------------------------------------------------------------------
    // P R O T O T Y P E S
    //--------------------------------------------------------------------
    void doManager( int, int );
    void doWorker( );

    //--------------------------------------------------------------------
    // M A I N
    //--------------------------------------------------------------------
    int main(int argc, char *argv[]) {
        int myId, noProcs, nameLen;
        char procName[MPI_MAX_PROCESSOR_NAME];
        int n;

        if ( argc<2 ) {
            printf( "Syntax: mpirun -np noProcs piN n\n" );
            return 1;
        }

        // get the number of samples to generate
        n = atoi( argv[1] );

        //--- start MPI ---
        MPI_Init( &argc, &argv);
        MPI_Comm_rank( MPI_COMM_WORLD, &myId );
        MPI_Comm_size( MPI_COMM_WORLD, &noProcs );
        MPI_Get_processor_name( procName, &nameLen );

        //--- display which process we are, and how many there are ---
        printf( "Process %d of %d started on %s. n = %d\n",
                myId, noProcs, procName, n );

        //--- farm out the work: 1 manager, several workers ---
        if ( myId == MANAGER )
            doManager( n, noProcs );
        else
            doWorker( );

        //--- close up MPI ---
        MPI_Finalize();
        return 0;
    }

    //--------------------------------------------------------------------
    // The function to be evaluated
    //--------------------------------------------------------------------
    double f( double x ) {
        return 4.0 / ( 1.0 + x*x );
    }

    //--------------------------------------------------------------------
    // The manager's main work function. Note that the function
    // can and should be made more efficient (and faster) by sending
    // an array of 3 ints rather than 3 separate ints to each worker.
    // However the current method is explicit and better highlights the
    // communication pattern between Manager and Workers.
    //--------------------------------------------------------------------
    void doManager( int n, int noProcs ) {
        double sum0 = 0, sum1;
        double deltaX = 1.0/n;
        int i, begin, end;
        MPI_Status status;

        //--- first send n and bounds of series to all workers ---
        end = n/noProcs;
        for ( i=1; i<noProcs; i++ ) {
            begin = end;
            end = (i+1) * n / noProcs;
            MPI_Send( &begin, 1, MPI_INT, i /*node i*/, 0, MPI_COMM_WORLD );
            MPI_Send( &end, 1, MPI_INT, i /*node i*/, 0, MPI_COMM_WORLD );
            MPI_Send( &n, 1, MPI_INT, i /*node i*/, 0, MPI_COMM_WORLD );
        }

        //--- perform summation over 1st interval of the series ---
        begin = 0;
        end = n/noProcs;
        for ( i = begin; i < end; i++ )
            sum0 += f( i * deltaX );

        //--- wait for the partial sums from all the workers ---
        for ( i=1; i<noProcs; i++ ) {
            MPI_Recv( &sum1, 1, MPI_DOUBLE, MPI_ANY_SOURCE, 0, MPI_COMM_WORLD, &status );
            sum0 += sum1;
        }

        //--- output result ---
        printf( "%d iterations: Pi = %f\n", n, sum0 *deltaX );
    }

    //--------------------------------------------------------------------
    // The worker's main work function. Same comment as for the
    // Manager. The 3 ints would benefit from being sent in an array.
    //--------------------------------------------------------------------
    void doWorker( ) {
        int begin, end, n, i;

        //--- get n and bounds for summation from manager ---
        MPI_Status status;
        MPI_Recv( &begin, 1, MPI_INT, MPI_ANY_SOURCE, 0, MPI_COMM_WORLD, &status );
        MPI_Recv( &end, 1, MPI_INT, MPI_ANY_SOURCE, 0, MPI_COMM_WORLD, &status );
        MPI_Recv( &n, 1, MPI_INT, MPI_ANY_SOURCE, 0, MPI_COMM_WORLD, &status );

        //--- sum over boundaries received ---
        double sum = 0;
        double deltaX = 1.0/n;
        for ( i=begin; i< end; i++ )
            sum += f( i * deltaX );

        //--- send result to manager ---
        MPI_Send( &sum, 1, MPI_DOUBLE, MANAGER, 0, MPI_COMM_WORLD );
    }

Creating a 10-node cluster

To create a 10-node cluster, first make sure your current cluster is terminated:

starcluster terminate mycluster

Edit the starcluster configuration file:

    cd
    cd .starcluster
    emacs -nw config      (or use your favorite text editor)

Locate the following line, and set the number of nodes to 10:

    # number of ec2 instances to launch
    CLUSTER_SIZE = 10

Save the config file.

Start up the cluster:

    starcluster start mycluster
    StarCluster - (http://star.mit.edu/cluster) (v. 0.9999)
    Software Tools for Academics and Researchers (STAR)
    Please submit bug reports to starcluster@mit.edu

    *** WARNING - Setting 'EC2_PRIVATE_KEY' from environment...
    *** WARNING - Setting 'EC2_CERT' from environment...
    >>> Using default cluster template: smallcluster
    ...
    >>> Starting cluster took 2.919 mins

    The cluster is now ready to use. To login to the master node
    as root, run:

        $ starcluster sshmaster mycluster
    ...

SSH to the Master node

SSH to the master node of your cluster and verify that you have an mpi folder there, and if not, create one.

    starcluster sshmaster mycluster
    ...
    root@master:~# cd
    root@master:~# cd /home/mpi/
    root@master:/home/mpi#

Return to your Mac command prompt:

root@master:/home/mpi# exit

Upload the piN.c program to the Cluster

Assuming that you have the version of the piN.c program listed at the top of this page somewhere on your Mac, cd to the directory containing the file:

    cd
    cd pathToWhereYourFileIsLocated

You can now upload the local file to the cluster using the starcluster utility:

starcluster put mycluster piN.c /home/mpi/

Create a host file

By default, mpirun (or mpiexec) launches all the processes on the node on which you run the command, because it assumes the cluster contains only that one node. To make it launch processes on the other nodes of the cluster, you need to list the names of all the nodes in a file called the host file.

StarCluster uses a simple system to name the nodes. The master node is called master and the workers are called node001, node002, etc.

Using emacs, create a file called hosts in the /home/mpi directory on your cluster, and put these lines in the file:

    master
    node001
    node002
    node003
    node004
    node005
    node006
    node007
    node008
    node009

The mpirun program uses this file to figure out which nodes to distribute the processes to.

Compile and Run the piN.c program on the 10-node cluster

    starcluster sshmaster mycluster
    ...
    root@master:~# cd /home/mpi/
    root@master:/home/mpi# mpicc -o piN piN.c
    root@master:/home/mpi# cat hosts
    master
    node001
    node002
    node003
    node004
    node005
    node006
    node007
    node008
    node009
    root@master:/home/mpi# mpirun -hostfile hosts -n 10 ./piN 10000000
    Process 1 of 10 started on node001. n = 10000000
    Process 8 of 10 started on node008. n = 10000000
    Process 0 of 10 started on master. n = 10000000
    Process 9 of 10 started on node009. n = 10000000
    Process 3 of 10 started on node003. n = 10000000
    Process 2 of 10 started on node002. n = 10000000
    Process 4 of 10 started on node004. n = 10000000
    Process 7 of 10 started on node007. n = 10000000
    Process 5 of 10 started on node005. n = 10000000
    Process 6 of 10 started on node006. n = 10000000
    10000000 iterations: Pi = 3.141593

Troubleshooting

I have found that once the 10-node cluster had been successfully started and then stopped, it was impossible to restart it, even after terminating it. I followed advice from other AWS/StarCluster users and changed the instance type in the config file from m1.small to m1.medium.

Here's the change that needs to be made in your Mac's ~/.starcluster/config file:

    #NODE_INSTANCE_TYPE = m1.small
    NODE_INSTANCE_TYPE = m1.medium

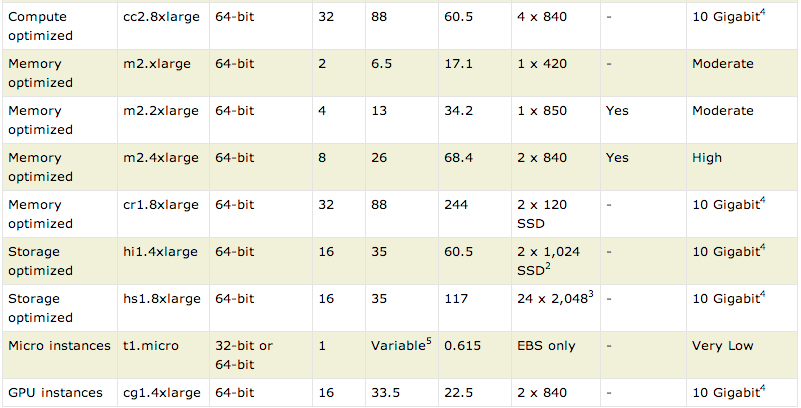

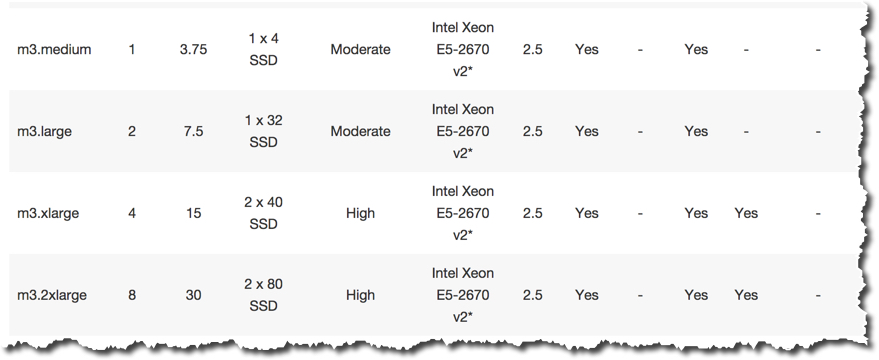

AWS Instance comparison chart

Types of Instances

For reference, below is the comparison chart of the different instance types available to populate a cluster (captured in Oct. 2013; check the up-to-date table on Amazon for the current offerings). The default used by StarCluster is m1.small, but notice that many others are available. Of course, the more powerful machines come at a higher price. But given that some have many cores, their higher cost is often worth the potential boost in performance.

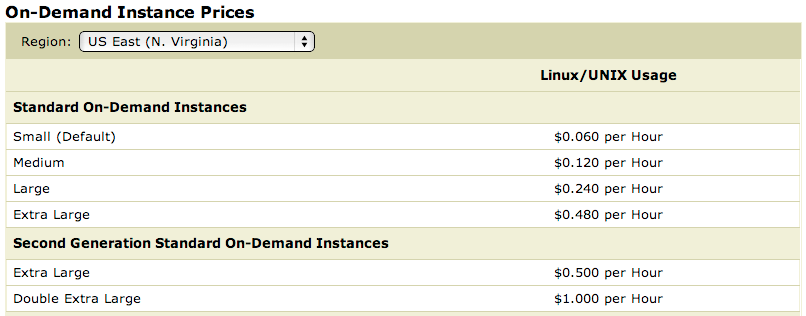

Pricing

The price per hour of renting an instance is given below for the small instances (more can be found on this Amazon page).