Hadoop Tutorial 3.2 -- Using Your Own Streaming WordCount program

--D. Thiebaut 15:21, 18 April 2010 (UTC)

--D. Thiebaut 21:48, 23 June 2010 (UTC) Revised and added Ruby elastic-mapreduce command section

This is Part 2 of the Hadoop on AWS Tutorial. This part covers streaming our own version of the word-count program and applying it to the Ulysses.txt text. It shows how to create the job both through the AWS console and with the Ruby elastic-mapreduce command.

Setup

- We'll do the same thing as when we used the example WordCount program included in Hadoop, but this time we'll upload our own version of the Python program to our S3 folder and run it instead of Amazon's program. We'll use both the console and the Ruby elastic-mapreduce command to start the streaming job.

- Create two Python programs, mapper.py and reducer.py:

#!/usr/bin/env python
# mapper.py
# D. Thiebaut
#
import sys

#--- get all lines from stdin ---
for line in sys.stdin:
    #--- remove leading and trailing whitespace ---
    line = line.strip()

    #--- split the line into words ---
    words = line.split()

    #--- output tuples [word, 1] in tab-delimited format ---
    for word in words:
        print('%s\t%s' % (word, "1"))

and

#!/usr/bin/env python
# reducer.py
# D. Thiebaut
import sys

# maps words to their counts
word2count = {}

# input comes from STDIN
for line in sys.stdin:
    # remove leading and trailing whitespace
    line = line.strip()

    # parse the input we got from mapper.py
    word, count = line.split('\t', 1)

    # convert count (currently a string) to int; skip malformed lines
    try:
        count = int(count)
    except ValueError:
        continue

    # add the count to the running total for this word
    try:
        word2count[word] = word2count[word] + count
    except KeyError:
        word2count[word] = count

# write the tuples to stdout
# Note: they are unsorted
for word in word2count.keys():
    print('%s\t%s' % (word, word2count[word]))

- Make sure the programs contain no errors. Run them on your local machine to check the syntax, and fix any errors you find.

- Create a new subfolder called prog in your S3 main folder, and store mapper.py and reducer.py in it.

- Create a new subfolder called logs in your S3 main folder, if there isn't one there yet. This is where we'll ask MapReduce to store the execution logs.
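One way to do the local check without Hadoop is to imitate the streaming pipeline (map, sort, reduce) in a single Python process. The sketch below is not one of the tutorial's programs: map_line, reduce_pairs and run_local are hypothetical helper names, and their logic only mirrors what mapper.py and reducer.py do.

```python
# Local dry run of the word-count logic: a sketch that mirrors mapper.py
# and reducer.py in one process. The helper names are illustrative only.

def map_line(line):
    """Return the (word, 1) pairs that mapper.py would print for one line."""
    return [(word, 1) for word in line.strip().split()]


def reduce_pairs(pairs):
    """Sum the counts per word, as reducer.py does with its dictionary."""
    word2count = {}
    for word, count in pairs:
        word2count[word] = word2count.get(word, 0) + count
    return word2count


def run_local(lines):
    """Map every line, sort the pairs (as the Hadoop shuffle would), reduce."""
    pairs = []
    for line in lines:
        pairs.extend(map_line(line))
    pairs.sort()
    return reduce_pairs(pairs)


if __name__ == "__main__":
    sample = ["stately plump Buck Mulligan", "Buck Mulligan came"]
    for word, count in sorted(run_local(sample).items()):
        print("%s\t%s" % (word, count))
```

If the counts look right on a few sample lines, the same logic in mapper.py and reducer.py is likely to behave the same way on the cluster, although this does not check the files' own syntax.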

Using the AWS console

Create a New Job Flow

- Create a New Job Flow in the Amazon Elastic MapReduce space.

- This time select Run your own application, and select Streaming as the job type.

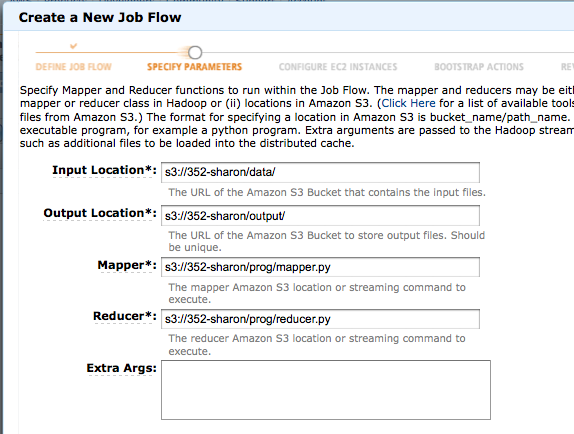

- Specify the parameters as follows:

- Input: s3://yourfolder/data/

- Output: s3://yourfolder/output/

- Mapper: s3://yourfolder/prog/mapper.py

- Reducer: s3://yourfolder/prog/reducer.py

- Extra Args: leave blank

- In Configure EC2 Instances, select 1 instance and enable debugging. Set the S3 log path to s3://yourfolder/logs/

- Create the Job Flow!

Wait

- Click Refresh in the AWS Console to see the status of your Job Flow.

- Wait...

- Wait...

If your job fails...

- Your job may fail. In that case, download all the logs from S3 to your local machine and delete them from S3.

- On your local machine, use grep to find errors in the logs:

cd logs
grep -i error */*
grep -i error */*/*

- Here is an example of what you may find:

task-attempts/attempt_201003182044_0001_m_000001_2/stderr:IndentationError: unexpected indent
task-attempts/attempt_201003182044_0001_m_000001_2/syslog:2010-03-18 20:46:03,363 INFO org.apache.hadoop.streaming.PipeMapRed (Thread-5): MRErrorThread done
task-attempts/attempt_201003182044_0001_m_000001_2/syslog:2010-03-18 20:46:03,449 WARN org.apache.hadoop.mapred.TaskTracker (main): Error running child

- This output indicates that the Python program is not correctly indented. If this is the case, fix the indentation, upload the corrected file to S3, and run the job again.
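The same search can be done in Python, which helps when grep is not available on the local machine. This is a minimal sketch assuming Python 3 and a downloaded logs folder. find_error_lines is a hypothetical helper, and unlike the two grep commands it walks every directory level rather than only the first two.

```python
import os


def find_error_lines(log_dir, needle="error"):
    """Return (relative_path, line) pairs for lines containing needle, ignoring case."""
    hits = []
    for root, _dirs, files in os.walk(log_dir):
        for name in sorted(files):
            path = os.path.join(root, name)
            # errors="replace" keeps the scan going if a log has odd bytes
            with open(path, "r", errors="replace") as handle:
                for line in handle:
                    if needle.lower() in line.lower():
                        hits.append((os.path.relpath(path, log_dir),
                                     line.rstrip("\n")))
    return hits


if __name__ == "__main__":
    # "logs" is the folder downloaded from S3; nothing is printed if it is missing
    for path, line in find_error_lines("logs"):
        print("%s:%s" % (path, line))
```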

If Your Job Runs to Completion

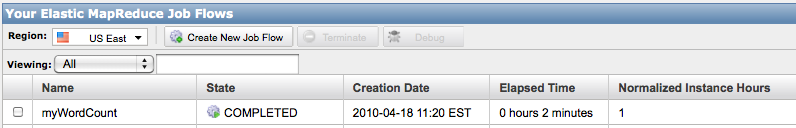

- If your job runs to completion, you should see a COMPLETED status next to your job in the AWS console.

Get the results

- Download the output and logs folders to your local machine, and delete them from S3.

- Check that you get the index of Ulysses:

cat part-0000* | sort

a            6581
a1           1
aaron        2
aback        1
abaft        1
abandon      1
abandoned    7
abandoning   1
abandonment  1
abasement    2
...
zoe          106
zones        1
zoo          2
zoological   1
zouave       2
zrads        4
zulu         1
zulus        1
zurich       1
zut          1

- Check that we get 107 Buck words in the output, as we did in a prior lab:

cat part-0000* | grep Buck | sort

Buck         102
Buckingham   1
Buckled      1
Buckley's.   1
Buckleys     1
Buckshot     1
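The total of 107 is the sum of the six counts listed above. As a quick check, the sketch below adds them up. sum_matching is a hypothetical helper, and the sample lines are copied from the grep output rather than read from the part files.

```python
def sum_matching(lines, substring):
    """Add up the counts of all words containing substring in 'word count' lines."""
    total = 0
    for line in lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip blank or malformed lines
        word, count = parts
        if substring in word:
            total += int(count)
    return total


# The six lines listed by the grep command above.
buck_lines = [
    "Buck\t102", "Buckingham\t1", "Buckled\t1",
    "Buckley's.\t1", "Buckleys\t1", "Buckshot\t1",
]
print(sum_matching(buck_lines, "Buck"))  # prints 107
```

To run it on the real output, pass the lines of the downloaded part files instead of buck_lines.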

Using the Ruby elastic-mapreduce Command

- First install Ruby and the command-line tools, following Amazon's Using the Command Line Interface document.

- Make sure the elastic-mapreduce command is in your executable path.

- Make sure you edit the credentials.json file in the installation directory of elastic-mapreduce.

{
  "access_id": "ABABABABABABAB",                 (use your access Key Id)
  "private_key": "ABCDEFGHIJKLMNOP/QRSTUVW/XYZ", (use your Secret Access Key)
  "keypair": "yourId",                           (use your Id, which is normally the first part of your pem file name)
  "log_uri": "s3://yourBucket/logs"              (the name of your main S3 bucket containing a log directory)
}
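The parenthesized notes in the listing are explanations only. JSON has no comment syntax, so they must not appear in the actual file. A minimal sketch for checking an edited file follows. It assumes the four keys shown above are the ones that matter, and missing_credentials is a hypothetical helper, not part of the elastic-mapreduce tools.

```python
import json

# Keys taken from the listing above; treated here as required (an assumption).
REQUIRED_KEYS = ("access_id", "private_key", "keypair", "log_uri")


def missing_credentials(text):
    """Parse JSON text and return the required keys that are absent or empty.

    Raises ValueError if the text is not valid JSON, for example when the
    parenthesized notes were left in the file.
    """
    settings = json.loads(text)
    return [key for key in REQUIRED_KEYS if not settings.get(key)]


if __name__ == "__main__":
    example = """{
      "access_id": "ABABABABABABAB",
      "private_key": "ABCDEFGHIJKLMNOP/QRSTUVW/XYZ",
      "keypair": "yourId",
      "log_uri": "s3://yourBucket/logs"
    }"""
    print(missing_credentials(example))  # prints []
```

To check the real file, read credentials.json from the installation directory and pass its contents to missing_credentials.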

- Using the S3Fox Organizer, put the mapper.py and reducer.py files in the bin directory in your S3 bucket.

- Put your input text files in an input folder in your S3 bucket. We'll call ours data.

- Make sure there is no folder named s3://yourBucket/output. Hadoop refuses to run a job whose output location already exists.

- Launch the streaming job on EMR with the following command (we assume it is launched from Mac OS X):

elastic-mapreduce --create --stream --num-instances 1 \
  --mapper s3://yourBucket/bin/mapper.py \
  --reducer s3://yourBucket/bin/reducer.py \
  --input s3://yourBucket/data \
  --name 'My WordCount Job' \
  --output s3://yourBucket/output

Created job flow j-141EAOCO6YBKY

- Check in the AWS console that the job starts and runs to completion.
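When the job is launched repeatedly, it can be convenient to build the command from a script. The sketch below assembles the same arguments as the command above. build_stream_command and launch are hypothetical helpers, and the bin, data and output folder names are the ones assumed in this section.

```python
import subprocess


def build_stream_command(bucket, name="My WordCount Job", instances=1):
    """Return the elastic-mapreduce argument list for the streaming job."""
    base = "s3://%s" % bucket
    return [
        "elastic-mapreduce", "--create", "--stream",
        "--num-instances", str(instances),
        "--mapper", base + "/bin/mapper.py",
        "--reducer", base + "/bin/reducer.py",
        "--input", base + "/data",
        "--name", name,
        "--output", base + "/output",
    ]


def launch(command):
    """Run the command; requires elastic-mapreduce in the executable path."""
    subprocess.check_call(command)


if __name__ == "__main__":
    # Only print the command here; call launch(command) to start the job.
    command = build_stream_command("yourBucket")
    print(" ".join(command))
```

Passing the arguments as a list avoids shell quoting problems with the job name, which contains spaces.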

Generate the Timeline

- The information needed is in the logs folder you downloaded from S3.

- In particular, look for the file with a path similar to this one:

logs/j-1TJCCV8GABWSS/jobs/domU-12-31-39-0B-1D-47.compute-1.internal_1271604186590_job_201004181523_0001_hadoop_streamjob5567828275675843370.jar

- Pass this file through generateTimeLine.py as we did in Tutorial 1.1.

cd logs/j-1TJCCV8GABWSS/jobs
cat domU-12-31-39-0B-1D-47.compute-\
1.internal_1271604186590_job_201004181523_0001_hadoop_streamjob5567828275675843370.jar | generateTimeLine.py

time  maps  shuffle  merge  reduce
   0     2        0      0       0
   1     2        0      0       0
   2     2        0      0       0
   3     2        0      0       0
   4     2        0      0       0
   5     2        0      0       0
   6     2        1      0       0
   7     2        1      0       0
   8     2        1      0       0
   9     2        1      0       0
  10     2        1      0       0
  11     2        1      0       0
  12     2        1      0       0
  13     2        1      0       0
  14     1        1      0       0
  15     0        1      0       0
  16     0        1      0       0
  17     0        1      0       0
  18     0        1      0       0
  19     0        1      0       0
  20     0        1      0       0
  21     0        0      1       0
  22     0        0      0       1
  23     0        0      0       1
  24     0        0      0       1
  25     0        0      0       1
  26     0        0      0       1
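To summarize the timeline, we can count for each phase the number of time steps in which at least one task is active. The sketch below does this for the table above, which is pasted in as a string. active_steps is a hypothetical helper and is not part of generateTimeLine.py.

```python
# Timeline printed by generateTimeLine.py, copied from the run above.
TIMELINE = """\
time maps shuffle merge reduce
0 2 0 0 0
1 2 0 0 0
2 2 0 0 0
3 2 0 0 0
4 2 0 0 0
5 2 0 0 0
6 2 1 0 0
7 2 1 0 0
8 2 1 0 0
9 2 1 0 0
10 2 1 0 0
11 2 1 0 0
12 2 1 0 0
13 2 1 0 0
14 1 1 0 0
15 0 1 0 0
16 0 1 0 0
17 0 1 0 0
18 0 1 0 0
19 0 1 0 0
20 0 1 0 0
21 0 0 1 0
22 0 0 0 1
23 0 0 0 1
24 0 0 0 1
25 0 0 0 1
26 0 0 0 1
"""


def active_steps(table_text):
    """Count, per phase, the time steps in which at least one task is active."""
    rows = [line.split() for line in table_text.strip().splitlines()]
    header, data = rows[0], rows[1:]
    counts = dict((phase, 0) for phase in header[1:])
    for row in data:
        for phase, value in zip(header[1:], row[1:]):
            if int(value) > 0:
                counts[phase] += 1
    return counts


if __name__ == "__main__":
    for phase, steps in active_steps(TIMELINE).items():
        print("%-8s %d" % (phase, steps))
```

For this run the map and shuffle phases are each active for 15 time steps, the merge for 1, and the reduce for 5.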